What Your AdSense Benchmark Report Isn’t Telling You

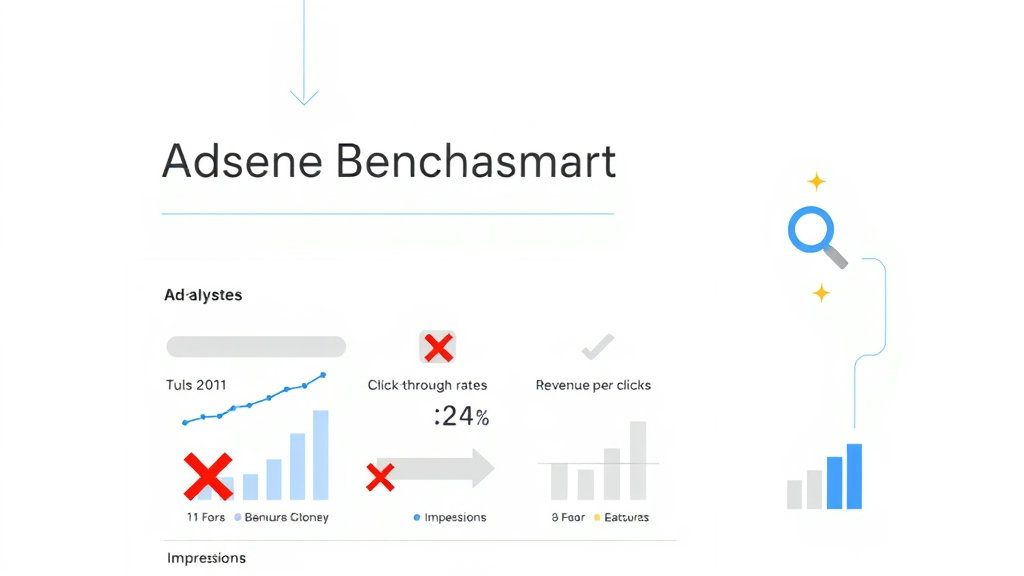

What counts as a “good benchmark” in the first place?

Here’s the fun part about benchmarking in AdSense: Google won’t tell you how they build the benchmark groups, only that it’s based on “similar publishers.” Cool, thanks. I once ran a niche forum with roughly 600 daily sessions, and it showed me a benchmark CTR of 2.3% — which was laughably high for a layout with no sticky elements, no video, and no social spill-in. Three months later, with exactly 0 design changes, my benchmark dropped to 0.9%. No explanation, just vibes.

In AdSense, your homepage ad impressions might weigh 10x as much as your interior article impressions when calculating your “relative performance.” The vague logic here is that highly viewable, typically above-the-fold units have more signal. But this isn’t documented anywhere, and nobody warns you that your best-performing slot is being quietly dismantled by Google’s normalization priorities.

Plus, placement and format biases skew these comparisons into oblivion. Responsive in-article ads don’t track the same way across themes, mobile UIs, or even regional network latency. There’s no magic number. Benchmarks are mostly useful to detect macro trends like “my RPMs have cratered across all page types, just like everyone else in this vertical.” But if you think you’re going to optimize toward outperforming the benchmark group like it’s some leaderboard, you’re chasing a mirage.

Why AdSense RPM jumps (or dies) without logic

This broke me once. Early on, I had an education site with moderate traffic and lots of mid-length posts—the kind that auto ads love to blast with 8 blocks if you don’t clamp it. The RPM was stable around the five-dollar range for weeks… and then one Friday it exploded by over triple overnight. Nothing changed. No viral post, no backend tweak, no campaign update. I assumed a bug.

Turns out, one of my pages was being picked up as a contextual match for a pharma ad blitz targeting education professionals. I only figured this out by dissecting page-level RPM using manual UTM tracking and matching against regional CPC data for that weekend. That level of detective work isn’t possible for 99% of people unless you’ve hooked up AdSense to BigQuery or exported daily logs into Google Sheets for processing.
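If you do export daily page-level numbers, the detective work boils down to computing RPM per page per day and looking for the page that moved. Here is a minimal sketch of that idea. The CSV layout, the column names (`page_views`, `earnings_usd`), and the sample figures are all made up for illustration and are not an AdSense export format.

```python
import csv
import io

# Hypothetical export: one row per page per day. Columns and values are invented.
SAMPLE_CSV = """date,page,page_views,earnings_usd
2024-03-01,/guides/a,400,2.00
2024-03-01,/guides/b,100,0.50
2024-03-02,/guides/a,400,2.00
2024-03-02,/guides/b,100,4.50
"""

def page_rpm_by_day(csv_text):
    """Return {(date, page): RPM}, where RPM = earnings * 1000 / page views."""
    rpm = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        views = int(row["page_views"])
        if views == 0:
            continue  # RPM is undefined with no views
        rpm[(row["date"], row["page"])] = float(row["earnings_usd"]) * 1000 / views
    return rpm

def biggest_movers(rpm, day_before, day_after):
    """Pages present on both days, sorted by RPM change, largest jump first."""
    pages = {page for (day, page) in rpm if day in (day_before, day_after)}
    deltas = []
    for page in pages:
        before = rpm.get((day_before, page))
        after = rpm.get((day_after, page))
        if before is not None and after is not None:
            deltas.append((page, after - before))
    return sorted(deltas, key=lambda item: item[1], reverse=True)

rpm = page_rpm_by_day(SAMPLE_CSV)
for page, delta in biggest_movers(rpm, "2024-03-01", "2024-03-02"):
    print(f"{page}: {delta:+.2f}")
```

In the sample data, `/guides/b` is the only page whose RPM moves, which is the kind of single-page signal a site-wide average hides.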

What AdSense doesn’t tell you is how heavily weighted the top 1% of pages can be on a site-wide RPM. Even two moderately-performing articles can spike the average if high-value ads trigger. Likewise, when those advertisers pull their budget without notice, your RPM can nosedive the next day, making it look like something broke when nothing did.
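The arithmetic behind that is plain weighted averaging: site-wide RPM is total earnings over total page views, so a couple of pages with outsized earnings drag the whole number up. A toy illustration, with every figure invented:

```python
def site_rpm(pages):
    """pages: list of (page_views, earnings_usd). Site RPM = total earnings * 1000 / total views."""
    total_views = sum(views for views, _ in pages)
    total_earnings = sum(earnings for _, earnings in pages)
    return total_earnings * 1000 / total_views

# 98 ordinary pages: 100 views and $0.50 each, i.e. a $5 RPM per page.
ordinary = [(100, 0.50)] * 98

# Scenario A: the remaining 2 pages are ordinary too.
baseline = site_rpm(ordinary + [(100, 0.50)] * 2)

# Scenario B: those 2 pages catch a high-value campaign and earn $15 each.
spiked = site_rpm(ordinary + [(100, 15.00)] * 2)

print(f"baseline site RPM: {baseline:.2f}")  # 5.00
print(f"spiked site RPM:   {spiked:.2f}")    # 7.90
```

Two pages out of a hundred move the site-wide figure from $5.00 to $7.90 here, and it falls straight back when the campaign ends.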

Benchmarks don’t account for traffic quality decay

One rude awakening: not all traffic converts, and AdSense doesn’t care how much content lives on your page if the visitor is from a leaky, retargeted social slot with a bounce rate over 80% and a TTFB of Mars. The benchmarks love to pretend that traffic is a stable metric. It’s not. It curls up and dies on mobile-only deployments with bad CLS and slow render.

What threw me one time was a tutorial post that ranked top three for a beginner JS question. It brought in traffic, sure, but most people were students practicing multiple-choice questions. They weren’t ready to click mortgage offers. My site ended up looking like it was underperforming against its CTR benchmark, because most of my visitors were people that high-paying advertisers had little reason to bid on. Google punished me in the benchmark view, even though the user metrics were exactly what I wanted.

I call this the “qualified traffic misfire,” and it’s not formally explained anywhere in the AdSense dashboard. But somewhere in there, the stew of time-on-page, scroll percentage, and geo-IP data filters through a campaign layer that decides when to show real money. You can have excellent CTRs and garbage RPM. You can have great RPM with 0.2% CTRs. None of that is explained upstream in the benchmark panel.
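The CTR/RPM split is easier to believe with numbers. If you pretend all revenue comes from clicks (a simplification I am assuming here, since some earnings are impression-based), RPM is roughly CTR times average CPC times 1000. The CPC values below are invented:

```python
def rpm_from_ctr(ctr, avg_cpc_usd):
    """Approximate RPM under a clicks-only revenue assumption: CTR * CPC * 1000."""
    return ctr * avg_cpc_usd * 1000

# Lots of clicks, each worth very little.
high_ctr_low_value = rpm_from_ctr(0.03, 0.05)    # 3% CTR, 5-cent clicks

# Hardly any clicks, each worth a lot.
low_ctr_high_value = rpm_from_ctr(0.002, 4.00)   # 0.2% CTR, $4 clicks

print(f"3% CTR at $0.05 CPC:   RPM about {high_ctr_low_value:.2f}")
print(f"0.2% CTR at $4.00 CPC: RPM about {low_ctr_high_value:.2f}")
```

The first page has fifteen times the CTR of the second and still earns a fraction of its RPM, which is why neither metric alone tells you where you stand.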

Auto ads can sabotage your benchmark stats

I had a layout where I manually placed fixed-size ad units between sections: clean enough, mobile-friendly, nothing on top of fragile elements. For fun (bad idea), I turned on auto ads with the vignette and anchor formats, just to see how the extra mobile revenue weighed against the disruption. They raised my revenue a bit, but when I checked “Compared to similar sites,” suddenly I was scoring low on viewability and above-average on page load delay.

Why? Because the anchor ad, even though native to Google’s own scripts, threw off the perceived content load timing on mid-range phones. Even worse, mobile visitors exiting from the anchor closed events were still counted as valid sessions but rarely registered scroll or engagement events. So I was technically showing more ads per session, but they were considered lower quality.

AdSense’s benchmarking logic doesn’t exclude the impact of extra junk that their own code injects. If you enable features like vignette, it can mess with whatever baseline the “similar publisher group” had—especially if they skew minimalist. Nobody warns you that benchmarking compares against groups that might not even use the same ad configuration logic.

Benchmark CTRs vary wildly by language group

This one hit me while working on two nearly identical content hubs: one in English, one in Spanish. Same layout, server, CDN path, even the ad placements. The English version showed a CTR that was about double the Spanish one, but more importantly, the AdSense benchmark group for the Spanish-language site claimed that it was underperforming by something like 35%. Which made no sense, since the click-through rate was consistent.

Eventually, I realized that the benchmark groups are siloed not just by vertical, but by locale and probably ad-spend density within the targeting region. So if you’re serving Spanish content but most of your visitors are from the US, your page ends up flagged as under-delivering because it’s not conforming to the expectations set by regional traffic from Spain or Latin America.

It’s one of those quiet edge cases. The language declared in your `<html lang="...">` tag seems to influence your benchmark cohort. Even if half your traffic is from California and Florida, if your content is written in Spanish, it seems AdSense compares you to pages mostly visited by people in Mexico, Colombia, or Spain.

Benchmark RPM is easily skewed by sticky ad injection

At some point I started experimenting with sticky footers using sticky-container DIVs and in-viewport slot tracking to stick one unit late during scroll. This played nicely with lazy loading and bumped the average per-session ad requests. All fine. But within a week, my AdSense “Estimated Revenue” chart spiked, my site’s RPM jumped, and, paradoxically, benchmarking said I was now “below average” for estimated revenue. Read that again: I made more money and still lost relative score.

The culprit? Most likely, other sites in my benchmark cohort already had sticky ads or higher session repeat rates. When I added the sticky spot, I got a bump, but not enough bump to pierce the normalization ceiling the group already had. So even though I gained revenue, others were gaining more, or the change wasn’t considered meaningful given my total impression count.

This is maddening because it means that benchmarking isn’t additive. It’s relational. If your peers just got smarter faster than you, even a solid improvement makes you look like you’re falling behind.
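A toy model makes the relational part concrete. AdSense does not document how it scores you against the cohort, so the percentile rank below is only my stand-in for "relative score", and the cohort RPMs are invented:

```python
def percentile_rank(value, cohort):
    """Percentage of cohort values strictly below `value`."""
    below = sum(1 for x in cohort if x < value)
    return 100 * below / len(cohort)

# Hypothetical peer RPMs before and after everyone rolls out sticky units.
cohort_before = [3.0, 4.0, 5.0, 6.0, 7.0]
cohort_after = [5.0, 6.0, 7.0, 8.0, 9.0]

# My own RPM improves by a dollar over the same period.
mine_before, mine_after = 5.5, 6.5

print(percentile_rank(mine_before, cohort_before))  # 60.0
print(percentile_rank(mine_after, cohort_after))    # 40.0
```

My RPM went up and my rank went down, because the peers gained two dollars while I gained one.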

The AdSense benchmarking delay is longer than you think

I originally assumed the comparisons were made on a 24h basis. I was wrong by around two days. Turns out, AdSense updates the “Compared to similar sites” info on a lag. Sometimes it’s 48 hours, sometimes longer: I’ve seen it stretch to 4–5 days during months where advertiser budgets reset or when a lot of invalid traffic is being filtered out after the fact.

This matters because any real-time tweak you make will not show up in your benchmark section until it’s well out of memory—and by then, you’ve usually made two more changes out of frustration.

An example: I disabled anchor ads for three days on mobile to measure clean CTR vs clutter, expecting better engagement. CTR ticked up. Bounce rate dropped a little. But the benchmarking still told me I was performing worse than peers on mobile ad visibility. Because I was, just not anymore — I had to wait four days to get the fresh comparison.
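What finally stopped me from stacking changes was writing the wait down. This small helper is a sketch of that habit. The 5-day default comes from the worst lag I saw, and the `settle_days` idea (wanting a few full days of post-change traffic before judging) is my own assumption, not anything AdSense specifies.

```python
from datetime import date, timedelta

def earliest_fair_read(change_day, lag_days=5, settle_days=3):
    """First date the benchmark panel could reflect `settle_days` full
    post-change days, assuming the panel lags by `lag_days`."""
    last_settled_day = change_day + timedelta(days=settle_days)
    return last_settled_day + timedelta(days=lag_days)

def ok_to_tweak_again(today, change_day, lag_days=5, settle_days=3):
    """True once the benchmark could plausibly reflect the previous change."""
    return today >= earliest_fair_read(change_day, lag_days, settle_days)

changed_on = date(2024, 3, 1)
print(earliest_fair_read(changed_on))                  # 2024-03-09
print(ok_to_tweak_again(date(2024, 3, 4), changed_on)) # False
```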

Tips worth stealing from benchmark misfires

- Use `?adtest=on` for layout tests, but also track those visits separately or your average stats will dip during experiments.

- The “first ad slot seen” is massively influential in RPM. Move it earlier in DOM if your theme loads content slowly.

- Check your theme’s sticky headers — they often collide with anchor ads, and can silently suppress them on scroll.

- Compare benchmark metrics only by individual page type (not site-wide) via the AdSense Performance Reports tab for pages.

- Set URL-level path filters — comparing homepage CTR vs article CTR using benchmark averages is junk logic.

- Track specific metric shifts during known ad inventory valleys (e.g., week after Black Friday). It’s easier to distinguish seasonal group swings vs config issues.

- Don’t chase RPM; chase scroll-tracked engagement + time-on-page. Serve your humans and let the ads chase that.
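Two of those tips (keep test visits out of your averages, compare by page type rather than site-wide) are easy to apply to your own exported rows. A rough sketch follows. The path rules, the `(url, impressions, clicks)` row shape, and the sample numbers are all assumptions for illustration.

```python
from urllib.parse import urlsplit, parse_qs

def page_type(url):
    """Bucket a URL into a coarse page type. The rules are hypothetical; adapt to your routes."""
    path = urlsplit(url).path
    if path in ("", "/"):
        return "home"
    if path.startswith("/archive/"):
        return "archive"
    return "article"

def is_test_visit(url):
    """True when the URL carries the adtest=on query parameter."""
    return parse_qs(urlsplit(url).query).get("adtest") == ["on"]

def ctr_by_page_type(rows):
    """rows: iterable of (url, impressions, clicks). Test visits are skipped."""
    totals = {}
    for url, impressions, clicks in rows:
        if is_test_visit(url):
            continue
        bucket = totals.setdefault(page_type(url), [0, 0])
        bucket[0] += impressions
        bucket[1] += clicks
    return {kind: clicks / imps for kind, (imps, clicks) in totals.items() if imps}

rows = [
    ("https://example.com/", 1000, 30),
    ("https://example.com/posts/a", 2000, 10),
    ("https://example.com/posts/a?adtest=on", 500, 0),  # layout test, excluded
    ("https://example.com/archive/2020", 500, 1),
]
for kind, ctr in sorted(ctr_by_page_type(rows).items()):
    print(f"{kind}: {ctr:.3%}")
```

Had the `adtest=on` row been left in, the article CTR would have been diluted by 500 impressions that were never going to produce a click.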

Inclusions and exclusions aren’t transparent

There’s no way to see exactly which pages AdSense excludes when compiling benchmark stats. Are landing pages part of the comparison? AMP pages? Old posts with no traffic but stale ad code? Nope, no visibility. One account I managed had an issue where the top-viewed page was an /archive/ route that had buggy layout and didn’t properly display ads at all. But because that page had constant traffic, the entire site’s baseline viewability got punished.

Found in a raw log: “insufficient ad height – slot ignored” — this meant one ad unit never rendered, but Google still counted the page for benchmarking. Cool.

Worse, there’s no way to know if traffic surges from bots or spam referrals are being filtered before benchmarking is calculated. I once had a $4 RPM site jump to $11 overnight because a scraper hit 50k pages from AWS IPs that weren’t caught by AdSense invalid traffic scans until the following Monday. During that window, my benchmark obviously made me look god-tier. Then it all fell over.
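You cannot see what AdSense filters, but you can at least flag suspicious hits in your own server logs before trusting an RPM spike. A minimal sketch using the standard `ipaddress` module is below. The CIDR blocks are placeholder documentation ranges, not real AWS ranges (AWS publishes its actual ranges as a JSON file you would load instead), and the `(ip, path)` log shape is an assumption.

```python
import ipaddress

# Placeholder networks from the reserved documentation blocks, NOT real cloud ranges.
SUSPECT_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("198.51.100.0/24", "203.0.113.0/24")
]

def is_suspect(ip):
    """True if the address falls inside any suspect network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in SUSPECT_NETWORKS)

def split_hits(hits):
    """hits: iterable of (ip, path). Returns (clean, suspect) lists."""
    clean, suspect = [], []
    for ip, path in hits:
        (suspect if is_suspect(ip) else clean).append((ip, path))
    return clean, suspect

hits = [
    ("192.0.2.10", "/posts/a"),
    ("203.0.113.7", "/posts/a"),
    ("203.0.113.8", "/posts/b"),
    ("198.51.100.200", "/archive/2020"),
]
clean, suspect = split_hits(hits)
print(f"{len(suspect)} of {len(hits)} hits came from suspect ranges")
```

If most of a sudden surge lands in the suspect bucket, treat that day's RPM and benchmark position as noise until the invalid-traffic adjustment catches up.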