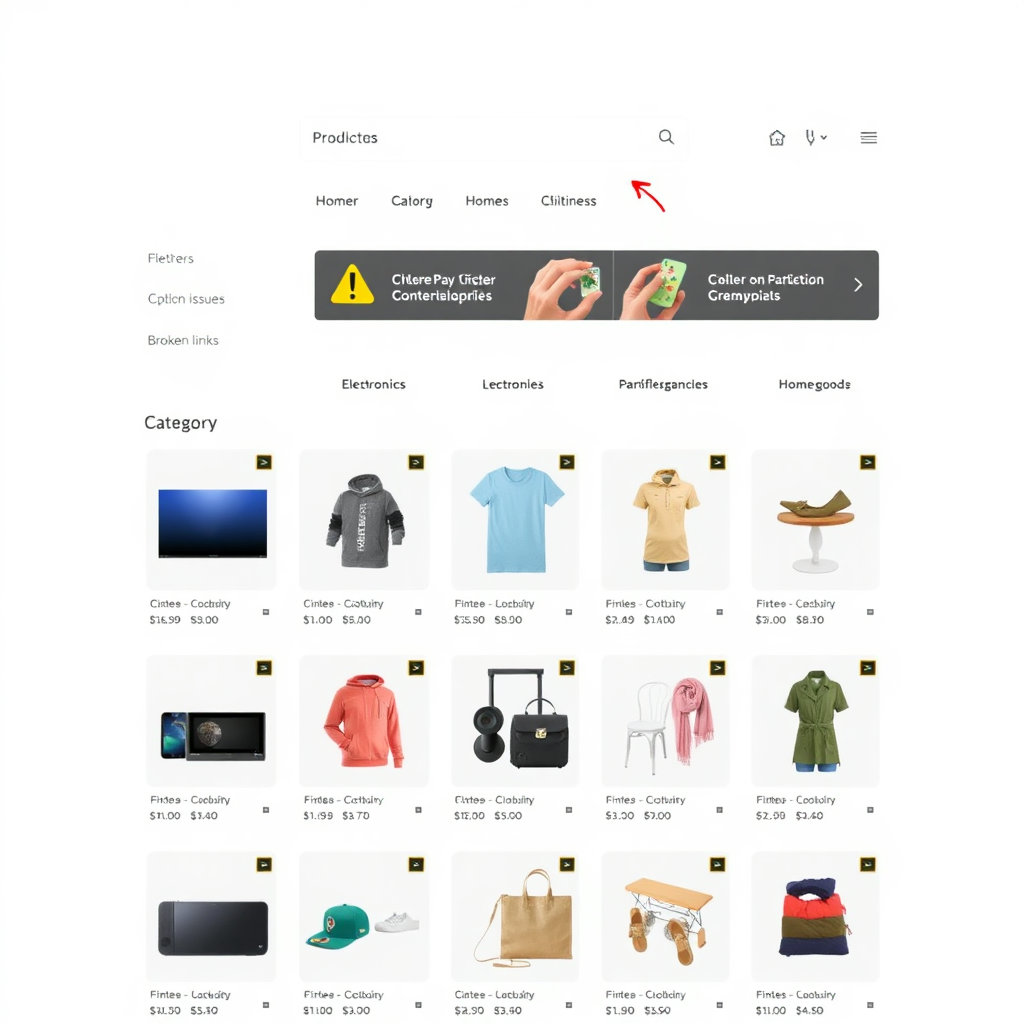

What Actually Breaks Category Page Optimization for Product Discovery

Disorganized Category Hierarchies Ruin Relevance Fast

I once inherited a store where three separate categories (“Headphones,” “Wireless Headphones,” and “Bluetooth Audio Devices”) all pointed to nearly the same 12 SKUs, just sorted differently. This seemed harmless until I reviewed behavior flows: half the users bailed after filtering because their refinements dropped them into duplicate content with janky URLs and zero context continuity. Canonical tags were technically correct but didn’t matter, because Google stopped indexing those pages entirely after a few weeks.

The real issue wasn’t duplicate content, though. It was semantic drift: each category suggested a different shopping intent, but the products weren’t bundled or filtered to match that. It confused search crawlers just as much as users.

If your category URL structure looks consistent but the actual content scope overlaps heavily, you’re going to have discovery and indexing issues that look like AdSense inefficiencies, but they’re actually taxonomy failures.

Fixing it meant collapsing loosely defined categories into broader hubs and leaning into dynamic subfilters, not hard-coded subcategories. Google sees your internal site hierarchy, especially through breadcrumbs and link context—not just your sitemap. If the user (and crawler) ends up on the same product detail page through 5 different silos, expect diluted scoring and mismatched queries in Search Console.
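One way to spot collapse candidates before touching the taxonomy is to measure how much the SKU sets of two categories overlap. This is a minimal sketch, not the tooling I actually used: the `catalog` mapping, the function names, and the 0.8 threshold are all assumptions for illustration.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of SKUs two categories have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def overlapping_categories(
    catalog: dict[str, set[str]], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Return category pairs whose SKU overlap meets the (assumed) threshold."""
    pairs = []
    for name_a, name_b in combinations(sorted(catalog), 2):
        score = jaccard(catalog[name_a], catalog[name_b])
        if score >= threshold:
            pairs.append((name_a, name_b, round(score, 2)))
    return pairs
```

Pairs that come back near 1.0 are the ones worth merging into a single hub with filters, rather than keeping as separate silos.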

AJAX-Based Filters Can Nuke Crawlability Invisibly

Here’s where it gets sneaky: your faceted nav looks great, loads instantly with no visible delay, and categories update as users toggle filters. But inspect that in the Network tab and see what’s actually going on: probably some kind of /filter/products POST call. Guess what Googlebot sees? Nada. It renders the page but doesn’t click filter toggles, so that request never fires for it.

This wouldn’t be catastrophic if the base category page had internal crawlable links to at least the most popular filtered combinations, but most dev teams don’t do that. The assumption is that crawlers will find those views through secondary signals like sitemap entries. They won’t, at least not reliably. If a filtered product list is rendered by JavaScript and no linked fallback exists, it’s dead weight in SEO and AdSense visibility terms.

Add to this that many AJAX responses strip metadata, throw the canonical back to the root category, and completely shatter the concept of contextual subpages. Not only does Google ignore those variations, but so does your own site analytics unless you’re capturing virtual page views manually.
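If a filtered view is supposed to count as its own page, it needs one stable URL no matter what order the user clicked the filters in. Here is a rough sketch of that normalization. The query-string layout and the `filtered_canonical` name are my own assumptions, not something from a particular platform.

```python
from urllib.parse import urlencode


def filtered_canonical(base_path: str, filters: dict[str, list[str]]) -> str:
    """Build one stable URL per filter combination by sorting keys and values."""
    pairs = [
        (key, value)
        for key in sorted(filters)
        for value in sorted(filters[key])
    ]
    if not pairs:
        return base_path  # no filters active: the category itself is canonical
    return f"{base_path}?{urlencode(pairs)}"
```

The same function can feed both the canonical tag in the AJAX response and the internal links on the base category page, so the two never disagree.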

Quick sanity check if your category filters are breaking discoverability:

- Run Google’s Mobile-Friendly Test across 2–3 filtered views—not just the base page.

- Use Test Live URL in Search Console’s URL Inspection tool to verify JavaScript is even executing.

- If you’re hiding over 50% of products behind button states, crawl budget is being wasted.

- Every filter combo that matters should have real internal anchor links somewhere logically navigable—sitemap is not enough.
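The last check on that list is easy to automate against the raw HTML, before any JavaScript runs. A minimal sketch using only the standard library follows. The list of important URLs is something you would supply yourself, and `missing_filter_links` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collects href values from <a> tags in raw, unrendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.add(href)


def missing_filter_links(raw_html: str, important_urls: list[str]) -> list[str]:
    """Return the important filter URLs that have no plain <a href> in the HTML."""
    collector = AnchorCollector()
    collector.feed(raw_html)
    collector.close()
    return [url for url in important_urls if url not in collector.hrefs]
```

Anything this returns is a filter view that only exists behind a button state, which is exactly the case a crawler never reaches.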

Thin Sorting Pages Load Fast—And Earn Nothing

This bit cost me around five bucks a day in lost RPM, per property. We set up various sort orders (like “Highest Rated”, “Newest First”) as their own crawlable URLs to target long-tail search intent. It worked for a bit, and pages started ranking, but then average engagement plummeted. It turns out many of those URLs were returning fewer than 5 products once the sort order was combined with active filters, and AdSense had less content to contextualize.

AdSense punishes this indirectly: not by triggering compliance violations, but by serving broader ads with lower relevance and clickthrough. Low content density dilutes ad context, and you start seeing generic ad units that earn nothing. Worse, LazyLoad setups sometimes didn’t even trigger script rendering by the time AdSense decided what to fill.

The fix was absolutely not to add more products or slow down sorting logic. It was to wrap sort-order paths in a logic layer that checked item count before rendering canonical variations. No more exposing /category/sort/highest-rated if there weren’t actually enough reviews to justify that page.
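The logic layer boils down to one decision per request. Below is a sketch of it under stated assumptions: the threshold of 5 comes from the symptom above and is not a magic number, and the `/sort/` path layout and function name are illustrative.

```python
MIN_ITEMS = 5  # assumed cutoff, based on the "fewer than 5 products" symptom


def sort_page_canonical(
    category_path: str, sort_key: str, item_count: int, min_items: int = MIN_ITEMS
) -> str:
    """Pick the canonical URL for a sorted view based on how many items it lists."""
    base = "/" + category_path.strip("/")
    if item_count >= min_items:
        # Enough content to stand alone: the sorted view is its own canonical.
        return f"{base}/sort/{sort_key}"
    # Too thin: point back at the parent category instead of exposing the variation.
    return base
```

The same check can decide whether the sorted URL gets linked from the category page at all, so thin variations never become entry points.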

Broken Pagination = Broken AdSense Matching

This is what caused our baseline CTR to nosedive. Someone on the front end team removed the rel="next" and rel="prev" logic in a hurry to simplify SSR templates. Pagination worked fine visually, but AdSense suddenly seemed to treat all category pages as flat single screens, so it began testing different ad sizes and layouts under the assumption nothing else followed. RPM dropped from 2.5 to 1.1 in a week. No other changes.

And there’s no warning for this. Search Console won’t flag missing rel pagination. AdSense doesn’t explain mismatched layout targeting unless you’re testing with an AdX-level account. Our breadcrumb path was still intact; the content was still indexable. But the contextual signal from navigation depth vanished, and that flattened the earning potential.

Eventually I stumbled on it because I was rendering a user-agent test through Puppeteer and realized the Lux tag never loaded past page 1 on any crawl simulation. If you’re using Infinite Scroll without progressive rendering (or hard-coded anchor points), rel link headers are your only visibility-in-depth signal. Don’t strip them unless you’re replacing them with something crawlable.
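Putting the pagination hints back is a small amount of template logic. This sketch generates the link tags for a given page. It assumes a `?page=N` URL scheme with page 1 living at the bare category URL, which may not match your routing.

```python
def pagination_links(base_url: str, page: int, total_pages: int) -> list[str]:
    """Return <link rel="prev"/"next"> tags for one page of a paginated category."""
    if not 1 <= page <= total_pages:
        raise ValueError("page out of range")

    def url_for(n: int) -> str:
        # Assumed scheme: page 1 is the bare category URL.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < total_pages:
        tags.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return tags
```

The first and last pages each get a single tag, which is the detail that tends to get lost when templates are simplified.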

Cross-Device Layout Shifts Wreck Mobile Ad Delivery

The acid test here was running PageSpeed’s mobile test while serving AdSense manually and watching CLS jump from the low 0.1s to the 0.4s just because of a minor flexbox bug in the product grid. You ever see AdSense swap a 336×280 with a 300×250 because spacing heuristics triggered a fallback? That’s not a policy violation, but it absolutely tanks performance.

Our layout collapsed a row if certain thumbnails failed to load fast, and that residual jitter made the bottom ad slot jump relative to the user scroll. That one change cost impressions where users thought they had reached the bottom already and bounced. And Google interprets that pattern as low-value traffic—which loops back into your bid weight across the whole network.
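To see why one collapsing row moves the number that much: each layout shift is scored as impact fraction times distance fraction, and shifts that happen close together add up. Here is a rough sketch of the arithmetic. The fractions are made up for illustration, not measured from our pages, and real CLS only sums shifts inside its worst session window.

```python
def layout_shift_score(impact_fraction: float, distance_fraction: float) -> float:
    """Score for one shift: share of viewport affected times share of viewport moved."""
    for value in (impact_fraction, distance_fraction):
        if not 0.0 <= value <= 1.0:
            raise ValueError("fractions must be between 0 and 1")
    return impact_fraction * distance_fraction


def window_cls(shifts: list[tuple[float, float]]) -> float:
    """Sum the scores of shifts that fall in the same session window."""
    return sum(layout_shift_score(impact, distance) for impact, distance in shifts)


# Hypothetical case: a collapsing row touches 75% of the viewport and
# moves content by 25% of the viewport height, and it happens twice.
print(window_cls([(0.75, 0.25), (0.75, 0.25)]))  # 0.375
```

Two jitters from the same grid bug are enough to land in the 0.4 neighborhood, which is why reserving thumbnail dimensions up front matters more than it looks.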

Add to this that ad sizes are still rendered server-side in many caching setups, especially on CDN-imaged product cards. Your fancy responsive grid might behave like a fixed grid to AdSense if you’re not rehydrating dimensions with Resizer.js or something similar.

Real-World Accessibility Oversights That Trigger Policy Enforcement

We got hit once with an Accessibility policy warning—the vague type that just says something like “Must follow accessibility standards for page navigation”. For three days, I thought the issue was alt text. Nope. It was tab order. Category filter dropdowns weren’t keyboard-accessible. No indication of focus, no ARIA attributes. Junk HTML with nice-looking CSS.

AdSense doesn’t manually audit unless it gets pushed from another part of the stack—like Chrome UX reports or Lighthouse audits leaking into trust signals. But once it’s flagged? You’re looking at partial ad disabling even if the rest of the page validates just fine.

The terrible part is: our category pages were correct semantically. H1s, link labels, even skip nav. But VoiceOver users couldn’t select a filter. And since most of our pages loaded entirely new product sets via JavaScript calls on filter change, it meant assistive users were locked out. That behavior apparently crossed the line into render denial—not just poor UX.

We fixed it via 6 core changes (only 2 of which came from docs):

- Every filter button got role="button" and tabindex=0

- Added onKeyDown events for Enter/Space behavior

- ARIA-live region updated on filter change

- Contextual label injected into filter overlays

- Filter selected state reflected in screen-reader text

- Mobile drawer nav allowed swipe and keyboard nav equally
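The first item on that list is the easiest to regress, so it is worth a cheap automated check on rendered templates. This is a sketch of one, not the audit we actually ran. It only catches role="button" elements with a missing or negative tabindex, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that are keyboard-focusable without extra attributes.
NATIVE_FOCUSABLE = {"button", "input", "select", "textarea"}


class RoleButtonAudit(HTMLParser):
    """Flags role="button" elements that keyboard users cannot tab to."""

    def __init__(self) -> None:
        super().__init__()
        self.problems: list[tuple[str, int]] = []  # (tag name, line number)

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if attr.get("role") != "button" or tag in NATIVE_FOCUSABLE:
            return
        tabindex = attr.get("tabindex")
        # Missing tabindex, or a negative one, keeps the element out of tab order.
        if tabindex is None or tabindex.strip().startswith("-"):
            line, _ = self.getpos()
            self.problems.append((tag, line))


def unreachable_buttons(html: str) -> list[tuple[str, int]]:
    """Return (tag, line) for every fake button that is out of the tab order."""
    audit = RoleButtonAudit()
    audit.feed(html)
    audit.close()
    return audit.problems
```

It says nothing about key handlers or live regions, which still need a manual pass with a screen reader, but it does stop the tab-order bug from quietly coming back.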

The wild part? Once that was deployed, we actually saw a slight boost in SERP impressions—not just compliance fixed but better engagement traceability across devices. Never confirmed if that was cause-effect, but it felt real.

Googlebot Ignores Ad-Script Blocks Far Earlier Than You’d Think

This is something I didn’t expect until I experimented. If you’ve got a category page where you’re manually injecting AdSense with latency logic (like waiting for the products to finish loading, then adding the ad), be aware: Googlebot will skip right past that if the main content is visible first. Even if you load the ad 100ms later, it often won’t see it.

I caught this by comparing DOM snapshots using a rendering test out of https://cloudflare.com with their Browser Insights tool. The ad container was injected after .product-grid rendered—and it was dropped entirely from the snapshot. Not just undecorated—flat-out missing in the frame Google captured.

So while you’re thinking “wait for user context,” Google’s thinking “ad not persistent enough, must be bloat”. This behavior gets worse if the ad slot moves on-scroll or if you’re using IntersectionObserver with aggressive thresholds. I’m not saying you should eager-load every ad, but if your monetization placement relies on full grid-rendering before even touching the ad tag, expect crawler-wide under-performance.