Untangling Google AdSense Invalid Clicks and Revenue Loss

AdSense’s Invalid Click Detection Isn’t Actually Precog

There’s a weird myth newer publishers carry: that AdSense’s invalid click protection is some kind of real-time AI shield, like Minority Report. It’s not. Earnings can get clawed back for clicks that happened 30 days ago, or even longer, while you assume everything’s fine. Detection is mostly retroactive: clicks get flagged after batch pattern analysis decides the traffic fits a profile it doesn’t like.

I once had a week where RPMs looked too good to be true — and yep, invalid click deductions wiped maybe 60-something percent of it next payout cycle. The kicker? The traffic was all organic — just shared to a subreddit that, apparently, looked “non-authentic enough.” They’re not rejecting the pageviews. It’s the click behavior that scored weird in their filters, and it doesn’t take a click farm to trigger that.

What clued me in was a line buried in a policy violation email: “User clicked multiple ads within milliseconds.” Actual humans don’t behave like API bots.

Bottom line: Don’t rely on AdSense to detect and block fraud before it hurts your metrics. It flags click patterns after the fact. And if that behavior looks too dense or mechanical — even from legit users — expect clawbacks.

Cloudflare Actually Helped Me Spot a Click Bomb

I wasn’t even looking for fraud — I was debugging my cache settings with Cloudflare, noticed a spike in one of my JS endpoints, and down the rabbit hole I went. That traffic pattern didn’t match botnet scale, but it was consistent and repetitive from a few IP clusters. Turns out someone decided to “help” me by sending automated traffic to my blog posts and triggering ad clicks. Totally not suspicious at all.

Enabling Bot Fight Mode only helped a little. I had to write a Worker that compared referrers and killed requests with stupidly fast repeat behavior. Honestly, the signals were subtle. Some tricks I used:

- Looked for fingerprint strings in headers from free proxy services.

- Compared Cloudflare’s JS challenge failure rates to endpoint usage spikes.

- Tracked ad slot visibility per post in JS (e.g. via IntersectionObserver) and cross-referenced it with real click logs.

- Throttled identical click events within 10 seconds from the same IP/session.

- Wrote a short-term blocklist that only kicks in when a traffic anomaly and high ad CTR occur together.
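
A rough sketch of what the throttling item could look like. This is an in-memory illustration, not the Worker described above: `createClickThrottle` and the IP/session/target key are hypothetical names, the 10-second default just mirrors the figure in the list, and a real Cloudflare Worker would need shared storage because isolates don’t share memory.

```javascript
// Minimal sketch: drop identical click events repeated inside a time window.
// createClickThrottle and the key format are hypothetical, not a Cloudflare API.
function createClickThrottle(windowMs = 10000) {
  const lastAccepted = new Map(); // key -> timestamp of the last accepted click

  return function shouldAccept(ip, sessionId, target, now = Date.now()) {
    const key = `${ip}|${sessionId}|${target}`;
    const previous = lastAccepted.get(key);
    if (previous !== undefined && now - previous < windowMs) {
      return false; // same click repeated too quickly: ignore it
    }
    lastAccepted.set(key, now);
    return true;
  };
}
```

The map grows without bound in this form, so anything long-running would also want to expire old keys.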

The catch? None of this tells AdSense anything directly. It just keeps junk clicks from reaching the ads on my own pages in the first place. That gives AdSense a cleaner behavior stream to look at, which means fewer flags.

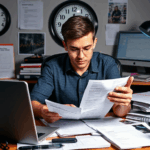

Random Layout Shifts Can Amplify Invalid Click Risk

Okay, so layout-shift-induced misclicks aren’t just a UX problem. They wreck the click patterns your ads generate. A floating ad container pushes content mid-scroll, the user goes to tap a video play button, and “clicks” the ad instead. Now multiply that by hundreds of mobile sessions. Welcome to gray-zone click inflation.

Here’s where I screwed up: I used a non-sticky top nav on mobile, and inserted a responsive ad block underneath it that loaded after page paint. On slower phones, it briefly appeared AFTER the top nav collapsed, reflowing all content downward. Boom, accidental clickfest.

This Is a Platform Bug Disguised as Design Freedom

AdSense didn’t warn about the layout shift directly. They just flagged vague “invalid activity”. I only connected the dots after isolating a 5% CTA click-to-ad-click match ratio in Hotjar. AdSense doesn’t know your layout is sketchy — it only reacts to click behavior that looks misaligned with viewport and ad slot life cycles.

Once I added a min-height to the ad container and pre-reserved space before script load, problem solved. Not permanently — but it bought me enough time to stop the fallout.
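
Here’s roughly what reserving that space can look like in script form. Treat it as a sketch under assumptions: the `.ad-slot` class is hypothetical, and the heights are placeholders to replace with the tallest creative you actually see at each breakpoint. A plain CSS `min-height` rule on the wrapper does the same job.

```javascript
// Hypothetical breakpoints: placeholder heights, not AdSense recommendations.
function reservedHeightFor(viewportWidth) {
  if (viewportWidth < 480) return 280;
  if (viewportWidth < 1024) return 250;
  return 90;
}

// Set a min-height on each wrapper so a late ad fill cannot push content down.
function reserveAdSpace(wrappers, viewportWidth) {
  const px = `${reservedHeightFor(viewportWidth)}px`;
  for (const el of wrappers) {
    el.style.minHeight = px;
  }
  return px;
}

// In a browser, run this inline before the ad script tag.
if (typeof document !== 'undefined') {
  reserveAdSpace(document.querySelectorAll('.ad-slot'), window.innerWidth);
}
```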

Disabling Auto Ads Doesn’t Stop All Dynamic Inserts

If you’ve disabled Auto Ads but previously enabled it sitewide, you may still get residual dynamic placements from cached placement logic or partial enforcement at the crawler level. I had a case where Googlebot rendered ads in sidebars that no longer had Auto placements declared, but were still structured like the original template.

This showed up when I tested with Google’s Ad Experience Report, and somehow “Page covered by ad” was triggered. Zero divs inserted via code. Only when I spoofed a mobile user-agent and throttled the connection to slow 3G did I see the old layouts sneaking back.

The fix? Manually de-register auto ad scripts in your <head> AND clear the site from your AdSense site settings, then re-add it after 48 hours. AdSense caches the structure it previously tested against, and it takes actual time to re-crawl and acknowledge script removals. There’s no official doc saying this — support forums are full of vague “wait and see” threads.

When AdSense Said “No Issue Found” but It Clearly Was

This one still pisses me off. I submitted a manual review because I got a site-level warning for “invalid traffic” — even though I’d just throttled traffic sources and purged any questionable embeds. The review team returned a generic response: “We found no issues and your account remains in good standing.”

Two weeks later, I got a deduction for the exact same timeframe. Turns out they were just slow to update their risk model. The review meant nothing. They didn’t reinstate the lost revenue, just muted the alert.

Here’s the kicker — I finally added manual campaign tagging to every inbound source (e.g., newsletter, Twitter, DEV, etc.) and set up parallel logging of link CTR vs. ad CTR. That’s when I realized one of my shortlink tools was periodically going down and redirecting to my homepage fallback… where a high-paying ad unit lived. Boom: rogue click cluster.
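
A minimal sketch of the tagging and comparison, assuming standard UTM parameters and a made-up row shape (`source`, `arrivals`, `adClicks`) for whatever your logs actually produce. The function names are illustrative only.

```javascript
// Append UTM parameters so each inbound source shows up separately in analytics.
function tagLink(url, source, medium, campaign) {
  const tagged = new URL(url);
  tagged.searchParams.set('utm_source', source);
  tagged.searchParams.set('utm_medium', medium);
  tagged.searchParams.set('utm_campaign', campaign);
  return tagged.toString();
}

// Ad clicks per arrival for each source; rows use a hypothetical shape.
function adClickRateBySource(rows) {
  const rates = {};
  for (const { source, arrivals, adClicks } of rows) {
    rates[source] = arrivals > 0 ? adClicks / arrivals : 0;
  }
  return rates;
}
```

A source whose ad click rate sits far above the others, like a shortlink falling back to the homepage, stands out quickly once the numbers are side by side.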

“Click bottleneck from redirect jump behavior” — not in any doc, but straight out of my analytics dashboard anomalies.

JS Debug Logs Gave Me My Best Lead Yet

You can’t tap into AdSense’s internal event tracking, but the client-side JS of your own page is fair game. I started logging everything at the point of ad render: viewport positions, user interactions, page scrolls. This basic snippet helped me separate automated clicks from legitimate engagement:

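    // Log the viewport coordinates and timestamp of every click on the page.
    window.addEventListener('click', (e) => {
      const x = e.clientX; // horizontal position within the viewport
      const y = e.clientY; // vertical position within the viewport
      const time = Date.now(); // ms since epoch, for comparing against slot events
      console.log(`Click at [${x}, ${y}] @ ${time}`);
    });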

Once I combined that with my own listeners on the adsbygoogle slots, I could see a rough pattern of when and where users were interacting with them. If a click landed before the slot was fully visible, or right after a DOM mutation, that was a big red flag.
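
One way to express that red-flag check, as a sketch. The field names and the 500 ms settle window are assumptions; in a browser, `slotVisibleAt` could come from an IntersectionObserver callback and `lastMutationAt` from a MutationObserver, both recorded with `Date.now()`.

```javascript
// Classify a click against the ad slot's lifecycle.
// All timestamps are milliseconds; field names and settleMs are hypothetical.
function classifyClick({ clickAt, slotVisibleAt, lastMutationAt }, settleMs = 500) {
  // Slot never became visible, or the click landed before it did.
  if (slotVisibleAt === null || clickAt < slotVisibleAt) {
    return 'before-visible';
  }
  // Click landed within settleMs after the most recent DOM change.
  if (
    lastMutationAt !== null &&
    clickAt >= lastMutationAt &&
    clickAt - lastMutationAt < settleMs
  ) {
    return 'after-mutation';
  }
  return 'ok';
}
```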

I also disabled pointer events on fallback ad wrappers during slot loading. That stopped half-baked clicks from triggering when ad units failed to fill and just left empty divs.
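
A small sketch of that guard, assuming you have some signal of your own for when the slot has finished loading. `guardWrapper` is a hypothetical helper, and `wrapper` is whatever element wraps the ad unit.

```javascript
// Block pointer events on a wrapper until the caller says the slot is ready.
// Returns a release function that removes the inline override again.
function guardWrapper(wrapper) {
  wrapper.style.pointerEvents = 'none';
  return function release() {
    wrapper.style.pointerEvents = '';
  };
}
```

Call `guardWrapper(el)` before the ad script runs, then invoke the returned function once the slot has filled. If it never fills, the empty div simply stays unclickable.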

The Misleading Comfort of “Estimated Earnings”

The name gives it away, but if you project revenue from estimated earnings, you’ll eventually get smacked by invalid activity deductions. Late-cycle invalidations are the worst kind. You think you’re earning triple RPM, you start spending against it, and then, thwack, invalidated clicks erase a chunk of it days before payout.

The UI should have a warning system for volatility over a certain threshold. Or at least flag pages with unusually high page CTR. But nope — you only find out when your last 3 days’ worth of earnings go missing without context. Doesn’t help that invalid clicks are deducted globally, so you don’t even know which page took the hit.

I’ve started tracking a ratio of content-CTAs to ad-clicks. If too many sessions are skipping CTA clicks and going straight to ads, it’s probably not natural behavior.
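
A sketch of one way to compute that signal: the share of ad-clicking sessions that never touched a CTA. The session shape (`ctaClicks`, `adClicks`) is an assumption about what your own logging records, and what counts as “too many” is something to calibrate against your own baseline.

```javascript
// sessions: array of { ctaClicks, adClicks } counts per session (hypothetical shape).
// Returns the fraction of ad-clicking sessions that had zero CTA clicks.
function adOnlySessionShare(sessions) {
  const withAdClick = sessions.filter((s) => s.adClicks > 0);
  if (withAdClick.length === 0) return 0;
  const adOnly = withAdClick.filter((s) => s.ctaClicks === 0);
  return adOnly.length / withAdClick.length;
}
```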

Split-Testing Layouts for Ratio Health Helped Stop Fraud Too

This was a side project. I ran a split test where half the users saw inline text links, and half saw content cards with footer CTAs and a banner ad underneath. Over 10,000 sessions, the text link variant drove more conversions but had 3x the invalid click rate. No clue why. Probably the layout felt tighter, or the boundary between links and ads blurred for some users.

So now I feed layout variants through a basic model: forced-scroll + CTA length + ad slot DOM distance + click latency. If too many fast, same-slot clicks cluster on one variant, I kill it. Not perfect — but it’s saved me from triggering automated penalties.
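
The kill rule at the end can be sketched like this. It only covers the click-latency and same-slot clustering part, not the other inputs listed above, and every threshold here (`fastMs`, `maxFastShare`, `minClicks`) is a made-up default rather than a tuned value.

```javascript
// clicks: array of { variant, slotId, latencyMs } (hypothetical shape), where
// latencyMs is the time between the slot becoming visible and the click.
function variantsToKill(clicks, { fastMs = 1000, maxFastShare = 0.3, minClicks = 20 } = {}) {
  const bySlot = new Map(); // "variant|slot" -> { variant, total, fast }
  for (const { variant, slotId, latencyMs } of clicks) {
    const key = `${variant}|${slotId}`;
    const entry = bySlot.get(key) || { variant, total: 0, fast: 0 };
    entry.total += 1;
    if (latencyMs < fastMs) entry.fast += 1;
    bySlot.set(key, entry);
  }

  // Flag a variant when any one of its slots has enough clicks and too many fast ones.
  const flagged = new Set();
  for (const { variant, total, fast } of bySlot.values()) {
    if (total >= minClicks && fast / total > maxFastShare) {
      flagged.add(variant);
    }
  }
  return [...flagged];
}
```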