Real Things That Break in API Management Platforms

Dealing with Token Expiration Across Mixed API Gateways

Here’s what nobody tells you right away: managing OAuth2 access tokens across multiple API gateways doesn’t just suck, it drifts into the surreal. I once had Apigee and AWS API Gateway both wrapped around the same backend microservice, and the token refresh logic started stepping on its own tail. For whatever reason, Apigee would happily pass expired tokens through to the backend, while AWS API Gateway just 403’d instantly with no context, not even a friendly error body.

The kicker? An expired token wasn’t consistently flagged by Apigee’s policies if the expiration occurred in the last ~60 seconds. Turns out it uses its own time window buffer (not documented, at least not clearly), and if JWT validation is wrapped in a custom JavaScript policy, it might treat stale tokens as valid for just long enough to break downstream logging. Cost me an afternoon blaming the wrong microservice.

I now run an extra middleware service that double-checks tokens and logs skews. Ugly, but at least I know what failed when it fails.
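A minimal sketch of what such a double-check could look like, assuming the access token is a JWT with a standard `exp` claim. The function names and the 60-second grace window are illustrative assumptions, not anything Apigee or AWS exposes, and the payload is decoded without signature verification because the goal here is only to log timing skew.

```python
import base64
import json
import time
from typing import Optional


def decode_jwt_claims(token: str) -> dict:
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    # JWT segments are base64url without padding; restore it before decoding.
    payload_b64 += "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(payload_b64))


def expiry_report(token: str, now: Optional[float] = None,
                  grace_window_s: int = 60) -> dict:
    """Report how far past (or before) expiry a token is at time `now`."""
    claims = decode_jwt_claims(token)
    now = time.time() if now is None else now
    seconds_past_expiry = now - claims["exp"]
    return {
        "expired": seconds_past_expiry > 0,
        "seconds_past_expiry": round(seconds_past_expiry, 3),
        # Assumed window: expired tokens that a lenient gateway might still pass.
        "inside_grace_window": 0 < seconds_past_expiry <= grace_window_s,
    }
```

Logging the report next to the gateway’s own decision makes it easier to tell whether the gateway or the backend clock is the one that disagrees.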

Edge Handling: Misbehaving Rate Limiting Logic

Rate limiting sounds innocuous until you try to serve a multi-tenant system and your management platform (say Apigee or Tyk) buckets usage by IP address rather than by user identity. We hit this on a fintech prototype behind a shared corporate proxy: suddenly all of marketing’s test accounts were eating each other’s limits.

Apigee’s quota enforcement looks per API key by default, but if you rely on client IP rules, it’s game over in NATed networks. We ended up embedding an internal user ID in a custom header and writing a per-user quota in shared Redis sessions, completely bypassing what was supposed to be built-in functionality.

That implementation held up… barely. At least until midnight UTC, when all daily quotas reset and we found out our Redis instance didn’t garbage-collect old counters, leading to stale key overflows.
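One way to avoid the stale-counter problem is to give every daily counter a TTL when it is first created. The sketch below assumes a client with redis-py style `incr(key)` and `expire(key, seconds)` methods. `FakeCounterStore` is a hypothetical in-memory stand-in so the example runs without a Redis server, and the key layout and 48-hour TTL are assumptions.

```python
import time


class FakeCounterStore:
    """In-memory stand-in that mimics only incr() and expire()."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._data = {}  # key -> (count, expires_at or None)

    def incr(self, key):
        count, expires_at = self._data.get(key, (0, None))
        if expires_at is not None and self._clock() >= expires_at:
            count, expires_at = 0, None  # key expired, start over
        self._data[key] = (count + 1, expires_at)
        return count + 1

    def expire(self, key, seconds):
        count, _ = self._data[key]
        self._data[key] = (count, self._clock() + seconds)


def allow_request(store, user_id, daily_limit, now=None):
    """Count one request for user_id and report whether it is within quota."""
    now = time.time() if now is None else now
    day = time.strftime("%Y%m%d", time.gmtime(now))  # UTC day bucket
    key = f"quota:{user_id}:{day}"
    count = store.incr(key)
    if count == 1:
        # First hit of the day: let the counter clean itself up after 48h.
        store.expire(key, 2 * 86400)
    return count <= daily_limit
```

Putting the UTC date in the key means the midnight reset needs no job at all, and the TTL keeps yesterday’s keys from piling up.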

Postman Does Not Simulate API Gateway Behavior Accurately

This one’s a classic. Everyone builds their tests in Postman or curl, maybe wraps them in Newman and CI/CD, you get green checkmarks and think life’s good… and then your API call fails in the live prod environment. Why? Because API management platforms often rewrite requests — sometimes headers, sometimes payloads, and yes, even methods under obscure policy layers.

“Request method was ‘OPTIONS’ when expecting ‘POST’” (Apigee log, 2AM deployment).

Happened because Apigee inserted an automatic CORS preflight handling step and stripped the original POST call when the origin wasn’t whitelisted. That extra OPTIONS call never hit my internal logs, because the gateway dropped it upstream. I had to spin up a Cloudflare Worker just to log transparent headers to see what was truly happening.

If you want true-to-platform tests, you can’t skip real-world calls through the actual gateway endpoint. No local mock protects you from platform-side header mutation.
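To spot header mutation, it helps to compare what the client sent against what the backend actually received. The sketch below assumes you have some echo endpoint behind the gateway that returns the headers it saw. That endpoint and the `diff_headers` helper are hypothetical, and only the comparison step is shown.

```python
def diff_headers(sent: dict, received: dict) -> dict:
    """Compare two header maps, ignoring header-name case."""
    sent_l = {name.lower(): value for name, value in sent.items()}
    recv_l = {name.lower(): value for name, value in received.items()}
    shared = sent_l.keys() & recv_l.keys()
    return {
        "dropped": sorted(sent_l.keys() - recv_l.keys()),
        "added": sorted(recv_l.keys() - sent_l.keys()),
        "changed": sorted(k for k in shared if sent_l[k] != recv_l[k]),
    }


if __name__ == "__main__":
    sent = {"Authorization": "Bearer abc", "X-Tenant-Id": "42"}
    received = {"authorization": "Bearer abc", "X-Forwarded-For": "10.0.0.1"}
    print(diff_headers(sent, received))
```

Running this against the real gateway endpoint in CI, rather than a local mock, is what surfaces platform-side rewrites.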

Undocumented Edge Cases with API Developer Portals

If you’re letting devs onboard themselves via a built-in API developer portal (like the one from Apigee or Kong), test it like a troll. I’m serious. One of our test users managed to request an API key with an expiry date in 1970. What was incredible wasn’t that it got approved — it worked, and the key was active.

Turns out Apigee stores expiration metadata separately from the credential state, and if the expiration is in the past yet the key object status is set to “ACTIVE,” the key still works via the quota enforcement engine. We weren’t even checking expiry from the dev portal UI; we assumed the display logic was tied to validation.

We now inject a Cloud Function hook before approval that parses every new dev app and hard-rejects anything that smells like a time-travel timestamp. Still wish there were an actual warning instead of blind acceptance though.
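The core of a hook like that can be quite small. This is a sketch rather than the actual Cloud Function: it assumes the requested expiry arrives as epoch milliseconds (or `None` for no expiry), and the one-year maximum lifetime is an arbitrary illustrative policy.

```python
from datetime import datetime, timedelta, timezone


def check_key_expiry(expires_at_ms, now=None, max_lifetime=timedelta(days=365)):
    """Return (ok, reason) for a requested key expiry in epoch milliseconds."""
    now = now or datetime.now(timezone.utc)
    if expires_at_ms is None:
        return True, "no expiry requested"
    expires_at = datetime.fromtimestamp(expires_at_ms / 1000, tz=timezone.utc)
    if expires_at <= now:
        return False, f"expiry {expires_at.isoformat()} is in the past"
    if expires_at - now > max_lifetime:
        return False, f"expiry {expires_at.isoformat()} exceeds the maximum lifetime"
    return True, "ok"


if __name__ == "__main__":
    # An expiry of 0 ms is the 1970 case described above.
    print(check_key_expiry(0))
```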

Handling WebHooks in API Management Layers

Most API gateways aren’t designed to deal with webhooks correctly. They fake it, kind of. What I mean is, your outbound webhook POSTs — especially those being batched from inside server-side policies — don’t always inherit the original request context. So, things like correlation IDs, auth headers, tenant IDs? Poof.

I noticed our webhooks from Apigee were flipping tenant IDs randomly across calls, because the backend job triggered them in parallel across execution environments, and the gateway didn’t persist the original headers. Our backend engineers went insane trying to trace why events landed in the wrong tenant queues. The fix involved bundling tenant metadata into the body of the webhook, even though originally our plan was to pack everything into headers. Not ideal, but at least we could verify integrity on the receiving side.

Also worth noting: some webhook URLs choke if you don’t include a User-Agent. Twilio’s test endpoint stub trashed every inbound POST until I figured that out. Absolutely not mentioned anywhere in their quickstart.
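A rough sketch of the body-bundling approach, with an HMAC signature so the receiver can verify integrity. The field names, the `X-Signature-SHA256` header, and the User-Agent string are all assumptions made for illustration; adapt them to whatever your receivers expect.

```python
import hashlib
import hmac
import json


def build_webhook(event: dict, tenant_id: str, correlation_id: str, secret: bytes):
    """Serialize the event with its tenant context and sign the exact bytes."""
    body = json.dumps(
        {"tenant_id": tenant_id, "correlation_id": correlation_id, "event": event},
        sort_keys=True,
        separators=(",", ":"),
    ).encode("utf-8")
    signature = hmac.new(secret, body, hashlib.sha256).hexdigest()
    headers = {
        "Content-Type": "application/json",
        "User-Agent": "example-webhook-sender/0.1",  # some receivers reject POSTs without one
        "X-Signature-SHA256": signature,
    }
    return body, headers


def verify_webhook(body: bytes, signature: str, secret: bytes) -> dict:
    """Check the signature over the raw body, then parse it."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise ValueError("signature mismatch")
    return json.loads(body)
```

Because the tenant ID lives inside the signed body, a gateway that drops or shuffles headers can no longer move an event into the wrong tenant queue without the receiver noticing.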

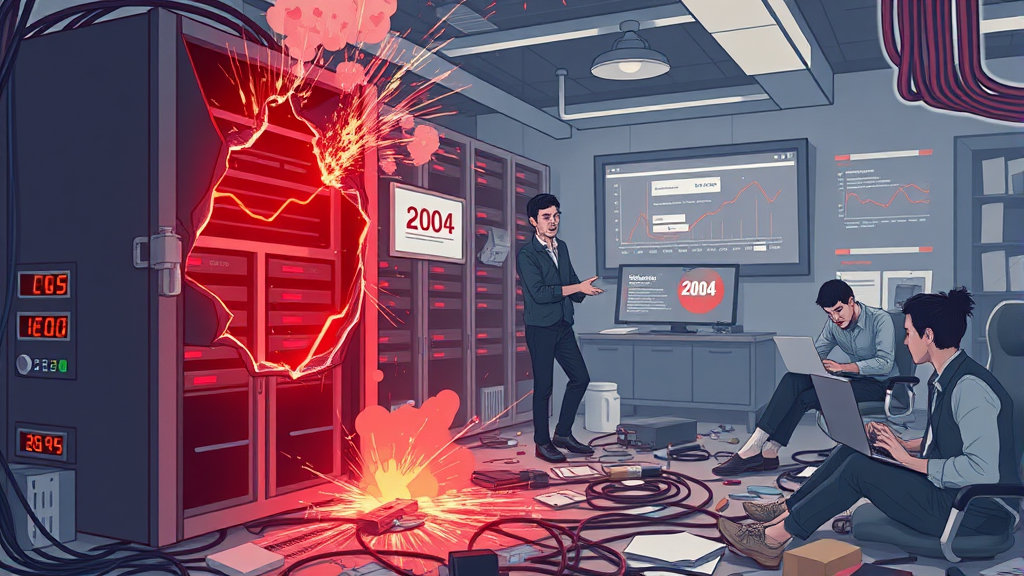

Management Dashboard Metrics That Lie

Platform dashboards are a lie — at least when they’re near-realtime. In one case, Tyk underreported 429s during a load spike because it didn’t log throttled traffic separately. I was looking at graphs that said we served tens of thousands of requests in a spike, but our adoption metrics never moved. Turned out half of them were being blocked, but those weren’t surfacing in the usage logs at all. Only the logs in the control plane had the full picture.

Here’s what I do now:

- Mirror production traffic in a debug tenant, strip sensitive data, log everything in plaintext temporarily

- Set up alerting not just on 5xx errors but on log volume drop-offs (because a drop to zero is often a bigger deal)

- Always double-check quota reports with actual backend hits — not the dashboard’s summarized count

- Cross-reference the control plane logs with per-API invocation IDs, if your gateway can inject those (not all do)

- Disable sampling on logging while investigating behavioral bugs, then re-enable once stable

I still don’t trust the nice green charts no matter how pretty their gradients look.
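The log-volume drop-off alert from the list above can be sketched in a few lines. This assumes you already have per-minute log line counts from somewhere; the window size and threshold are illustrative defaults, not recommendations.

```python
from statistics import median


def volume_dropped(counts_per_minute, window=5, threshold=0.2):
    """True if the latest `window` minutes average below threshold * baseline.

    The baseline is the median of everything before the latest window.
    """
    if len(counts_per_minute) < 2 * window:
        return False  # not enough history to judge
    baseline = median(counts_per_minute[:-window])
    recent = sum(counts_per_minute[-window:]) / window
    return baseline > 0 and recent < threshold * baseline


if __name__ == "__main__":
    history = [120, 118, 125, 119, 121, 122, 3, 0, 0, 1, 0]
    print(volume_dropped(history))
```

Using a median for the baseline keeps a single earlier spike from masking a later collapse.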

Cross-Platform API Management Routing Gone Wrong

We had a client who insisted on using proxy routing rules from both Azure API Management and Cloudflare for different API paths… on the same custom domain. Predictably, origin routing turned into a hell stew. Azure added its own path rewriting rules, and Cloudflare cached one of the rewritten responses perpetually (since the origin never bumped the cache-busting headers).

Users got an outdated response for almost two days and we couldn’t find it at first because only Cloudflare’s cache analytics surfaced it. Azure was logging the proper call — it just wasn’t being hit. Also, turns out Cloudflare normalizes header case but Azure’s logging system treats case-sensitive headers as distinct, so we saw different traffic metrics depending on which monitoring dashboard you asked.
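HTTP header names are case-insensitive, so before comparing numbers from two dashboards it is worth folding the names to one case. A small sketch, assuming you can export per-header counts as a plain mapping (the export itself is not shown and the function name is hypothetical):

```python
from collections import Counter


def merge_header_counts(raw_counts: dict) -> dict:
    """Fold counts for header names that differ only by case."""
    merged = Counter()
    for name, count in raw_counts.items():
        merged[name.lower()] += count
    return dict(merged)


if __name__ == "__main__":
    print(merge_header_counts({"X-Tenant-Id": 3, "x-tenant-id": 2, "Accept": 1}))
```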

The fix? Split subdomains and avoid path-based routing entirely. Service-level DNS routing may seem sloppy, but it avoids header and caching edge cases that aren’t documented anywhere. This was just supposed to be a quick MVP, and they still haven’t moved off that domain split.

Client-Generated API Keys Are a Mistake

I get why people do it — async workflows, user control, etc. — but if clients are allowed to generate their own API keys without a validation proxy, bugs emerge that are pure chaos. One of our integrators managed to base64-encode a UUID incorrectly (not using URL-safe mode) and passed it into GCP’s API Gateway as a key. The key matched against nothing, obviously, but the gateway didn’t say it was invalid — it said 403, unknown identity — which we assumed was a permissions error. Cost two full days tracing.

Eventually someone dumped logs from an IAM call and spotted this clue buried deep in the error payload:

    {
      "status": "PERMISSION_DENIED",
      "debugInfo": {
        "reason": "API_KEY_MISSING",
        "domain": "apiGateway.auth"
      }
    }

This didn’t surface in the client response; it came from the IAM audit logs. So yeah, if you’re letting clients supply their own credentials, wrap that in a staging validator, or don’t expect clean failures.
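A staging validator for this particular failure can be small. The sketch below assumes client keys are supposed to be URL-safe base64 encodings of a 16-byte UUID, which is an assumption about the key format based on the incident above; `parse_client_key` is a hypothetical helper. The explicit alphabet check matters because Python’s `base64.urlsafe_b64decode` would otherwise accept standard-alphabet `+` and `/` as well.

```python
import base64
import re
import uuid

# URL-safe base64 alphabet, with optional trailing padding.
_URLSAFE_B64 = re.compile(r"[A-Za-z0-9_-]+={0,2}")


def parse_client_key(key: str) -> uuid.UUID:
    """Return the UUID encoded in `key`, or raise ValueError with a clear reason."""
    if not _URLSAFE_B64.fullmatch(key):
        raise ValueError("key contains characters outside the URL-safe base64 alphabet")
    stripped = key.rstrip("=")
    padded = stripped + "=" * (-len(stripped) % 4)
    try:
        raw = base64.urlsafe_b64decode(padded)
    except ValueError as exc:  # binascii.Error is a ValueError subclass
        raise ValueError("key is not valid base64") from exc
    if len(raw) != 16:
        raise ValueError(f"key decodes to {len(raw)} bytes, expected 16")
    return uuid.UUID(bytes=raw)
```

Rejecting the key here with a specific message is a lot cheaper than decoding a generic 403 two days later.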