Real Fixes for Google Manual Penalties That Actually Work

Don’t Assume It’s Algorithmic When It’s Manual

This is what gets most people: they assume their traffic dropped because of yet another core update. But sitting in an inbox folder you probably filtered and forgot about, there’s a message from Google Search Console with the subject line you never wanted to see: “Manual action issued.” I’ve had clients ping me after sitting on that for two weeks, wondering why their RPM tanked. You wouldn’t believe how often that happens.

Quick check: in Search Console, go to Security & Manual Actions → Manual Actions. If there’s anything listed there, you’re not dealing with an algo hit — you’re in actual trouble. Different ballgame entirely.

Fun fact: manual penalties don’t always show up on every property variant. I’ve seen one listed on the https property but not on the www one. Set up all five variations just to be safe: the four URL-prefix properties (http and https, each with and without www) plus the Domain property. Google isn’t always logically consistent.

Common Manual Penalties and What They Actually Mean

Google phrases these in a dry tone (“user experience concerns,” “unnatural outbound links”), but here’s how they usually break down in reality:

- Thin Content with Little or No Added Value = you bulk-imported AI-written pages and thought rephrasing the headlines would be enough.

- Unnatural Outbound Links = you sold dofollow links and didn’t even try to disguise them.

- User-generated Spam = your blog comments or forums are a Russian casino.

- Structured Data Abuse = you put review schema on everything, even things that aren’t reviews.

What they don’t tell you: sometimes these are triggered by ancient content. I had one penalty that turned out to be caused by a deeply buried 2015 guest post linking to a now-defunct vape shop. It took forever to locate, and that mess knocked the whole domain out.

How Small Plugins and Auto-Importers Might Be Sabotaging You

Here’s one that fried me last year. I had a content curation plugin running on a content-farm experiment hosted on a subdomain (don’t ask), and it “helpfully” auto-published RSS snippets with full links back to random external sources. Sources that, at some point, had turned into spam portals. I didn’t even know it was still running: that site had 12 sessions a day and I hadn’t touched it in months. The manual penalty hit the whole domain, not just the subdomain.

I’ll just say this: don’t rely on plugin UIs to show you everything that’s happening. Some plugins run on WP-Cron or a cloud scheduler in the background, and that activity doesn’t show up in WordPress logs. The most dangerous plugins are the ones you forgot exist. Use logs that can actually see outbound requests (DNS query logs, such as Cloudflare’s if your server resolves through it) or a proper auditing scanner.
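
If you’d rather audit what actually got published than trust the plugin, here’s a minimal sketch using only Python’s standard library. It pulls every <a href> out of a page’s HTML and flags followed outbound links to domains you haven’t explicitly approved. The allowlist, the `mysite.com` host, and the function names are hypothetical; feed it HTML from a crawl export or saved pages. It skips links already marked nofollow, sponsored, or ugc, on the assumption that followed links are the ones that matter for an outbound-links penalty.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical allowlist: domains you knowingly link to with followed links.
ALLOWED_DOMAINS = {"wikipedia.org", "github.com"}
QUALIFIERS = {"nofollow", "sponsored", "ugc"}


class LinkCollector(HTMLParser):
    """Collects (href, rel) pairs from every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href:
            self.links.append((href, attr_map.get("rel") or ""))


def host_matches(host, domains):
    """True if host is one of `domains` or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def unexpected_outbound_links(html, site_host, allowed=ALLOWED_DOMAINS):
    """Followed outbound links whose domain is not on the allowlist."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    flagged = []
    for href, rel in collector.links:
        host = urlparse(href).hostname
        if host is None or host_matches(host, {site_host}):
            continue  # relative, mailto:, or internal link
        if QUALIFIERS & set(rel.lower().split()):
            continue  # already marked nofollow/sponsored/ugc
        if not host_matches(host, allowed):
            flagged.append(href)
    return flagged


page = (
    '<a href="/about">About</a>'
    '<a href="https://en.wikipedia.org/wiki/RSS">RSS</a>'
    '<a href="https://casino-portal.example.net/win">great deals</a>'
    '<a href="https://partner.example.org/" rel="sponsored">partner</a>'
)
print(unexpected_outbound_links(page, "mysite.com"))
# ['https://casino-portal.example.net/win']
```

Run it across every page of a forgotten subdomain and the zombie RSS links show up fast.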

Google’s Own Timestamps Can Be Misleading

One of the weirdest platform inconsistencies I’ve run into: the date Search Console shows for when the penalty was applied isn’t always correct. I compared the manual action date in GSC against my traffic logs, and it was off by almost five days. I think Google stores when the review was completed, not when the action actually started pulling pages from the index.
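
To check Search Console’s date against your own data, find the first day traffic actually fell off a cliff. This sketch assumes daily sessions (or GSC clicks) as (date, count) pairs and calls a day “the drop” when it falls below half of the trailing 7-day average. Both the window and the 50% threshold are arbitrary choices for illustration, not anything Google documents, and the dates below are synthetic.

```python
from datetime import date, timedelta
from statistics import mean


def first_drop_day(daily, window=7, threshold=0.5):
    """First date whose count falls below `threshold` x the trailing mean.

    daily: list of (date, count) tuples sorted by date, e.g. sessions or
    GSC clicks per day. Returns None if no day qualifies.
    """
    for i in range(window, len(daily)):
        baseline = mean(count for _, count in daily[i - window:i])
        day, count = daily[i]
        if baseline > 0 and count < threshold * baseline:
            return day
    return None


# Synthetic series: steady traffic, then a cliff on the 11th day.
start = date(2024, 3, 1)
series = [(start + timedelta(days=i), 1000 if i < 10 else 200) for i in range(20)]

drop = first_drop_day(series)
gsc_date = date(2024, 3, 16)  # hypothetical date shown in Search Console
print(drop, (gsc_date - drop).days)  # 2024-03-11 5
```

The gap in days is the number worth writing down before you start guessing at causes.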

Also (and this feels criminal) there’s no log of when you submitted a reconsideration request unless you track it yourself. It disappears into the void until they respond, and you just kinda sit there wondering whether you fat-fingered something and it never sent. I literally added Google Calendar events to remind myself I’d actually submitted one.

Reconsideration Request Pitfalls I Learned the Dumb Way

What They Don’t Tell You

Here’s what doesn’t work: groveling, blaming interns, acting confused, or pasting rewritten content chunks with zero Git or URL diffs to prove actual fixes. Google reviewers want short, measurable, boring cleanups. Like:

- “Removed 78 links manually across 54 posts. Here is the Google Sheet with before/after diffs.”

- “Deleted all tag pages with thin content. Archived sitemap updated.”

- “Disabled content plugin X and blocked /autoblog/ in robots.txt.”

And you must show intent. Rewriting 10 out of 1,000 pages doesn’t count unless you explain that the rest are queued for rework or already removed.
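
For the “Google Sheet with before/after diffs” kind of evidence, here’s a sketch that compares two snapshots of your posts and emits one CSV row per removed link, added link, or link whose rel attribute changed. It assumes each snapshot is a dict of post ID to HTML (for example, from a database export taken before and after the cleanup); that shape and the function names are hypothetical.

```python
import csv
import io
from html.parser import HTMLParser


class _LinkRels(HTMLParser):
    """Maps each <a href> in a document to its rel value ("" if none)."""

    def __init__(self):
        super().__init__()
        self.rel_by_url = {}

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attr_map = dict(attrs)
            if attr_map.get("href"):
                self.rel_by_url[attr_map["href"]] = (attr_map.get("rel") or "").strip()


def links_with_rel(html):
    parser = _LinkRels()
    parser.feed(html)
    parser.close()
    return parser.rel_by_url


def link_diff_rows(before_posts, after_posts):
    """Compare {post_id: html} snapshots; yield (post_id, url, change) rows."""
    for post_id in sorted(before_posts.keys() | after_posts.keys()):
        old = links_with_rel(before_posts.get(post_id, ""))
        new = links_with_rel(after_posts.get(post_id, ""))
        for url in sorted(old.keys() | new.keys()):
            if url not in new:
                yield post_id, url, "removed"
            elif url not in old:
                yield post_id, url, "added"
            elif old[url] != new[url]:
                yield post_id, url, f"rel '{old[url]}' -> '{new[url]}'"


def to_csv(rows):
    """Render rows as CSV text, ready to paste into a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["post_id", "url", "change"])
    writer.writerows(rows)
    return buffer.getvalue()


before = {"p1": '<a href="https://spam.example/x">a</a>'
                '<a href="https://partner.example/">b</a>'}
after = {"p1": '<a href="https://partner.example/" rel="sponsored">b</a>'}
print(to_csv(link_diff_rows(before, after)))
```

One row per change is boring on purpose; that’s what reviewers can verify quickly.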

Here’s my actual reconsideration chunk from a penalty I reversed in 2022 — stripped of domain/user data:

We identified and removed outbound links that were previously sponsored but not disclosed. Specifically:

– 28 links removed entirely.

– 12 replaced with rel="sponsored" or rel="nofollow".

– Affected posts edited on 2022-08-17 to 2022-08-19.

No fluff. No drama. Just show that you know what went wrong and that you killed it hard.
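
If you log each link decision during the cleanup, the boring numbers write themselves. A sketch, assuming a hypothetical audit log of (url, action) pairs using the made-up labels removed, sponsored, and nofollow, plus the dates you edited posts:

```python
from collections import Counter
from datetime import date


def reconsideration_summary(decisions, edit_dates):
    """Numbers-only bullet list for a reconsideration request.

    decisions: iterable of (url, action) pairs, where action is one of the
    hypothetical labels "removed", "sponsored", or "nofollow".
    edit_dates: non-empty iterable of datetime.date values.
    """
    counts = Counter(action for _, action in decisions)
    qualified = counts["sponsored"] + counts["nofollow"]
    dates = sorted(edit_dates)
    return "\n".join([
        f"- {counts['removed']} links removed entirely.",
        f'- {qualified} replaced with rel="sponsored" or rel="nofollow".',
        f"- Affected posts edited between {dates[0]} and {dates[-1]}.",
    ])


audit_log = [
    ("https://old-vape-shop.example/", "removed"),
    ("https://casino.example/", "removed"),
    ("https://brand-partner.example/", "sponsored"),
]
print(reconsideration_summary(audit_log, [date(2022, 8, 19), date(2022, 8, 17)]))
```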

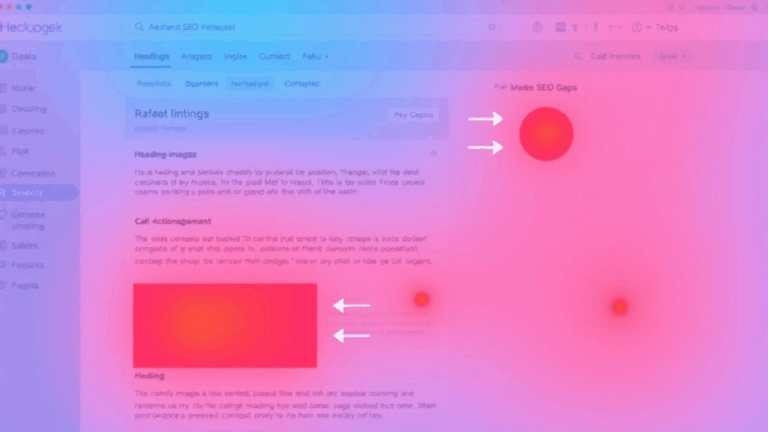

What Google Doesn’t Document About Manual Action Scopes

There’s a nasty undocumented edge case where a manual penalty doesn’t hit EVERY page equally, and you can’t see which ones are impacted via Search Console. I figured this out while stress-debugging a local blog network where only specific categories dropped out of the index. A quick site: check showed it:

site:mydomain.com/tools/ → still indexed

site:mydomain.com/blog/ → all deindexed

This was a “partial match” manual penalty. It’s not explained clearly anywhere, and it’s not obvious even when you can see the penalty in GSC; the UI just says “partial matches” in passing. But leftover sections of your site can still rank, enough to confuse you if you’re only watching totals.

Use advanced search operators like inurl: or site: with a path, or the path filters in Ahrefs/Semrush, to map which content blocks actually got ghosted.
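
If you have per-URL performance exports from before and after the drop (a GSC Performance export by page, or a top-pages report from Ahrefs/Semrush), a few lines of Python can roll clicks up by top-level folder and flag the sections that collapsed. The (url, clicks) input shape and the 10% cutoff are assumptions for illustration, and the numbers below are invented.

```python
from collections import defaultdict
from urllib.parse import urlparse


def section_of(url, depth=1):
    """First `depth` path segments of a URL, e.g. '/blog/' for depth=1."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    return "/" + "/".join(parts[:depth]) + "/" if parts else "/"


def clicks_by_section(rows, depth=1):
    """rows: iterable of (url, clicks). Returns {section: total clicks}."""
    totals = defaultdict(int)
    for url, clicks in rows:
        totals[section_of(url, depth)] += clicks
    return dict(totals)


def ghosted_sections(before_rows, after_rows, max_ratio=0.1, depth=1):
    """Sections whose clicks fell to at most `max_ratio` of their old level."""
    before = clicks_by_section(before_rows, depth)
    after = clicks_by_section(after_rows, depth)
    return sorted(
        section for section, old in before.items()
        if old > 0 and after.get(section, 0) <= max_ratio * old
    )


before = [("https://mydomain.com/tools/a", 500),
          ("https://mydomain.com/blog/x", 800),
          ("https://mydomain.com/blog/y", 200)]
after = [("https://mydomain.com/tools/a", 480),
         ("https://mydomain.com/blog/x", 10)]
print(ghosted_sections(before, after))  # ['/blog/']
```

Bump `depth` to 2 if your categories live one folder deeper.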

Crawler Blockers Can Delay Penalty Reversals

If you’re using a WAF or bot-blocking setup (shoutout to Cloudflare Super Bot Fight Mode), be aware that Google’s review team might be visiting your site from different IPs than the standard crawlers. I say “might” because I once submitted a reconsideration request and got a cryptic rejection. Turns out the /post/ URLs were throwing 403s to Google’s reviewers because I’d forgotten that I’d rate-limited a chunk of ASNs during a scraper siege.
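
To catch the mistake I made, scan your origin access logs for blocked responses on the URLs named in the manual action. A sketch, assuming the standard Apache/Nginx “combined” log format and treating 403 and 429 (a status rate limiters commonly return) as blocked. One caveat: requests that Cloudflare blocks at the edge never reach your origin, so those only show up in Cloudflare’s own security event logs. The IPs below are from documentation ranges.

```python
import re
from collections import Counter

# Assumes the Apache/Nginx "combined" log format; adjust if yours differs.
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<ua>[^"]*)"'
)


def blocked_hits(lines, flagged_prefixes, statuses=("403", "429")):
    """Count blocked requests to flagged paths, keyed by (ip, status, user agent)."""
    prefixes = tuple(flagged_prefixes)
    hits = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if match is None:
            continue  # not in the assumed format
        if match["status"] in statuses and match["path"].startswith(prefixes):
            hits[(match["ip"], match["status"], match["ua"])] += 1
    return hits


sample = [
    '203.0.113.7 - - [10/Mar/2024:13:55:36 +0000] "GET /post/my-article HTTP/1.1" '
    '403 162 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.8 - - [10/Mar/2024:13:56:02 +0000] "GET /tools/calc HTTP/1.1" '
    '403 162 "-" "Mozilla/5.0"',
]
for (ip, status, ua), count in blocked_hits(sample, ["/post/"]).items():
    print(ip, status, count, ua)
```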

Pro tip: temporarily relax your Cloudflare firewall rules or rate limits, and log client IPs and User-Agent headers for a few weeks after submitting the request. If you see a surge of US-based, non-Googlebot traffic hitting the flagged URLs, that’s probably the human review finally happening.
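
To separate real Googlebot from everything else in those logs, Google’s documented check is a reverse DNS lookup on the IP (the hostname should end in googlebot.com or google.com) followed by a forward lookup that must resolve back to the same IP. Here’s a sketch of that check. The lookups are passed in as parameters so it can be tested offline with stubbed answers (the stub hostnames are hypothetical), and the default `socket.gethostbyname_ex` only returns IPv4 addresses. Google’s docs also list other domains for some of its fetchers, so check them before relying on this suffix list.

```python
import socket

GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse DNS, check the domain, then forward DNS back to the same IP.

    Lookups are parameters so the logic can be tested offline; the default
    forward lookup (gethostbyname_ex) only returns IPv4 addresses.
    """
    try:
        hostname = reverse_lookup(ip)[0].rstrip(".").lower()
    except OSError:  # covers socket.herror / socket.gaierror
        return False
    if not hostname.endswith(GOOGLE_CRAWLER_SUFFIXES):
        return False
    try:
        _, _, addresses = forward_lookup(hostname)
    except OSError:
        return False
    return ip in addresses


def fake_reverse(ip):
    # Stubbed PTR answer for offline testing (hypothetical hostname).
    return ("crawl-66-249-66-1.googlebot.com", [], [ip])


def fake_forward(host):
    # Stubbed A-record answer for offline testing.
    return (host, [], ["66.249.66.1"])


print(is_verified_googlebot("66.249.66.1", fake_reverse, fake_forward))  # True
```

Calling `is_verified_googlebot(ip)` with no stubs performs real DNS lookups, so run it on a deduplicated list of IPs rather than every log line.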

AdSense Impacts: Even Non-Monetized Pages Can Tank RPM

I didn’t expect this one: I had a category of pages that weren’t monetized (no AdSense scripts on them), but once that content was hit by a penalty, RPM on the rest of the site tanked, something like a 40% drop sitewide. My guess is that Smart Pricing or some generalized trust score factors in domain-wide quality signals, regardless of whether ads are visible on the flagged pages.

Anecdotally, I copied some content from a penalized domain to a fresh one, stripped tracking, and set up AdSense separately: same traffic, roughly 2.1x the RPM. My notes: identical content, but lower apparent value per impression when it’s served from the previously penalized domain. My hunch is that even after the penalty is lifted, the AdSense quality index lags behind for months (maybe quarters?).
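
For the comparison itself, page RPM is estimated earnings divided by page views, times 1,000. The figures below are made up purely to show the arithmetic behind a roughly 2.1x gap at equal traffic and a 40% sitewide drop; they are not my actual numbers.

```python
def page_rpm(earnings, pageviews):
    """Page RPM: estimated earnings per 1,000 page views."""
    return earnings / pageviews * 1000


def pct_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Hypothetical figures, chosen only to illustrate the arithmetic.
old_domain = page_rpm(earnings=120.0, pageviews=60_000)    # ~2.00
fresh_domain = page_rpm(earnings=252.0, pageviews=60_000)  # ~4.20
print(f"RPM ratio: {fresh_domain / old_domain:.1f}x")  # RPM ratio: 2.1x

pre_penalty = page_rpm(earnings=200.0, pageviews=100_000)   # ~2.00
post_penalty = page_rpm(earnings=120.0, pageviews=100_000)  # ~1.20
print(f"Sitewide RPM change: {pct_change(pre_penalty, post_penalty):.0f}%")  # -40%
```

Comparing RPM rather than raw earnings is what makes “same traffic” a fair test.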

Unverified hunch:

Penalty history might impose a hidden domain-level RPM cap, even after recovery. There’s no direct statement from Google, but I’ve tracked similar patterns on 4+ accounts now.

What Logs Can Actually Help You During a Manual Action

Your typical web analytics stack isn’t built for tracing manual action causes. You need log access or at least enhanced path-level crawl maps. Here’s what helped me find bad actors faster:

- Exporting Apache/Nginx logs with full referrers to trace outbound link patterns.

- Using Cloudflare’s analytics to see requests to old plugin paths that injected auto content.

- Diffing historical sitemap.xml files to track zombie pages showing up in the index.

- Comparing pre- and post-penalty crawl behavior with Screaming Frog (especially for canonical bloat).

- Checking robots.txt fetch responses in GSC’s robots.txt report (which replaced the old ‘Test robots.txt’ tool), since CDNs sometimes serve older versions that hide your recovery fixes.
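
Two of those checks are easy to script with Python’s standard library: diffing two saved sitemap.xml snapshots, and comparing the robots.txt your CDN serves against the one on your origin for the URLs you care about. A sketch; how you fetch the two robots.txt copies is up to your setup, and the URLs are hypothetical. Note that `urllib.robotparser` applies the first matching rule and doesn’t implement Google’s `*`/`$` wildcards or longest-match precedence, so treat results on wildcard rules with suspicion. Sitemap index files aren’t handled here.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Set of <loc> URLs in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def diff_sitemaps(old_xml, new_xml):
    """Return (appeared, disappeared) URL lists between two snapshots."""
    old, new = sitemap_urls(old_xml), sitemap_urls(new_xml)
    return sorted(new - old), sorted(old - new)


def _robots(text):
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def robots_disagreements(origin_txt, served_txt, urls, agent="Googlebot"):
    """URLs whose crawl permission differs between origin and served robots.txt."""
    a, b = _robots(origin_txt), _robots(served_txt)
    return [u for u in urls if a.can_fetch(agent, u) != b.can_fetch(agent, u)]


old_map = ('<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
           '<url><loc>https://mysite.com/a</loc></url>'
           '<url><loc>https://mysite.com/b</loc></url></urlset>')
new_map = old_map.replace("/b<", "/zombie-page<")
print(diff_sitemaps(old_map, new_map))
# (['https://mysite.com/zombie-page'], ['https://mysite.com/b'])

origin = "User-agent: *\nDisallow: /autoblog/\n"
served = origin + "Disallow: /blog/\n"  # e.g. a stale copy still on the CDN
urls = ["https://mysite.com/blog/post", "https://mysite.com/autoblog/x"]
print(robots_disagreements(origin, served, urls))  # ['https://mysite.com/blog/post']
```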

As weird as it sounds, also peek at archive.org diffs now and then. That’s how I found a shady JS injection that came from an outdated snippet in my site footer and was only live across two weekly crawls. Total ghost bug if you’re only looking at raw content.
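
A sketch of that footer-injection hunt: extract every external script URL and inline script body from two saved snapshots and list what’s new in the later one. It assumes you saved the raw HTML of each capture. Wayback’s normal replay view rewrites URLs and adds its own scripts; as far as I know, adding `id_` after the timestamp in a snapshot URL returns the original capture instead, which keeps the diff clean. The sample HTML is invented.

```python
from html.parser import HTMLParser


class ScriptCollector(HTMLParser):
    """Collects external script URLs and inline script bodies."""

    def __init__(self):
        super().__init__()
        self.scripts = set()
        self._in_script = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.scripts.add("src:" + src)
            self._in_script = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_script:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script":
            body = "".join(self._buffer).strip()
            if body:
                self.scripts.add("inline:" + body)
            self._in_script = False


def scripts_in(html):
    parser = ScriptCollector()
    parser.feed(html)
    parser.close()
    return parser.scripts


def new_scripts(old_html, new_html):
    """Scripts present in the newer snapshot but not in the older one."""
    return sorted(scripts_in(new_html) - scripts_in(old_html))


old = '<footer><script src="/js/site.js"></script></footer>'
new = ('<footer><script src="/js/site.js"></script>'
       '<script>document.write("x")</script>'
       '<script src="https://cdn.bad.example/i.js"></script></footer>')
print(new_scripts(old, new))
```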

Manual Recovery Usually Takes Longer Than You Want

If you’re lucky, you’ll get a reviewer in under a week and a clear resolution. But in most cases? You’re looking at 2–4 weeks minimum. There are horror stories of six-plus weeks before any GSC message comes back. Multiple reconsideration rounds are common, especially if you don’t nuke things from orbit on the first attempt.

And yes — goofy as it is — some people swear aggression works better than nuance: deleting entire content types sometimes gets you reinstated faster than surgical editing. I’ve tested it, and yeah, deleting a whole subfolder of affiliate junk worked for me faster than explaining its legitimacy in 700 calm words.

So yeah, bring a hammer, not tweezers.