How Personalization Engines Get It Wrong for Email and Web

Why Your Web Personalization Triggers Feel Late

So the first time I set up a personalization engine on a news-heavy site, we were pulling clickstream data from our CMS, piping it through a semi-okay ETL, and then feeding it into the content recommendation engine with a 5-minute delay. Which felt harmless. Until I watched a user land on a breaking story, linger for 6 seconds, and then get served “Also Trending: Bernese Mountain Dogs” instead of the follow-up article.

The problem isn’t always latency itself. It’s that many systems do weird buffering or skip logic during ingestion. E.g., with Dynamic Yield or Fresh Relevance, if your site’s tagging sends a partial or malformed attribute, the engine may skip segmenting that user and THEN suppress all follow-ups for that visit. Silent fails. No warnings unless you’re combing logs. Love it.

I eventually started sinking real-time events into a Redis layer first and used that to patch-trigger personalization. Janky? Yep. But at least I could guarantee the user’s current session wasn’t completely out of sync with whatever the engine thought they were doing two pageviews ago.
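For a rough idea of what that side layer does, here is a minimal sketch. It uses an in-memory Map as a stand-in for Redis so it runs anywhere, and every name in it (createSessionBuffer, ttlMs, maxEvents) is made up for illustration. The real thing would be something like a capped, expiring list per session.

```javascript
// In-memory stand-in for the Redis layer. All names here are hypothetical.
function createSessionBuffer({ ttlMs = 30 * 60 * 1000, maxEvents = 20, now = Date.now } = {}) {
  const sessions = new Map(); // sessionId -> array of { ...event, at }

  return {
    // Record an event the moment it happens, before any ETL sees it.
    record(sessionId, event) {
      const events = sessions.get(sessionId) || [];
      events.push({ ...event, at: now() });
      // Keep only the newest maxEvents entries (similar in spirit to a capped list).
      sessions.set(sessionId, events.slice(-maxEvents));
    },
    // Return events that are still inside the TTL window, newest first.
    recent(sessionId) {
      const cutoff = now() - ttlMs;
      return (sessions.get(sessionId) || [])
        .filter((e) => e.at >= cutoff)
        .reverse();
    },
  };
}
```

The idea is that the trigger logic asks recent(sessionId) what the user did in the last few seconds instead of waiting on the delayed pipeline.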

Personalization on Email Click-Throughs Only Works Half the Time

Here’s the fun part: when someone clicks from your email, they often land on a URL with so many UTM tags that your on-site personalization framework considers them first-time visitors. I ran into this with Iterable × Optimizely — even though we were stitching visitor IDs via cookies, the extra redirect parameters flipped the behavioral segment enough that half the platform logic failed silently.

If your personalization engine isn’t sticky enough (or you don’t have cross-channel ID stitching configured properly), the user starts from zero on the site — even if they were just there three minutes ago. Their prior browsing, intents, even cart-stage logic? Gone.

This edge case gets messier when you layer in AMP emails or link shorteners with tracking parameters. Suddenly you’ve got one user showing different content depending on what angle they entered from. It was maddening watching the logs and realizing we’d been segmenting the same person into two buckets.
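One way to take the entry angle out of the equation is to normalize the landing URL before it is used as a segmentation input. The sketch below is an assumption about how you might do that, not something any of these platforms ship. The TRACKING_PARAMS list and the normalizeLandingUrl name are hypothetical, and you would want to tune the list to your own stack.

```javascript
// Hypothetical list of click-ID parameters that carry tracking info but no user intent.
const TRACKING_PARAMS = new Set(["gclid", "fbclid", "mc_cid", "mc_eid"]);

function normalizeLandingUrl(rawUrl) {
  const url = new URL(rawUrl);
  // Snapshot the keys first: deleting while iterating searchParams can skip entries.
  const keys = [...url.searchParams.keys()];
  for (const key of keys) {
    const k = key.toLowerCase();
    if (k.startsWith("utm_") || TRACKING_PARAMS.has(k)) {
      url.searchParams.delete(key);
    }
  }
  return url.toString();
}
```

With this in place, an email click and a direct visit to the same page should produce the same key, so the same person has less chance of landing in two buckets.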

When A/B Testing in Personalization Engines Lies

One of the nastiest surprises I ran into with web personalization testing (this was on Adobe Target, but others aren’t angels either) was the trap of “auto-allocate” or “AI-powered” test groups. In practice, these features bias traffic — sometimes mid-test — to whatever variation performs better early on. Meaning: any chance of getting clean comparative data is gone. You’re not A/B testing at that point, you’re A/95%-bias-with-house-edge/B testing.

Even worse, some engines will change the weighting at runtime without flagging it at deployment. You have to dig through change logs or connect raw log data to sessions to even reconstruct what the weightings were hour-by-hour.
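If you do have raw assignment logs, reconstructing the split is mostly a group-by. Here is a small sketch, assuming each log row looks like { ts, variant } with ts in milliseconds since epoch. That row shape and the function name are my assumptions, not any vendor's export format.

```javascript
// Each row is assumed to look like { ts: <ms since epoch>, variant: "A" }.
function hourlyVariantShare(rows) {
  const byHour = new Map(); // "2024-01-01T10" -> { A: 3, B: 1 }
  for (const { ts, variant } of rows) {
    const hour = new Date(ts).toISOString().slice(0, 13); // UTC hour bucket
    const counts = byHour.get(hour) || {};
    counts[variant] = (counts[variant] || 0) + 1;
    byHour.set(hour, counts);
  }

  const result = {};
  for (const [hour, counts] of byHour) {
    const total = Object.values(counts).reduce((a, b) => a + b, 0);
    result[hour] = {};
    for (const [variant, n] of Object.entries(counts)) {
      result[hour][variant] = n / total; // observed share of traffic that hour
    }
  }
  return result;
}
```

If the share for one variant drifts away from the split you configured, the engine has been reweighting behind your back.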

The best workaround I’ve used: explicitly assign test groups and manage test bucketing outside the personalization engine. Use a URL param or cookie for group allocation, then trigger variants conditionally. Yes, that defeats the point of managed testing. But at least it doesn’t hallucinate results.
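A minimal sketch of that kind of external bucketing, assuming you have a stable user or visitor ID to hash. The FNV-1a hash here runs over UTF-16 code units rather than bytes, which is fine for a sketch, and assignBucket plus the variant names are hypothetical.

```javascript
// FNV-1a 32-bit hash: small and deterministic, good enough for a bucketing sketch.
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force unsigned 32-bit
}

// Same user + same experiment always lands in the same bucket.
function assignBucket(userId, experimentId, variants = ["control", "variant"]) {
  const n = fnv1a(`${experimentId}:${userId}`);
  // Scale into [0, variants.length) using the whole hash rather than just the low bits.
  const index = Math.floor((n / 0x100000000) * variants.length);
  return variants[index];
}
```

You would write the result to a cookie or URL param once, then have the engine render variants conditionally on it. Because the split is a pure function of the ID, nothing can quietly reweight it mid-test.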

Email Engines That Personalize Off the Wrong Field

Most personalization bugs in emails happen between 12 and 2 AM on launch night. One that still haunts me: using a dynamic block for “last-category-viewed” based on the user profile in Klaviyo. But — and I still can’t believe this — the profile was syncing from a Shopify field we didn’t fully validate, and in 8% of cases the last-category was actually a refund reason. So users got: “You might also like: ‘Did not match description.’” Mercifully, most people didn’t notice.

Turns out if you’re syncing custom fields through a third-party connector, double-check whether your field is typed consistently (some ESPs will interpret integers as strings or vice versa), whether it’s multiline by accident, and whether it’s being overwritten by low-trust events like support threads or feedback forms.
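Those three checks are cheap to run before a value ever reaches a dynamic block. The sketch below is hypothetical (KNOWN_CATEGORIES and validateLastCategory are illustrative names, and the allow-list would really come from your catalog), but it would have caught the refund-reason case.

```javascript
// Hypothetical allow-list; in practice this would be generated from the catalog.
const KNOWN_CATEGORIES = new Set(["sofas", "bedding", "lighting"]);

function validateLastCategory(value) {
  const problems = [];
  // Type check: connectors sometimes turn strings into numbers or vice versa.
  if (typeof value !== "string") {
    problems.push(`expected string, got ${typeof value}`);
    return { ok: false, problems };
  }
  // Multiline check: free-text fields (support threads, feedback) often contain newlines.
  if (/[\r\n]/.test(value)) problems.push("value is multiline");
  // Allow-list check: anything that is not a real category is suspect.
  const normalized = value.trim().toLowerCase();
  if (!KNOWN_CATEGORIES.has(normalized)) problems.push(`unknown category: ${normalized}`);
  return { ok: problems.length === 0, problems, value: normalized };
}
```

When ok is false, the safer move is to fall back to a generic block rather than render whatever string showed up.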

Also: if you’re sending dynamic images via OpenGraph-style parameters (like product shots), make sure the fallback asset isn’t a generic stock photo. Twice now, I’ve seen broken personalization drop in a 400×400 smiling developer photo that has absolutely nothing to do with ecommerce.

Five Personalization Bugs That Took an Hour Too Long to Find

- If your recommendation widget shows “inline” above the footer, and your site uses lazy-loaded placeholders, sometimes the DOM doesn’t trigger the renderer without a scroll event. So users never see adapted content, and the resulting attribution drops get blamed on “low-converting segments”.

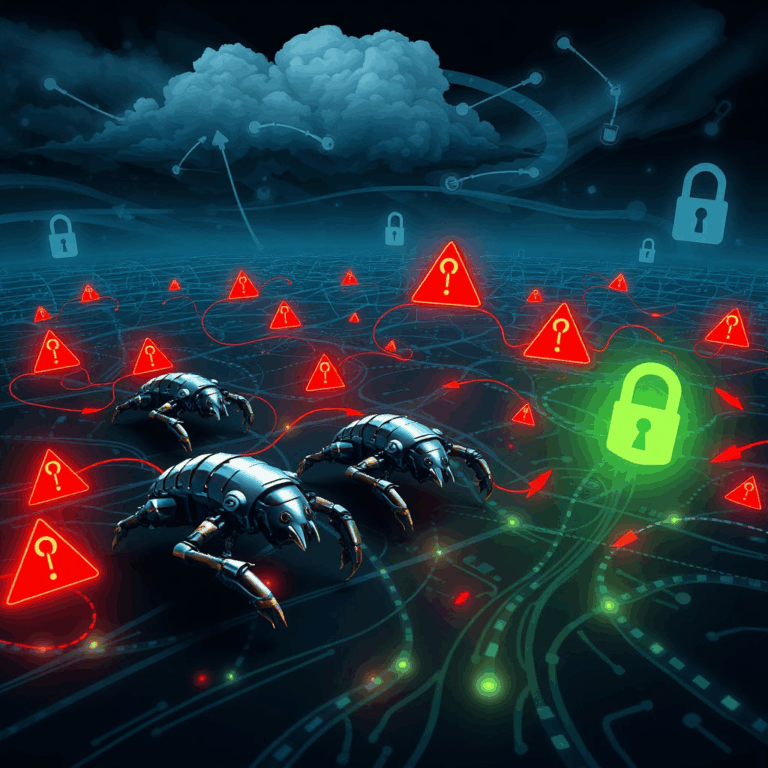

- On iOS Safari, third-party iframes targeting campaign logic have a nasty interaction with Intelligent Tracking Prevention. If your personalization key uses a first-party cookie set via JS in an iframe, there’s a decent chance it’ll disappear mid-session.

- Segment-based previews in email test tools (especially Iterable and Mailchimp) often cache the first value they see. So when QA is checking 10 variants, 6 of them look the same unless you hard-refresh every time.

- In some engines, regex matches for URLs in personalization don’t properly escape query parameters. A rogue equals sign will bork the entire rule. Test with wildcards like your life depends on it.

- If users bounce and return within 10 minutes, and your engine buffers session state by timestamp, your logic might treat return visits as first-timers. Cross-reference session lifespan configs against bounce thresholds, or you risk serving repeat visitors cold openers.
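On the regex bullet: when you control the rule string yourself, escaping it before compiling avoids most of this. This is a sketch under that assumption. The urlRule helper is hypothetical, and whether your engine lets you supply a pre-escaped pattern depends on the tool.

```javascript
// Escape every character that has special meaning in a regular expression.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Build a rule that matches the literal path and query, plus optional extra parameters.
function urlRule(pathAndQuery) {
  return new RegExp("^" + escapeRegExp(pathAndQuery) + "(&.*)?$");
}

// Without escaping, "?" makes the preceding "e" optional and "." matches any character,
// so a naive rule built from "/sale?ref=a.b" never matches the real URL.
const naive = new RegExp("^/sale?ref=a.b$");
const safe = urlRule("/sale?ref=a.b");
```

Here naive.test("/sale?ref=a.b") is false while safe.test("/sale?ref=a.b") is true, which is the kind of silent mismatch that eats an hour.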

The One Quote That Finally Made Sense of This Stuff

“Personalization engines aren’t personalization. They’re probabilistic guessers driven by normalized behavior subsets.”

I found that buried in an old Medium post by a former PM for an email personalization engine I won’t name. But it clicked hard. Most of these engines don’t know “who” the user is — they know what bucket the user shared characteristics with when your last data sync ran. That’s why it all feels brittle.

You wanna get closer to real personalization? Pipe the user’s prediction context (not just actions) into the system. What they’re likely to do now. And pin it to the live session, not some cold-profile blob updated at 2 AM.

One Line of JS That Helped Way More Than Expected

    window.userSeg = JSON.parse(localStorage.getItem('recentViewData') || '{}')

I shoved that into a preload script after realizing our CDN was stripping cookie data on some mobile subdomains. It let me stitch experience segments from localStorage alone and seed personalization variations based on what product the user last hovered.

None of that would’ve worked if we weren’t already caching interaction crumbs on the frontend. But since we were, I could bypass most of the unreliable profile merges the ESP kept screwing up. It’s not elegant, but it didn’t break, which is more than I can say for most middleware setups.
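One caveat with the one-liner: JSON.parse throws on malformed data, and localStorage access itself can throw in some privacy modes. A slightly more defensive version, written as a sketch, could look like this. The readSegment name is mine, and the storage object is passed in so the function can be exercised outside a browser.

```javascript
// Read the cached segment, falling back to {} on any failure.
function readSegment(storage, key = "recentViewData") {
  try {
    const parsed = JSON.parse(storage.getItem(key) || "{}");
    // JSON.parse can return null, arrays, or primitives; only accept plain objects.
    return parsed && typeof parsed === "object" && !Array.isArray(parsed) ? parsed : {};
  } catch (err) {
    // Malformed JSON or blocked storage access: start from an empty segment.
    return {};
  }
}

// In the browser, the equivalent of the original line would be:
//   window.userSeg = readSegment(window.localStorage);
```

It is a few more lines than the original, but a corrupted entry no longer takes the preload script down with it.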

Personalization Engines Still Don’t Respect Route Changes

If your site runs a single-page app (SPA) with something like React Router or Next.js’s client-side routing, then out of the box, most web personalization engines don’t fire on route change events. Why does that matter? Because unless you manually re-trigger the personalization lifecycle, your visitor will browse five different pages and still see homepage content.

We missed this for months on a custom-built furniture site. Hundreds of sessions were getting bundled under the wrong impressions, and the engine was adapting dynamically — but only to the first page load. Segment had documentation about this, but some other tools (like MoEngage or Exponea) made you infer the need to hook into pushState events yourself.

The fix was simple once we knew what to look for: hook into the router and fire a synthetic URL change event that re-processes user context. It sounds obvious after you spend three hours wondering why your checkout offers were still showing bedding recommendations.
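If your router doesn’t expose a navigation hook, wrapping the History API directly is a common fallback. The sketch below shows that approach, not the exact fix we shipped. watchRouteChanges is a hypothetical name, and whatever you call inside onChange to re-run the engine is tool-specific.

```javascript
// Wrap pushState/replaceState so every client-side navigation notifies onChange.
function watchRouteChanges(historyObj, onChange) {
  for (const method of ["pushState", "replaceState"]) {
    const original = historyObj[method];
    historyObj[method] = function (...args) {
      const result = original.apply(this, args);
      onChange(args[2]); // third argument is the new URL, if one was given
      return result;
    };
  }
}

// In the browser (reprocessPersonalization is a placeholder for your engine's re-run call):
//   watchRouteChanges(window.history, (url) => reprocessPersonalization(url));
//   window.addEventListener("popstate", () => reprocessPersonalization(location.href));
```

The popstate listener matters because back and forward navigation fires popstate, while pushState and replaceState fire nothing on their own.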