How I Untangled AdSense Invalid Traffic Flags Without Losing My Mind

AdSense Says My Site Has Invalid Traffic — Now What?

The first time I got the “limited ad serving” warning, I assumed I’d been hacked. Or shadowbanned. Or that some bored teenager with a click bot was bouncing off my ad units like a racquetball. Nope. Turns out I’d just been bouncing off myself, basically.

If you’re seeing things like low CPC with high pageviews, or absurdly high CTR on low-quality pages, check your own browsing habits. I was my #1 visitor, thanks to obsessively reloading blog drafts to check layout, spacing, and whether that sticky nav sucked less. That alone can trigger Google’s auto-flagger if you engage with pages that load ads. Chrome’s DevTools “Disable cache” setting is wonderful, but it doesn’t stop those reloads (or any stray clicks) from being logged by AdSense. And yes, they see everything.
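One way to keep your own reloads out of the numbers is to skip the ad request whenever a flag you set in your own browser is present. This is a minimal sketch, not an AdSense feature: the `skipAds` key and the `shouldRequestAds` helper are names made up for illustration, and it only covers manually placed units that rely on the standard push call.

```javascript
// Hypothetical helper: decide whether this visit should request ads.
// `storage` is anything with getItem(), for example window.localStorage.
// The key name "skipAds" is an assumption for this sketch, not part of AdSense.
function shouldRequestAds(storage) {
  try {
    return storage.getItem("skipAds") !== "1";
  } catch (err) {
    // Storage can throw in some privacy modes; fall back to serving ads.
    return true;
  }
}

// Browser usage (skipped automatically where there is no window object).
if (typeof window !== "undefined" && shouldRequestAds(window.localStorage)) {
  (window.adsbygoogle = window.adsbygoogle || []).push({});
}
```

To opt your own browser out, run `localStorage.setItem("skipAds", "1")` once in the DevTools console on your site.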

The invalid traffic policy runs on maybes and soft rules: actual enforcement often depends more on traffic patterns than on definitive proof. Meaning: you might get flagged for being “suspicious” even if you didn’t break anything outright. A myth I clung to for way too long: “If no one’s click-spamming, I’m good.” Nope.

That Time Cloudflare Almost Made It Worse

Enabling Cloudflare’s Bot Fight Mode seemed logical — more protection = good, right? Turns out, aggressively filtering bots at the edge can result in a weird side effect: inconsistent ad impressions. In my case, AdSense started reporting wildly fluctuating RPMs. Like day-to-day swings of hundreds of percent. I spent three days trying to convince myself it was seasonal variance (in April?).

After disabling Bot Fight Mode, performance normalized in two days. I suspect the issue is tied to cached resources + user-agent overrides that trip up AdSense’s crawler or impression tracking. What’s goofy is Cloudflare doesn’t expose detailed logs for non-enterprise accounts, so it’s really just inference unless you’re packet sniffing directly.

Lesson: if AdSense performance gets weird and you’re running any CDN with security rules that touch headers, disable one thing at a time and wait 48 hours per change. AdSense’s feedback loop is about as fast as a fax sent through pudding.

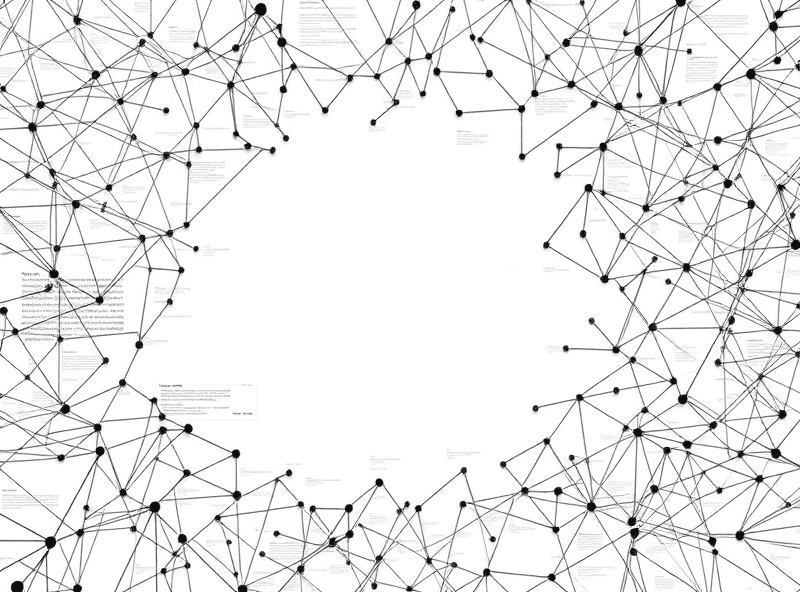

Matching Analytics Traffic to AdSense Reality

This is honestly where the brain damage starts.

Google Analytics (GA4 especially) and AdSense speak different dialects. GA might show 10k sessions, and AdSense will report 6k impressions. It’s not a bug — Avinash Kaushik even alluded to this in his old posts — they count separate things. GA tracks users who load the JS successfully. AdSense counts ad slots rendered from eligible inventory.

Also: blocked 3rd-party cookies, ad blockers, and prerendered AMP views can cause even more divergence. I’ve seen pages with high organic traffic on mobile returning nearly zero matching AdSense impressions. As far as I can tell, that’s because Safari (especially with Intelligent Tracking Prevention in full force) doesn’t seem to let ads load properly unless users interact with the page first.
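To find the pages where the two reports drift furthest apart, it helps to line the numbers up per page. The sketch below assumes you have merged the two exports by hand into rows with made-up field names (`gaPageviews`, `adsensePageViews`); the sample figures are invented purely to show the shape of the output.

```javascript
// Rows are assumed to be hand-merged from a GA export and an AdSense export.
// Field names and sample values are hypothetical.
function adCoverage(rows) {
  return rows
    .filter((row) => row.gaPageviews > 0) // avoid dividing by zero
    .map((row) => ({
      page: row.page,
      coverage: row.adsensePageViews / row.gaPageviews,
    }))
    .sort((a, b) => a.coverage - b.coverage); // worst coverage first
}

const sample = [
  { page: "/guide", gaPageviews: 1000, adsensePageViews: 600 },
  { page: "/mobile-tips", gaPageviews: 800, adsensePageViews: 40 },
];
console.log(adCoverage(sample));
```

Pages at the top of that list are the ones worth checking on a real phone first.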

Aha moment: I added a delay of 1 second before ad units render using a simple setTimeout:

    // Wait 1 second before asking AdSense to fill the slot.
    setTimeout(function() {
      (adsbygoogle = window.adsbygoogle || []).push({});
    }, 1000);

This actually decreased CTR (since impatient users bounce), but improved RPM slightly by filtering for higher-intent traffic. It’s like adding a bump in the sidewalk to test who really wants to walk this path.

Darwinian Debugging with Auto Ads

Auto Ads are one of those ideas that feels like magic until you realize there’s an entire mystery box attached. I had a site that tanked after enabling them. Turns out, the algorithm picked spots that straight-up broke layout. Picture an ad block rendering BETWEEN a list item and its bullet. Not even visually — in the DOM.

You can override via data-ad-format and fiddle with data-full-width-responsive, but the placement logic lives on Google’s side and changes over time. One day it might run 4 units, next day it runs 1. DevTools won’t help — the logic runs post-load and often uses delayed JS events that are fully obfuscated.

Pro tip: Auto Ads respect responsive meta tags, but only when combined with clearly containerized HTML. Ball of yarn layout using floats or absolute positioning? You’re basically throwing darts in the fog and hoping you don’t miss your own face.

That One Bot From France

I watched a burst of traffic from France hit one of my less-trafficked pages. The kicker? CTR jumped to triple digits. AdSense immediately revoked revenue for that day — claw-back in action. No email, no summary. Just a subtraction and a “why bother asking?” tone.

I started logging IPs (via a separate backend log, not GA) and found a rogue source repeatedly loading and clicking one specific ad type — text only, top banner, non-responsive. Why? Probably testing scraper reactions on auction responses.
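For anyone wanting to do the same kind of digging, here is a rough sketch of scanning a backend log for IPs that hammer one page. The entry shape (`ip`, `path`, `ts` in epoch milliseconds) and the thresholds are assumptions for illustration; a real log will need its own parsing.

```javascript
// Flags IPs that hit the same path more than maxHits times within windowMs.
// Entry shape { ip, path, ts } and both thresholds are assumptions for this sketch.
function flagNoisyIps(entries, { windowMs = 60000, maxHits = 20 } = {}) {
  const byKey = new Map();
  for (const { ip, path, ts } of entries) {
    const key = `${ip} ${path}`;
    if (!byKey.has(key)) byKey.set(key, []);
    byKey.get(key).push(ts);
  }

  const flagged = new Set();
  for (const [key, stamps] of byKey) {
    stamps.sort((a, b) => a - b);
    let start = 0;
    for (let end = 0; end < stamps.length; end++) {
      // Slide the window start forward until it spans at most windowMs.
      while (stamps[end] - stamps[start] > windowMs) start++;
      if (end - start + 1 > maxHits) {
        flagged.add(key.split(" ")[0]); // recover the IP part of the key
        break;
      }
    }
  }
  return [...flagged];
}
```

This only tells you who was noisy after the fact. It does nothing to stop them.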

Behavioral bug here: Google doesn’t give site owners per-click logs, even anonymized. All you get is aggregate, which makes reaction time laughable. Meanwhile, their invalid traffic detection seems to happen *after* you’ve paid the bandwidth cost.

Since then I added cloud-based WAF rules to rate limit high-frequency IPs by impression timestamp — super hacky, but better than doing nothing. You can’t stop all bad traffic, but at least you can annoy them into choosing someone else.
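The actual rules live in the WAF, so what follows is not that configuration. It is a sketch of the same idea in application code: a fixed-window counter per IP, with limits that are illustrative assumptions rather than recommendations.

```javascript
// Fixed-window request counter keyed by IP. Limits are illustrative assumptions.
class IpRateLimiter {
  constructor(limit = 30, windowMs = 60000) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.buckets = new Map(); // ip -> { windowStart, count }
  }

  // Returns true if the request should be served, false if it should be throttled.
  allow(ip, now = Date.now()) {
    const bucket = this.buckets.get(ip);
    if (!bucket || now - bucket.windowStart >= this.windowMs) {
      // First request from this IP, or the previous window has expired.
      this.buckets.set(ip, { windowStart: now, count: 1 });
      return true;
    }
    bucket.count += 1;
    return bucket.count <= this.limit;
  }
}
```

Note that this version never prunes old buckets, so a long-running process would want a periodic cleanup.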

Do Not Trust Page RPM Without Context

You ever filtered by Page RPM and thought, “Wow, this page is printing money, let’s build five more like it?” Only to later realize those five clones tanked everything else?

Been there. What I missed was that RPM is a performance average — not an exact dollar value from that page. If one user clicked a high-value ad because of genuine interest (and miraculously wasn’t flagged), that’s a spike. RPM will balloon. But that doesn’t mean the layout, content, or keyword was the gold mine — it was the user. Replication fails because AdSense is personalized per user cookie, not per page topic.
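The arithmetic makes the trap obvious. Page RPM is estimated earnings divided by page views, times 1000, so on a low-traffic page a single click can swamp everything else. The numbers below are invented for illustration only.

```javascript
// Page RPM: estimated earnings per 1000 page views.
function pageRpm(earnings, pageViews) {
  if (pageViews <= 0) return 0;
  return (earnings / pageViews) * 1000;
}

// Invented numbers: 200 views earning $0.40 in total...
const baseline = pageRpm(0.4, 200);
// ...versus the same 200 views plus one $3.00 click.
const withOneClick = pageRpm(0.4 + 3.0, 200);
console.log(baseline.toFixed(2), withOneClick.toFixed(2));
```

With those made-up inputs the RPM goes from about $2 to about $17 on the strength of one visitor, which says nothing about what five cloned pages would earn.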

Also: when ads render on low-scroll pages, visibility scores drop. That’s a huge part of RPM that nobody explains upfront. Unless you force viewport anchoring or lazy-load content below-the-fold, performance can crater no matter how good the title is.

7 Errors I Still See Devs Make With AdSense

- Copy-pasting ad units across pages without updating slot IDs

- Using one AdSense account across unrelated sites with totally different niches

- Allowing dynamic DOM frameworks (like React SPA routers) to render pages before AdSense knows the URL changed

- Placing more than one ad slot above-the-fold, which can be a TOS violation depending on layout density

- Embedding ad units in iframes from a different domain (hard fail, always)

- Assuming AdSense fills new units by itself after AJAX navigation (it doesn’t; you have to request ads for the newly rendered slots yourself)

- Neglecting to set crawlable robots.txt paths for secondary pages where ads are allowed — AdSense can’t serve if it can’t see

Every one of these has cost me money, and sleep.
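For the SPA and AJAX items in that list, one possible approach is to request ads only for slots that have not been processed yet, once a route change has finished rendering. This is a sketch under a loud assumption: that processed `<ins>` elements carry a `data-adsbygoogle-status` attribute, which is observed behavior of the ad script rather than something to rely on as a contract. The helper name is hypothetical.

```javascript
// After a client-side route change, push one ad request per unprocessed slot.
// Assumption: adsbygoogle.js marks processed <ins> elements with
// data-adsbygoogle-status. Verify this in your own pages before relying on it.
function requestAdsForNewSlots(root, queue) {
  const slots = root.querySelectorAll(
    "ins.adsbygoogle:not([data-adsbygoogle-status])"
  );
  let requested = 0;
  for (let i = 0; i < slots.length; i++) {
    queue.push({}); // same call as the standard snippet, once per new slot
    requested += 1;
  }
  return requested;
}

// Browser usage, called from whatever "navigation finished" hook your router has:
// window.adsbygoogle = window.adsbygoogle || [];
// requestAdsForNewSlots(document, window.adsbygoogle);
```

This asks for ads in newly rendered slots only. It does not reload units that are already filled.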

The Silent Rejection: Ads Not Showing, No Warnings

This one’s the worst. You set up a new page. It loads fine. The ad code’s there. But nothing shows. No “limited ad serving”, no policy reminder. Just blank space where your dreams go to die.

Eventually I realized Google occasionally blacklists content silently — either using Trust & Safety auto-filters or because the crawler couldn’t categorize the content at all. If the surrounding text includes certain banned combinations (even in footers), some ad categories won’t render. In one case, a casual political joke in a comment box triggered a block.

And there is, frustratingly, no way to know without replacing ad code with test units from their publisher tools — which I honestly forgot even existed. Go to your AdSense dashboard, make a new unit, and use the “preview” code with diagnostics turned on. That’s the only way I confirmed the page wasn’t serving due to content classification.