How I Decide Which Low-Performing Pages to Nuke

The difference between dead content and sleeping content

So this time last year I almost deleted a 2,500-word post about WebP image optimization that made me no money, had zero backlinks, and according to GA4, averaged maybe 2 visitors a week (probably both bots). But every time I hovered over that delete button, something told me to pause. Two months later, after I added one internal link from a newer post, traffic spiked. Just a blip, but enough to make me feel slightly less like a clown.

This is the hard part of content pruning: figuring out what’s really dead versus what just never got promoted properly. A low-traffic page doesn’t always mean it’s useless. If it’s tightly scoped, internally linkable, and has unique info—even if no one’s reading it now—I sometimes leave it as a sleeper.

Here’s a sniff test I run now:

- Does the post actually answer a niche question I still believe in?

- Is it over a year old with zero interaction across GA4, GSC, or AdSense?

- Has its indexing status in GSC been “Discovered – currently not indexed” for months without budging?

- Is it being cannibalized by similar-performing content?

- Can I turn it into a subheading in a more solid post?

I keep a Notion board with color tags for ‘to rework’, ‘to nuke’, ‘merged into X’, and ‘hold for later’. No automation. Just vibes and data.
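If you ever want the sniff test to be a little less vibes and a little more repeatable, here is a minimal sketch. Everything in it is an assumption for illustration: the `PageSignals` fields are a hypothetical rollup you would fill in by hand from GA4, GSC, and AdSense, the three-month "stuck" threshold is made up, and the return values just mirror the board tags above.

```python
from dataclasses import dataclass


@dataclass
class PageSignals:
    answers_niche_question: bool        # do I still believe in the question it answers?
    age_days: int
    interactions_last_year: int         # hypothetical combined count from GA4, GSC, AdSense
    months_discovered_not_indexed: int  # how long GSC has shown that status
    cannibalized: bool                  # a similar post competes for the same queries
    mergeable: bool                     # could live on as a subheading elsewhere


def triage(p: PageSignals) -> str:
    """Map the sniff-test answers to one of the board tags."""
    dead = p.age_days > 365 and p.interactions_last_year == 0
    stuck = p.months_discovered_not_indexed >= 3  # assumed meaning of "months"
    if p.mergeable and (p.cannibalized or dead):
        return "merged into X"
    if p.answers_niche_question:
        # Sleepers get kept; dead-but-worthwhile posts get reworked.
        return "to rework" if (dead or stuck) else "hold for later"
    if dead or stuck:
        return "to nuke"
    return "hold for later"


if __name__ == "__main__":
    webp_post = PageSignals(True, 400, 104, 0, False, False)
    print(triage(webp_post))
```

The ordering matters more than the thresholds: merging is checked first so nothing salvageable gets nuked, and a post that still answers a question I believe in never lands in the "to nuke" pile automatically.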

Pruning pages without accidentally tanking your topic cluster

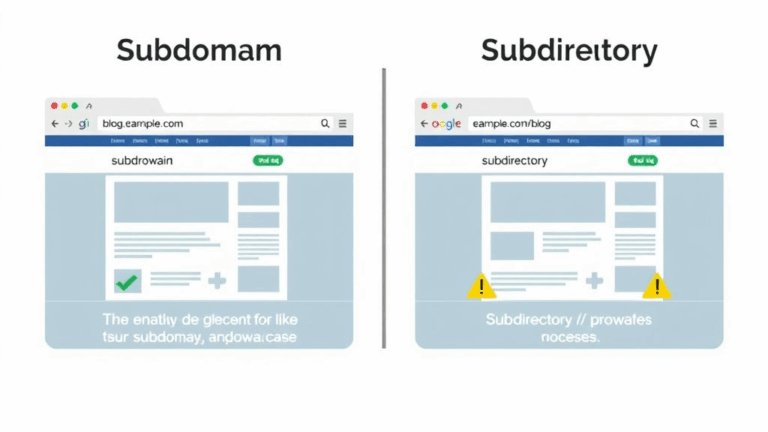

Yeah, this one burned me once. I deleted three low-CTR pages that seemed like filler in a broader set of posts about AdSense site eligibility. Except those “filler” posts were the only internal links pointing to a post where I explained crawl budget issues with subdomains on Cloudflare, so deleting them left that post orphaned. Boom, rankings tanked. I had to dig out an old site backup and Frankenstein parts of the deleted content back into a new post so Google could pick it back up again.

If you care about topical clusters—and you should—do a full internal link graph audit before deleting anything. Stuff that’s not ranking might still be passing context. Broken chains mean Google loses some of your intent story.

Quick check:

- Use Screaming Frog or Sitebulb to export internal link counts

- Flag pages with fewer than 3 inbound internal links

- Map content themes visually (I use Whimsical because I’m a sucker for color-coded chaos)

And don’t rely on Search Console’s UI. The referring pages aren’t exhaustive at all—just the ones Google feels like showing you.
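The quick check above can be sketched in a few lines once you have a crawler export. This is a rough sketch, not the tool's own workflow: it assumes the export is a CSV edge list with `Source` and `Destination` columns (adjust the column names to whatever your crawler actually writes), and the sample data is invented.

```python
import csv
import io
from collections import defaultdict


def inbound_link_counts(csv_text, source_col="Source", dest_col="Destination"):
    """Count distinct internal pages linking to each destination URL."""
    sources = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        src, dst = row[source_col], row[dest_col]
        if src != dst:  # a page linking to itself does not count
            sources[dst].add(src)
    return {url: len(srcs) for url, srcs in sources.items()}


def weakly_linked(counts, all_pages, minimum=3):
    """Pages with fewer than `minimum` inbound internal links, including zero."""
    return sorted(p for p in all_pages if counts.get(p, 0) < minimum)


# Invented sample export, for illustration only.
SAMPLE = """Source,Destination
/a,/hub
/b,/hub
/c,/hub
/hub,/a
/a,/b
/a,/a
"""

if __name__ == "__main__":
    counts = inbound_link_counts(SAMPLE)
    print(weakly_linked(counts, ["/a", "/b", "/c", "/hub"]))
```

Passing the full page list separately is deliberate: a page with zero inbound links never shows up as a destination in the export, and those are exactly the ones you want flagged.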

AdSense RPM should not be your only deletion metric

This is where it gets tricky. I used to query my old WordPress database for RPM thresholds. Anything under a certain number was up for deletion or noindex. But that formula breaks the second you hit a niche with latent demand, where CPC spiked last month but traffic didn’t yet. RPM’s a lagging metric—it tells you how a post has performed, not how it could perform.

Real quick example: I had a how-to on sandbox domain policies for AdSense approval that made under five bucks all year. Just for fun, I embedded a demo code snippet and added structured data to the post. About a month later, it showed up as a featured snippet for a weirdly phrased query, and my RPM tripled.

Now, I still use RPM ranges to prioritize updates. But I warn you: don’t nuke purely on short-term RPM unless you’re running massive content ops and just physically can’t go manual. For individual site owners or micro-teams, it’s too blunt a weapon.
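For reference, page RPM is just earnings divided by pageviews, times a thousand. Here is a minimal sketch of using it to order an update queue rather than a kill list. The bucket thresholds (2 and 10) are placeholders I made up, and the input tuples are a hypothetical export, not a real AdSense report format.

```python
def page_rpm(earnings: float, pageviews: int) -> float:
    """Revenue per thousand pageviews; 0.0 when there is no traffic to divide by."""
    if pageviews <= 0:
        return 0.0
    return earnings * 1000 / pageviews


def rpm_bucket(rpm: float, low: float = 2.0, high: float = 10.0) -> str:
    """Coarse range label. Thresholds are illustrative, not recommendations."""
    if rpm < low:
        return "low"
    if rpm < high:
        return "mid"
    return "high"


def update_queue(pages):
    """pages: iterable of (url, earnings, pageviews). Lowest RPM first.

    Nothing is deleted here; the output is only an order to review pages in.
    """
    rows = [(url, page_rpm(earnings, views)) for url, earnings, views in pages]
    rows.sort(key=lambda r: r[1])
    return [(url, round(rpm, 2), rpm_bucket(rpm)) for url, rpm in rows]


if __name__ == "__main__":
    for row in update_queue([("/a", 5.0, 1000), ("/b", 0.5, 1000), ("/c", 30.0, 2000)]):
        print(row)
```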

Undocumented edge: removing a page can demote others

Here’s what nobody tells you: pruning a page that links uniquely to something else can demote that target page even if it’s doing fine. There’s this semi-undocumented effect I’ve tested twice now—both times after deleting thin glossary-type pages. I tracked crawl stats and GSC position deltas. The quick summary: Google started crawling the linked-to article less in the weeks after the source was deleted. Not deindexed, just deprioritized.

My theory? Internal link removal acts like a drop in freshness weighting—even if the main page wasn’t changed. Without new entry points or crawl signals, Google just eases off. And if that linked page had weak backlinks, it drifted lower in SERPs despite still being solid, content-wise.

I tried this again with one additional link staying live (from a different post), and the demotion didn’t happen. Signs point to: internal variety matters more than we think.
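Before deleting a batch, it is cheap to check which surviving pages would lose their last internal link. A minimal sketch, assuming you already have the link graph as a dict of source URL to target URLs (the function name and the sample URLs are hypothetical):

```python
def targets_at_risk(links, to_delete):
    """Surviving pages that would have zero inbound internal links after deletion.

    links: dict mapping source URL -> iterable of target URLs.
    to_delete: URLs you plan to remove.
    """
    doomed = set(to_delete)
    before, after = {}, {}
    for src, targets in links.items():
        for dst in targets:
            if dst == src or dst in doomed:
                continue  # skip self-links and pages that are going away anyway
            before[dst] = before.get(dst, 0) + 1
            if src not in doomed:
                after[dst] = after.get(dst, 0) + 1
    # Only pages that had links before and have none left afterwards.
    return sorted(p for p in before if after.get(p, 0) == 0)


if __name__ == "__main__":
    graph = {
        "/glossary/crawl-budget": ["/guide"],
        "/glossary/x": ["/guide2"],
        "/other": ["/guide2"],
    }
    print(targets_at_risk(graph, ["/glossary/crawl-budget", "/glossary/x"]))
```

In the sample, `/guide` is flagged because its only linker is on the delete list, while `/guide2` survives thanks to the one extra link from `/other`, which is the same pattern as the second test described above.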

Soft 404s can tank related URLs when you remove too aggressively

I got a taste of this the hard way. After I pruned about 40 pages that were thin or stale, GSC reported a spike in “Soft 404” status for URLs I’d actually redirected properly. It got stranger: a bunch of those posts shared slug roots with pages that were still live. Example: I removed /google-adsense-policy-update, it got reported as a soft 404, and then, bizarrely, /google-adsense-policy started dropping in impressions even though I hadn’t touched it.

I suspect Google bucketed them together under some similar keyword family, and the loss of one made the other seem less valuable. So now I avoid deleting closely related variants unless I can merge or redirect them somewhere useful.

Also: make sure your redirects don’t land on irrelevant glue pages like contact forms or generic “archived” templates. That’s one fast way to make Google ignore your redirect entirely.

Common mistakes when noindexing instead of deleting

Sometimes you feel smart slapping a noindex tag on a page instead of deleting it, just in case you need the content again. But Google doesn’t always reindex the page after you remove the noindex later. I thought it would be as simple as resubmitting in URL Inspection. Nope. The page goes into this weird limbo where it’s flagged as noindex in GSC’s coverage report but doesn’t refresh unless another major signal (like new backlinks or a content edit) resets it.

Best case? If you’re marking a set of pages noindex, also remove them from sitemaps temporarily. Then when you’re ready to restore, reverse the noindex and re-add to sitemap. That combo seems to make fetch happen again.

Also, double-check for plugin conflicts. I once had two conflicting SEO plugins—Rank Math and an old meta tag tool—both trying to set indexability at the same time. Caused a batch of pages to stay noindex even after I changed them. View rendered HTML, not just your source.
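To catch the two-plugins problem, count the robots meta tags in the HTML the page actually serves. A minimal sketch using only the standard library; it takes an HTML string however you obtained it (saving the rendered DOM from your browser is one option), and it does not look at the `X-Robots-Tag` HTTP header, which can also set noindex.

```python
from html.parser import HTMLParser


class RobotsMetaCollector(HTMLParser):
    """Collects the directives from every robots/googlebot meta tag."""

    def __init__(self):
        super().__init__()
        self.directives = []  # one list of directives per tag found

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        a = {k.lower(): (v or "") for k, v in attrs}
        if a.get("name", "").lower() in ("robots", "googlebot"):
            parts = [d.strip().lower() for d in a.get("content", "").split(",")]
            self.directives.append([d for d in parts if d])


def robots_report(html: str) -> dict:
    collector = RobotsMetaCollector()
    collector.feed(html)
    flat = [d for tag in collector.directives for d in tag]
    blocked = "noindex" in flat or "none" in flat  # "none" implies noindex
    return {
        "tags": len(collector.directives),
        "noindex": blocked,
        "conflict": blocked and "index" in flat,  # two tools disagreeing
    }


if __name__ == "__main__":
    page = (
        '<html><head><meta name="robots" content="index, follow">'
        '<meta name="robots" content="noindex"/></head><body></body></html>'
    )
    print(robots_report(page))
```

More than one tag, or `conflict` being true, is the smell to look for. As far as I know, when directives conflict Google applies the more restrictive one, which would explain pages staying noindex after one plugin was switched back.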

The weirdly useful signal of internal link decay

I was using Link Whisper (not an ad, just a tool I use when I forget where I put things) to flag broken and dwindling internal links. Turns out, the rate at which a page loses internal links is a better signal for pruning than traffic drop alone. If a page had 15 internal links a year ago, and now it has 3—because related content’s been pruned or updated—it probably isn’t holding its own anymore.

Internal link decay feels like a proxy for content aging, context rot, or even author memory. Google might not see it directly, but it plays into crawl logic. Combine that with GSC coverage status changes (like slipping from “Crawled – currently not indexed” back to “Discovered – currently not indexed”), and it’s time to yank it or rewrite.
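Link decay is simple to compute if you keep inbound link counts from two crawls. A minimal sketch, assuming two dicts of URL to inbound internal link count (say, one from a year ago and one from today); the 50% threshold is an arbitrary starting point, not a tested cutoff.

```python
def link_decay(before: int, now: int) -> float:
    """Fraction of inbound internal links lost between two crawls.

    Returns 0.0 when the page gained links or had none to begin with.
    """
    if before <= 0:
        return 0.0
    return max(0.0, (before - now) / before)


def decaying_pages(old_counts, new_counts, threshold=0.5):
    """Pages that lost at least `threshold` of their links, worst first."""
    scored = (
        (url, link_decay(count, new_counts.get(url, 0)))
        for url, count in old_counts.items()
    )
    return sorted(
        (s for s in scored if s[1] >= threshold),
        key=lambda s: s[1],
        reverse=True,
    )


if __name__ == "__main__":
    old = {"/a": 15, "/b": 10, "/c": 4}
    new = {"/a": 3, "/b": 9}  # /c is missing from the new crawl entirely
    print(decaying_pages(old, new))
```

The 15-to-3 case from above comes out as a decay of 0.8, meaning the page lost 80% of its internal links.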

“If your sitemap still references a URL you mentally abandoned 8 months ago, the algorithm didn’t forget—it just got confused.”

Redirect first, delete later—but not always

The rule of thumb I adopted: if a URL has any backlinks, even if it’s ten years old and irrelevant, don’t delete without a redirect. But if the page really has no juice and no relevance, a hard 404 can sometimes be cleaner. Why? Because repeatedly redirecting garbage to generic pages sends weird quality signals, and over time, that dilution pattern starts affecting index trust.
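Written down as code, the rule of thumb is tiny. This is my reading of it, not a standard: the function name is hypothetical, and the "backlinks but nowhere relevant to send them" case is an assumption on my part, handled by refusing to decide rather than redirecting to a generic page.

```python
from typing import Optional, Tuple


def removal_plan(
    backlinks: int, relevant_target: Optional[str]
) -> Tuple[Optional[int], Optional[str]]:
    """Return (HTTP status, redirect target) for a page being removed.

    A status of None means: do not remove yet, find a relevant target first.
    """
    if relevant_target:
        return 301, relevant_target  # redirect somewhere genuinely related
    if backlinks > 0:
        return None, None            # has link equity but no sensible home yet
    return 404, None                 # no juice, no relevance: let it end cleanly


if __name__ == "__main__":
    print(removal_plan(4, "/adsense-policy-guide"))
    print(removal_plan(4, None))
    print(removal_plan(0, None))
```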

I asked a colleague who used to work in structured data at a Big Tech org, and his take was: “Redirect loops introduce uncertainty. Google’s fine with 404s for stale URLs—it just wants finality.” Take that for what you will.