Fixing SEO Freefalls Without Nuking Your Site Structure

404s, Broken Redirects, and a Client’s Birthday Cake

About two years ago, I got a panicked message from a client while I was carrying a grocery-store birthday cake to my car. Their brand-new site had tanked from Google’s page one to “maybe page seven if you try really hard.” Turns out their dev team had launched a fancypants redesign and forgot to redirect like… 300+ high-performing URLs.

You can absolutely survive this kind of SEO gut punch, but only if you fix the old URL mapping right. Redirect chains count, yes. But even more deadly are broken internal links pointing to URLs that now throw 404s or redirect two or three hops deep. Googlebot gives up somewhere in that mess, and your index drops like a rock.

“Just 301 everything to the homepage and call it good.” — Dev who caused the SEO fire.

Try not to do that. Use Screaming Frog or Sitebulb to crawl your old site version (hopefully you archived one or cached it…), export URLs above a certain traffic threshold (from Google Analytics, if you still have access), and match them to the new structure. Manual work, yes. But salvaging old URLs will bring rankings back faster than anything else.
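Once you have a draft mapping, it helps to sanity-check it before it ships. Here is a minimal sketch in Python, assuming your redirect rules fit in a plain dict of old path to new path and you have a set of paths that actually exist on the new site. The function names and sample paths are made up for illustration; this is not how any particular crawler does it.

```python
def resolve_redirects(redirects, start, max_hops=10):
    """Follow `start` through the redirect map and return (final_url, hops).

    Raises ValueError on a loop or when max_hops is exceeded.
    """
    seen = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in seen:
            raise ValueError(f"redirect loop: {' -> '.join(seen + [current])}")
        seen.append(current)
        if len(seen) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops from {start}")
    return current, len(seen) - 1


def audit_redirect_map(redirects, live_urls):
    """Label each old URL as 'ok', 'chain', 'broken' (dead target), or 'loop'."""
    report = {}
    for old in redirects:
        try:
            final, hops = resolve_redirects(redirects, old)
        except ValueError:
            report[old] = "loop"
            continue
        if final not in live_urls:
            report[old] = "broken"
        elif hops > 1:
            report[old] = "chain"
        else:
            report[old] = "ok"
    return report


# Hypothetical sample data, not from a real site.
redirects = {
    "/old-shoes": "/shoes",
    "/shoes-2019": "/old-shoes",   # two hops to reach /shoes
    "/about-us": "/company",       # /company does not exist on the new site
    "/a": "/b",
    "/b": "/a",                    # loop
}
live_urls = {"/", "/shoes"}

for old, verdict in audit_redirect_map(redirects, live_urls).items():
    print(f"{old}: {verdict}")
```

Anything labeled chain should be rewritten to point straight at its final destination, and anything labeled broken or loop needs a human decision.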

Canonical Tags That Say “Don’t Index Me, Bro”

This one’s criminally easy to miss in CMS-heavy setups. Especially with ecommerce platforms or anything headless where your devs made custom templates for product variants. You load up a page in Chrome, view source, and boom:

<link rel="canonical" href="https://example.com/" />

Yeah, that’s not the product page you wanted indexed. I literally triple face-palmed when I realized a template misinheritance in Shopify had global canons pointing home across an entire product catalog. Took us a month to recover, and they were livid.

Things to check if your canonical tags are working against you:

- Don’t assume your canonical tags are the same in the raw HTML and the rendered DOM. Compare view-source against the Elements panel in your browser’s DevTools, or run Lighthouse in headless Chrome

- App integrations (like reviews engines or AMP plugins) sometimes overwrite your canons at runtime

- Localized URLs (/us/, /uk/) often get canonicalized to the international homepage unless hreflang is meticulously handled

Sometimes you just have to grep your templates and hand-audit five or ten product URLs cloned from the same layout. That’s how we found a rogue logic branch that was adding a malformed canonical to blog posts published on weekends. Don’t ask.
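If you would rather not eyeball view-source on every one of those URLs, the hand audit can be partly scripted. The sketch below uses only Python's standard library to pull the canonical out of a chunk of HTML and compare it with the URL you expected. It assumes you already have the HTML as a string (raw or rendered, however you fetched it), and `check_canonical` and the sample product URL are names I made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> in the document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if "canonical" in rel:
            self.canonicals.append(attr.get("href") or "")


def normalize(url):
    """Lowercase scheme and host, drop query/fragment and any trailing slash."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{path}"


def check_canonical(page_url, html):
    """Return 'missing', 'multiple', 'self', or 'points elsewhere: <href>'."""
    finder = CanonicalFinder()
    finder.feed(html)
    found = finder.canonicals
    if not found:
        return "missing"
    if len(found) > 1:
        return "multiple"
    if normalize(found[0]) == normalize(page_url):
        return "self"
    return f"points elsewhere: {found[0]}"


html = '<html><head><link rel="canonical" href="https://example.com/" /></head></html>'
print(check_canonical("https://example.com/products/blue-widget", html))
```

Run it against both the raw response and the rendered DOM for the same URL. If the two verdicts differ, something is rewriting the tag at runtime.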

Meta Robots Tags That Quietly Block Everything

This bears repeating because it happens in every single audit I do where devs handled a relaunch and SEO was an afterthought. Someone left this in from staging:

<meta name="robots" content="noindex, nofollow">

Google isn’t stupid, but it’s obedient. You say “noindex,” it nopes out. Even if you have a valid sitemap, backlinks, and crawl paths, if this is in the page’s <head>, Googlebot shrugs and walks.
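A leftover staging tag is easy to catch with a script before launch. Below is a rough sketch that scans HTML for blocking directives in the robots meta tag and, since the same directives can also arrive via the `X-Robots-Tag` response header, checks a headers dict too. The function name is hypothetical, and it deliberately ignores header values scoped to a specific user agent.

```python
from html.parser import HTMLParser

BLOCKING = {"noindex", "nofollow", "none"}


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def blocking_directives(html, headers=None):
    """Return the sorted blocking directives found in the HTML or the headers."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    found = set(finder.directives)
    # Header names are case-insensitive, so normalize the keys first.
    lowered = {name.lower(): value for name, value in (headers or {}).items()}
    header_value = lowered.get("x-robots-tag", "")
    found.update(d.strip().lower() for d in header_value.split(",") if d.strip())
    return sorted(found & BLOCKING)


print(blocking_directives('<meta name="robots" content="noindex, nofollow">'))
```

An empty list means nothing in the markup or headers is telling crawlers to stay away. Anything else on a page you want ranked is a launch blocker.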

One weird behavior

We discovered that toggling noindex off doesn’t result in immediate reindexing, even after resubmitting the sitemap in Search Console. You often have to nudge pages back into the index by either:

- Internally linking them from a crawlable/indexed page

- Resharing the URL publicly (Twitter still works!)

- Changing the publication date to give it a freshly updated timestamp

I once went through the hilariously analog act of linking to a freshly-un-noindexed page via a sidebar widget on a high-traffic blog post. Indexed in three days flat. Sometimes silly still works.

Sitemaps That Are Technically Valid but Strategically Useless

Google Search Console will happily say your sitemap index file is perfect, even when the actual URLs inside haven’t been crawled or are just… irrelevant.

Look at your /sitemap.xml. Does it include image attachments? Paginated tag archives? Login-required areas? I once found a 9MB sitemap bloated with WooCommerce attachment pages — and no actual product pages. Search Console gave it a green check. Googlebot decided the whole shop was useless.

You want your sitemaps lean and revenue-oriented. Prioritize pages with meaningful content and internal links. Your sitemap should echo your crawl budget strategy, not your content management baggage.
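To see how much baggage is in there, you can bucket the sitemap's URLs by pattern. This is a small sketch using Python's built-in XML parser. The regexes are my guesses at typical WordPress/WooCommerce junk URLs, so treat them as placeholders and tune them for your own site.

```python
import re
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical patterns for URLs that rarely deserve a sitemap slot.
JUNK_PATTERNS = {
    "attachment": re.compile(r"[?&]attachment_id=|/attachment/"),
    "paginated archive": re.compile(r"/tag/[^/]+/page/\d+"),
    "login area": re.compile(r"/(wp-login\.php|wp-admin|my-account)"),
}


def audit_sitemap(xml_text):
    """Split sitemap URLs into (clean_urls, {flagged_url: [reasons]})."""
    root = ET.fromstring(xml_text)
    clean, flagged = [], {}
    for loc in root.findall("sm:url/sm:loc", NS):
        url = (loc.text or "").strip()
        reasons = [label for label, pattern in JUNK_PATTERNS.items() if pattern.search(url)]
        if reasons:
            flagged[url] = reasons
        else:
            clean.append(url)
    return clean, flagged


sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/product/blue-widget/</loc></url>"
    "<url><loc>https://example.com/?attachment_id=123</loc></url>"
    "<url><loc>https://example.com/tag/sale/page/3/</loc></url>"
    "</urlset>"
)
clean, flagged = audit_sitemap(sample)
print(f"{len(clean)} clean, {len(flagged)} flagged")
```

If the flagged pile is bigger than the clean pile, the sitemap is describing your CMS, not your business.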

Plugins That Lie to Your Face

Yoast, RankMath, All in One SEO — I’ve had all three fail silently. One time Yoast introduced a bug that disabled the XML sitemap toggle for all CPTs (Custom Post Types) if a certain setting combo existed. We only caught it because traffic to our podcast pages vanished over a weekend.

Don’t assume the setting is applied just because the checkbox is checked. Always check the live source code, the actual sitemap URL, and — bonus points — use curl to see if the HTTP headers match what the plugin is claiming.

curl -I https://example.com/podcast/sitemap.xml

If you don’t see Content-Type: application/xml (text/xml is also fine) or you get redirected to a homepage route, the plugin either fumbled or is at war with a caching plugin. Speaking of which:

- W3 Total Cache has randomly cached 404 pages as 200s before

- SiteGround Optimizer sometimes applies compression headers to XML that make it unreadable to Googlebot

- WP Rocket will silently exclude routes based on regex conflicts if you have custom WooCommerce rules
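If you want the curl check to run across a whole list of sitemap URLs, the interpretation step can be written down as a small function. The sketch below only judges a response you have already fetched (status code, headers, optionally the body), so it makes no assumptions about your HTTP client. The function name and the exact rules are mine, not anything a plugin documents.

```python
def diagnose_sitemap_response(status, headers, body=""):
    """Return a list of problems; an empty list means the response looks sane."""
    headers = {name.lower(): value for name, value in headers.items()}
    if 300 <= status < 400:
        target = headers.get("location", "(no Location header)")
        return [f"redirects to {target}"]
    if status != 200:
        return [f"unexpected status {status}"]

    problems = []
    content_type = headers.get("content-type", "").split(";")[0].strip().lower()
    if content_type not in ("application/xml", "text/xml"):
        problems.append(f"unexpected Content-Type: {content_type or '(none)'}")
    # A cached error page served with a 200 will not open like a sitemap.
    start = body.lstrip()[:500].lower()
    if body and not any(marker in start for marker in ("<urlset", "<sitemapindex")):
        problems.append("body does not look like a sitemap")
    return problems


print(diagnose_sitemap_response(301, {"Location": "https://example.com/"}))
print(diagnose_sitemap_response(200, {"Content-Type": "text/html"}, "<!DOCTYPE html>"))
```

The body check is what catches the cached-404-as-200 case, which the headers alone will happily hide.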

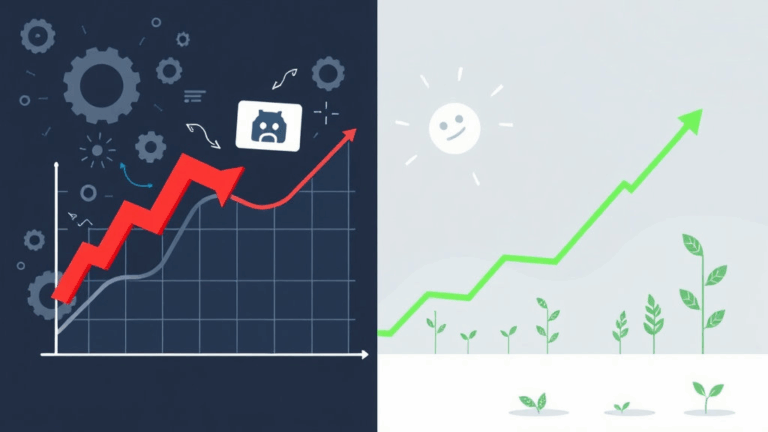

Google Search Console Reports With Misleading Positivity

If everything in GSC says “all good” but your traffic graph looks like the bottom half of a ski slope, it’s likely you’re looking at the wrong report. Most people check “Coverage” (now the “Pages” indexing report) and not “Performance,” which is like checking your tire pressure without looking down to see the thing’s flat.

I once had an ecommerce client with zero GSC errors, green sitemap status, and total chaos in rankings. Performance tab showed a 90% drop in impressions for category pages. Why? Their new JS framework was loading category names client-side, and Google saw empty divs. Instant de-optimization.

That led me to my accidental discovery-of-the-week:

“Googlebot renders up to 5 seconds of JS on category pages, but ignores client-side pagination unless it’s directly linked with <a href="">.”

If your site relies on infinite scroll or hash-routing like /products#page=2, chances are you’ve stranded entire segments of your catalog in unindexable soup. Add real hrefs. Just do it.
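A quick way to find stranded pagination is to list every anchor on a page and flag the ones a crawler cannot follow to a distinct URL. The following is a sketch with Python's standard HTML parser. The verdict labels are my own, and it assumes you feed it the HTML you care about (ideally the rendered DOM).

```python
from html.parser import HTMLParser


def classify_href(href):
    """Label an href by whether it leads to a distinct, crawlable URL."""
    value = (href or "").strip()
    if not value:
        return "missing"
    if value.startswith("#"):
        return "fragment-only"
    if value.lower().startswith("javascript:"):
        return "javascript"
    if "#" in value:
        # The part after '#' is not sent to the server, so /products#page=2
        # is the same URL as /products as far as a crawler is concerned.
        return "has-fragment"
    return "ok"


class LinkAuditor(HTMLParser):
    """Records (href, verdict) for every <a> tag in the document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            self.links.append((href, classify_href(href)))


def audit_links(html):
    auditor = LinkAuditor()
    auditor.feed(html)
    return auditor.links


page = (
    '<a href="/products?page=2">2</a>'
    '<a href="#page=3">3</a>'
    '<a href="/products#page=4">4</a>'
    '<a onclick="loadMore()">More</a>'
)
for href, verdict in audit_links(page):
    print(f"{href!r}: {verdict}")
```

Only the first link in that sample points somewhere new. The other three all need a real URL behind them.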

Analytics That Gaslight You

Three times now I’ve done audits where GA4 or old-school UA pointed to major UX wins… that turned out to be conversion-destructive.

GA tells you bounce rate on key pages has improved? Cool. Is it because you added a sticky modal that makes it impossible to leave without clicking something? Fake metrics.

In one case, a client added an interstitial carousel that users had to click through just to view the listing — conversions dropped 40%, GA4 claimed engagement up 20%. Why? Because time-on-page and click events spiked. Users were trapped, not engaged.

If your organic traffic is steady but revenue’s dropped off a cliff, segment Analytics by landing page → user behavior → end path. Check if organic landers have new friction injected. Popups, overlays, scroll-triggered hell-menus, whatever. SEO gets them there, but Analytics tells you only half of what happens next, and only if you interpret it carefully.

Edge Case: Pages Indexed Without Ever Being Linked Internally

This one creeped me out: a tiny WordPress blog I ran years ago had a ton of login-protected draft posts start appearing in Bing’s index. Not Google, just Bing. They weren’t in the sitemap, weren’t linked, had noindex… but they had preview URLs created while I was logged in.

Apparently, Bingbot sometimes crawls preview links that were shared through MS Teams or picked up by Outlook’s link scanning. This is deeply stupid and exploit-prone. And yes, there was a plugin installed that didn’t restrict preview routes properly.

So: sanitize your preview URLs. They should require login or obfuscated hashes at the very least. Dev mode access leaks are rarely considered during SEO audits — but we’re way past the point where bots only do what we tell them to.
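For the "obfuscated hashes" route, one common approach is a signed, expiring token in the preview URL. This is a bare-bones sketch using Python's `hmac` module, not what WordPress or any plugin actually does. The secret, the function names, and the token layout are all placeholders.

```python
import hashlib
import hmac
import time

# Placeholder secret: load a real one from configuration, never from source code.
SECRET_KEY = b"replace-with-a-real-secret"


def sign_preview(post_id, expires_at, key=SECRET_KEY):
    """Return a hex token tied to one post and one expiry timestamp."""
    message = f"{post_id}:{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def is_valid_preview(post_id, expires_at, token, now=None, key=SECRET_KEY):
    """Accept the token only if it matches and the link has not expired."""
    now = time.time() if now is None else now
    if now > expires_at:
        return False
    expected = sign_preview(post_id, expires_at, key)
    # compare_digest avoids leaking how much of the token matched.
    return hmac.compare_digest(expected.encode(), token.encode())


expires_at = int(time.time()) + 3600  # link is good for one hour
token = sign_preview(42, expires_at)
print(f"/preview/42?expires={expires_at}&token={token}")
print(is_valid_preview(42, expires_at, token))
```

A bot that stumbles on the link after it expires gets nothing, and a guessed post ID fails the signature check. Sending a noindex directive on preview responses as well is a sensible extra layer.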

React Hydration Failures Killing Indexability

If you’ve switched to React/Next.js and rushed page launches with minimal server-side rendering, congrats: Google probably sees half your content. The pages look fine in the browser, but the raw HTML is a big blank shell with JS bootstrapping everything.

The kicker? Googlebot loads the page, sees a minimal HTML payload, and bails before hydration finishes. GSC says “Page indexed without content.” And that isn’t flagged as an error; it’s a shrug emoji from the index.

Tell-tale Signs:

- Title and meta description show in SERPs, but no snippet text

- Random pages disappear from index despite being linked

- Performance tab drop-off lines up with JS deployment dates

The shortest fix is to add basic SSR output for top-funnel pages: even just static pre-rendered state for product data. Don’t count on rehydration to save you — Googlebot isn’t waiting. It already left the building.
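To check whether a page is shipping a blank shell, measure how much readable text is in the raw HTML before any JavaScript runs. Here is a rough sketch with Python's standard parser. The 200-character threshold is an arbitrary number I picked for illustration, and the function names are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text outside <script>, <style>, and <title>."""

    SKIP = {"script", "style", "title"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def visible_text_length(html):
    """Count characters of body text available without running any JS."""
    parser = VisibleText()
    parser.feed(html)
    return sum(len(chunk) for chunk in parser.chunks)


def looks_like_empty_shell(html, min_chars=200):
    """True when the raw HTML carries less text than the (arbitrary) threshold."""
    return visible_text_length(html) < min_chars


shell = (
    "<html><head><title>Shop</title></head><body>"
    '<div id="root"></div>'
    "<script>window.__DATA__ = {};</script>"
    "</body></html>"
)
print(visible_text_length(shell), looks_like_empty_shell(shell))
```

Feed it the response body from a plain HTTP fetch, not the DOM from your browser. If top-funnel pages come back as shells, those are the ones to pre-render first.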