Fixing Old Blog Posts That Still Rank But Don’t Convert

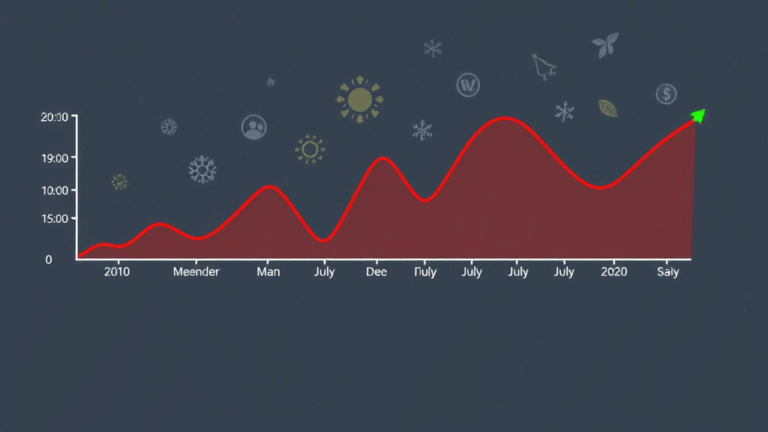

Why Google Loves Outdated Pages That No One Reads

There’s this weird thing with Google: sometimes your most ancient blog post — like the one you wrote half-awake in 2018, mainly to vent — still ranks on page one. But the bounce rate? Straight-up ghost town. I’ve got this post about “AdSense RPM optimization” that pulls in maybe 800 visits a month and has literally never converted a single reader to any meaningful action. Still shows up in Search Console like it’s one of my star players.

It turns out, Google doesn’t care much if the info is stale, just that it matches some keyword intent reasonably well and doesn’t piss off too many people. That’s the logic flaw: engagement metrics are downstream signals, but staleness isn’t directly penalized unless the topic’s super time-sensitive (e.g., Android SDK updates). So the engine keeps surfacing a clunky old how-to from years ago because the shell of it still fits the query.

Eventually I figured out the sandbox-safe move is to surgically update stale content – just enough to refresh without “resetting” the post’s index weight. Don’t 301 it. Don’t delete it. Just defibrillate it quietly.

Hidden Traps in Outdated Monetization Advice

So many old posts say stuff like “Enable Auto Ads” or “Place ads above the fold.” Following that advice now is like walking through a labyrinth blindfolded. The AdSense interface has changed so many times that half the screenshots from 2021 or earlier are wildly misleading, especially with the move to AdSense for Search blending into a site’s core content layouts, causing layout shifts (which tank CLS scores, in case you’re watching Core Web Vitals).

A sneaky edge case: turning on Auto Ads with a third-party responsive theme can cause invisible containers to push ad slots below the visible screen area entirely. I burned two hours trying to figure out why I couldn’t spot a single ad on mobile — turns out, a div with display: contents was nuking the expected structure and AdSense quietly gave up rendering anything.

Also, the optimal ad density has changed. It’s not about shoving an ad “every 300 words” like someone used to recommend. These days, it’s about user continuity. Cut your frequency by half and shift your units to <aside> context — I saw RPM increase doing that. Counterintuitive at first.

Updating Without Triggering Manual Reviews or Ranking Drops

Once, I updated eleven posts in one night — all about various AdSense tweaks — and suddenly I had three flagged for “violations.” Turns out, I triggered an automated re-review on old pages by editing content blocks near the top where previously injected ad code lived. Not because I added anything shady, just because the crawler detected layout code rewrites and queued a check.

The fix: throttle your edits. Literally. If your old post is ranked and monetized but riddled with dust, only update one block per crawl. Start with the meta title and slug (NOT the URL), then wait. A few days later update the intro. Then the in-body H2s with newer examples. Basically, don’t Hulk-smash the post with a full rewrite unless you’re okay with it losing position and resetting revenue.
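If you want to keep yourself honest about the throttling, here is a minimal sketch of a staged schedule. The block order comes from the paragraph above; the four-day gap is my assumption for “a few days,” and `plan_staged_edits` is a made-up helper name, not part of any CMS.

```python
from datetime import date, timedelta

# Order taken from the paragraph above. The gap between steps is an assumption.
EDIT_ORDER = ["meta title", "intro", "in-body H2s"]


def plan_staged_edits(start, blocks=EDIT_ORDER, gap_days=4):
    """Return (date, block) pairs: one block per step, gap_days apart."""
    return [
        (start + timedelta(days=i * gap_days), block)
        for i, block in enumerate(blocks)
    ]


for when, block in plan_staged_edits(date(2024, 3, 1)):
    print(when.isoformat(), block)
```

Stretch `gap_days` if your posts get crawled less often; the point is one block per step, never the whole post at once.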

Signs you’re about to get dinged:

- Sudden drop in RPM but stable traffic

- The experimental banner rollout disappears mysteriously

- Ad review center shows old disapproved ad types resurfacing

You’ll find no documentation about this rollback ping. But it’s a real thing. Eventually I started logging edit dates right in my CMS just to track which post changes led to an AdSense panic.
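The first warning sign (RPM drops while traffic holds) is easy to check against an edit log. This is a rough sketch, assuming you can pull revenue and pageview totals for equal-length windows before and after a logged edit date. The 30% drop and 10% traffic tolerance are placeholder thresholds I picked, not anything AdSense publishes.

```python
def rpm(revenue, pageviews):
    """Page RPM: revenue per 1,000 pageviews."""
    return 1000.0 * revenue / pageviews if pageviews else 0.0


def rpm_dropped_traffic_stable(before, after, rpm_drop=0.30, traffic_tol=0.10):
    """before/after are (revenue, pageviews) totals for equal-length windows.

    Returns True when pageviews moved by at most traffic_tol (fractional)
    while RPM fell by at least rpm_drop (fractional).
    """
    rpm_before, rpm_after = rpm(*before), rpm(*after)
    if rpm_before == 0:
        return False  # nothing to compare against
    traffic_change = abs(after[1] - before[1]) / before[1]
    rpm_change = (rpm_before - rpm_after) / rpm_before
    return traffic_change <= traffic_tol and rpm_change >= rpm_drop
```

Run it on the week before and the week after each logged edit; a `True` is a cue to go look at the ad review center, not proof of anything.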

Identifying High-Potential Posts That Deserve Reviving

Okay, ignore your gut. Gut is dumb when it comes to content rehab. What you want are posts that:

- Still draw search traffic over 30/day

- Have not been linked to by you (or anyone) in over 9 months

- Have some absurdly ancient embedded data, like a 2012 screenshot or a mention of YPN, the Yahoo Publisher Network (yes, that existed)

- Show good CTR but terrible on-page time (often under 20 seconds)
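Since the gut is off the table, the checklist above can be turned into a filter. This is a sketch under assumptions: `PostStats` is a hypothetical record you would fill from your own exports, “good CTR” is pegged at 3% purely as a placeholder, and nine months is rounded to 270 days.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PostStats:
    url: str
    daily_search_visits: float
    last_linked: date          # most recent link from you or anyone else
    search_ctr: float          # 0..1, e.g. from a Search Console export
    avg_time_on_page: float    # seconds
    has_ancient_embeds: bool   # flagged by hand (old screenshots, dead tools)


def is_revival_candidate(p, today, min_visits=30, stale_link_days=270,
                         min_ctr=0.03, max_time=20):
    """True when a post meets every criterion in the list above."""
    return (
        p.daily_search_visits > min_visits
        and (today - p.last_linked).days > stale_link_days
        and p.has_ancient_embeds
        and p.search_ctr >= min_ctr
        and p.avg_time_on_page < max_time
    )
```

Loosen it to “any three of four” if the strict version returns nothing; the thresholds matter less than actually checking the numbers.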

One of my oldest live webinar posts still pulled decent traffic because of a weird GPT blog that linked to it from a scraped listicle. Once I replaced the dead screenshots, updated the tool mentions (replaced Blab with StreamYard — yep, that’s how old it was), and added a note about integrating YouTube Live’s Super Chat monetization, it finally started holding users for longer than a click-bounce.

Aha moment: older posts often fail on trust, not content. Readers bounce because they open the page and everything looks like 2014. Update three visuals and the timestamp on the publishing label and they stick. I had one post get about 35% longer time on page after doing just that, even though the copy was 90% the same.

Situations Where Republishing Actually Hurts

I tried turning one of my worst-performing AdSense posts into a new draft to fully rewrite and republish under a new title. Disaster. The new version got indexed, all right — and then Google just parked it somewhere on page five while de-indexing the old post entirely. Same domain, same sitemap, same structure. Poof.

Here’s the weird catch: if the old post has existing backlinks (mine had like 7 weird ones from forums) and you don’t 301 every old version and set the canonicals properly, internal links included, Google just assumes redundancy and kicks one out. And you can’t predict which it tosses. If you must clone, move the legacy version to a new slug with “-archive” just to preserve signals before launching the rewrite.

Behavioral bug: reusing titles like “How to Maximize RPM” actually creates internal competition, and it shows up in Search Console. You’ll see keyword impressions get split between two URLs, and both underperform.
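To spot that split before it costs you, you can scan a Search Console export for queries where two URLs each take a real share of impressions. A minimal sketch, assuming rows of `(query, page, impressions)`; the 20% share cutoff and the function name are my own choices.

```python
from collections import defaultdict


def find_split_queries(rows, min_share=0.2):
    """rows: iterable of (query, page, impressions).

    Returns {query: [(page, impressions), ...]} for queries where at least
    two pages each hold min_share or more of that query's impressions.
    """
    by_query = defaultdict(lambda: defaultdict(int))
    for query, page, impressions in rows:
        by_query[query][page] += impressions

    split = {}
    for query, pages in by_query.items():
        total = sum(pages.values())
        if total == 0:
            continue
        contenders = [(pg, n) for pg, n in pages.items() if n / total >= min_share]
        if len(contenders) >= 2:
            # Biggest share first, so the likely "winner" is easy to see.
            split[query] = sorted(contenders, key=lambda item: -item[1])
    return split
```

Anything it returns is a candidate for a retitle, a canonical, or a merge.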

Tracking Post-Update Monetization Without Polluting Data

This still frustrates me. If you retrofit AdSense logic into a refreshed post (say, by moving the in-article ad from the 400px mark to just before the second H2), you won’t see that as a discrete change in any dashboard by default. That’s not even an Analytics anomaly; that’s just how dumb the default data tracking is.

Here’s what works, IF you don’t mind duct-taping:

- Use a new ad unit ID just for the updated post

- Track RPM at page level via GA4 and set a custom dimension for an UpdateBatch label

- Log the final DOM position of ad slots using a synthetic session (a script that loads the page in headless Chrome and captures render positions)

- Also note how ad demand sometimes rerolls entirely post-edit — I’ve seen finance blogs turn into ecommerce ads literally overnight after adding one product-link demo
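Once the UpdateBatch label exists, the comparison itself is just arithmetic. Here is a sketch that assumes you have exported rows of `(update_batch, pageviews, revenue)` from wherever your page-level numbers live; the row shape and batch names are hypothetical.

```python
from collections import defaultdict


def rpm_by_batch(rows):
    """rows: iterable of (update_batch, pageviews, revenue).

    Returns {batch: RPM}, where RPM is revenue per 1,000 pageviews,
    rounded to two decimals. Batches with zero pageviews are skipped.
    """
    totals = defaultdict(lambda: [0, 0.0])  # batch -> [pageviews, revenue]
    for batch, pageviews, revenue in rows:
        totals[batch][0] += pageviews
        totals[batch][1] += revenue
    return {
        batch: round(1000 * revenue / pageviews, 2)
        for batch, (pageviews, revenue) in totals.items()
        if pageviews
    }
```

Compare batches over similar date ranges, otherwise seasonality and the ad-demand reroll mentioned above will muddy the result.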

Honestly, this stuff should be native inside AdSense. Granular revision tracking + monetization impact = dream setup. Instead we’re stuck with spreadsheets, GA4 spaghetti, and guessing.

Dealing With Webinar Posts That Aged Like Milk

I had several posts promoting webinar monetization strategies. One of them still had “use Facebook Live and ask for donations via PayPal.me” as a strategy. I started laughing halfway through rereading it. Felt like a mom blog from the first pandemic spring.

The fix wasn’t content; it was context. Readers clicking into that page now are probably here because they’re prepping webinars tied to product funnels, not just awareness. So the monetization angle had to evolve: less about live chat stickers, more about layered funnel-build integration (e.g. webinar → replay gate → course teaser → Stripe checkout). Adding that flow more than doubled the conversion click-through rate without changing the article title or total word count.

Weird undocumented behavior:

If you embed an archived YouTube Live session into your tutorial page and only enable monetization on the channel after the video is already embedded in the HTML, the revenue stats don’t start counting in AdSense for at least 72 hours. Even if ads play before that, you miss the early data.

Also, if the embedded player autoplays on load, click events get suppressed on mobile. Took me a day to figure out why tracking pixel events from webinar CTA buttons dropped post-update. Answer: autoplay blocks focus events. Who knew.

Never Rely on Google’s “Last Update” Timestamp

This one drove me completely nuts. I updated a monetization walk-through post — restructured it, replaced embeds, even added code samples — but the Google snippet still showed “Published Jan 2019” for weeks.

The trick I learned? They don’t always pull the update date from the <meta name="lastmod"> tag if the site uses any caching shenanigans. In my case, Cloudflare was caching my rendered pages, and even though my CMS said Updated Today, the raw HTML didn’t change the timestamp block until I purged edge cache manually.

Quote from my error log of shame:

2023-09-10 14:02:10 +0000 LIVE_CACHE.RES: lastmod unchanged (2019-01-02)

So yeah, Google’s crawler saw that and did nothing. It’s not even considered a bug; it’s just bad tooling expectations. Now I always combine an update with cache purge, sitemap ping, and a new comment near the top of the post (“Updated for new policies!”) so the system gets the hint.
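You can catch the stale-cache problem yourself by checking the HTML that actually gets served, not what the CMS claims. A minimal sketch, assuming the date sits in the `content` attribute of the `lastmod` meta tag (that attribute is my assumption about the markup) and that you pass in the raw HTML as fetched from the public URL.

```python
from html.parser import HTMLParser


class LastmodFinder(HTMLParser):
    """Grabs the content of the first <meta name="lastmod"> tag."""

    def __init__(self):
        super().__init__()
        self.lastmod = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and attr_map.get("name") == "lastmod" and self.lastmod is None:
            self.lastmod = attr_map.get("content")


def served_lastmod(html):
    finder = LastmodFinder()
    finder.feed(html)
    return finder.lastmod


def cache_is_stale(html, cms_date):
    """True when the served HTML does not carry the date the CMS reports."""
    served = served_lastmod(html)
    return served is None or not served.startswith(cms_date)
```

Fetch the page the way a crawler would (through the CDN, not a logged-in preview) and feed the response body in. If `cache_is_stale` comes back `True` after an update, purge before doing anything else.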

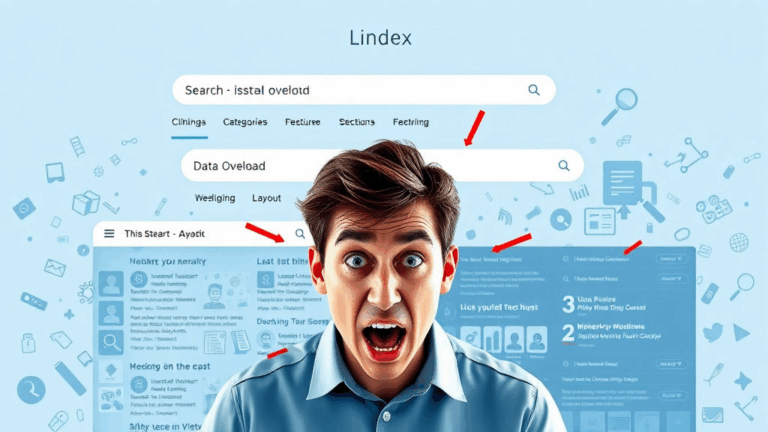

Blog Tags That Accidentally Tank Discover Traffic

Here’s a weird one: I once changed a tag group from “monetization” to “adsense-strategy” to better align with post titles. Reader traffic didn’t budge, but Discover impressions nearly halved. Turns out, some of my Discover visibility stemmed from internal tag URL strength — and I inadvertently starved those URLs by removing the anchor tag routes that Googlebot used to navigate topic clusters.

Undocumented edge case: if you don’t link to your tag pages from non-sidebar areas (like navbars, content footers, or in-article backlinks), Google often deprioritizes their crawl depth — but still weighs them during Discover recommendation slotting.
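A quick way to audit this on a rendered page: list the tag links that only ever appear in the sidebar. This sketch assumes the sidebar is marked up as `<aside>` and that tag URLs start with `/tag/`. Both are guesses about your theme, so adjust them.

```python
from html.parser import HTMLParser


class TagLinkAudit(HTMLParser):
    """Sorts tag-page links into 'inside <aside>' and 'everywhere else'."""

    def __init__(self, tag_prefix="/tag/"):
        super().__init__()
        self.tag_prefix = tag_prefix
        self.aside_depth = 0
        self.in_sidebar = set()
        self.outside = set()

    def handle_starttag(self, tag, attrs):
        if tag == "aside":
            self.aside_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href") or ""
            if href.startswith(self.tag_prefix):
                bucket = self.in_sidebar if self.aside_depth else self.outside
                bucket.add(href)

    def handle_endtag(self, tag):
        if tag == "aside" and self.aside_depth:
            self.aside_depth -= 1


def sidebar_only_tag_links(html, tag_prefix="/tag/"):
    """Return tag URLs linked from the sidebar but nowhere else on the page."""
    audit = TagLinkAudit(tag_prefix)
    audit.feed(html)
    return sorted(audit.in_sidebar - audit.outside)
```

Whatever it returns are the tag pages worth linking from a navbar, a content footer, or inside an article.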

So now, if I update an older post, I check the tags too. Sometimes just restoring the original tag slug fixes the crawl consistency issue within a week. It’s a mess, but Google made it that way.