Figuring Out Google Blogger Comments for Actual User Research

Why Blogger’s Comments Matter More Than the Stats Panel

If you’ve been treating Blogger comments like a leftover subsystem while spending all your time obsessing over daily pageviews, you’re ignoring a goldmine. Blogger’s baked-in analytics (that whole “Overview” tab) is glorified ego bait — decent at traffic reporting, horrific for understanding emotional pain points. Comments though? That’s where people straight up tell you what they hated, didn’t understand, or wanna see more of.

I realized this after a reader ranted in broken English about how I explained AdSense custom channels. They didn’t ask a question — they told a story. And I learned more from that post than from three weeks of Google Analytics trend lines.

“Why is not show math money? Ad code in all but still I not seeing… maybe your HTML is broken?”

Turns out, his iframe was blocked due to malformed cache control. Totally my bad — but I only found it because I read his weird comment twice. So yeah, comments aren’t fluff. They’re informal feedback logs with emotional annotations.

Comment Notification Lag That Breaks Tracking Cycles

Blogger has a subtle bug (or maybe intentional behavior?) where comment notification emails sometimes arrive hours late, especially on weekend posts. If you’re trying to run sentiment clustering or change analysis week over week, and your only trigger is inbox alerts? You’ll misalign your timestamps. I used to paste new comment timestamps next to my AdSense revenue snapshot for morning posts. Once I realized some notifications only hit the next day, the model’s correlation assumptions fell apart.

To avoid your data getting out of phase:

- Manually scrape the comment DOM every 15–30 minutes with a headless browser of choice.

- Or bookmark the comment feed Blogger exposes (e.g., /feeds/comments/default, which returns Atom XML unless you append ?alt=json) and poll it.

Yes, you’ll lose some emojis. Yes, it’s better than missing roadmapping insight entirely.
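Here's a rough sketch of the polling option in Python, in case it helps. The blog URL you pass in, the function names, and the exact JSON layout (the `$t` wrappers around each value) are my assumptions about how the feed looks with `alt=json`; check them against your own feed before trusting the output.

```python
import json
import urllib.request
from datetime import datetime


def fetch_comment_feed(blog_url: str, max_results: int = 50) -> dict:
    """Download the blog-wide comment feed as JSON (hypothetical helper)."""
    url = (
        f"{blog_url.rstrip('/')}/feeds/comments/default"
        f"?alt=json&max-results={max_results}"
    )
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def parse_comments(feed: dict) -> list:
    """Flatten feed entries into plain dicts keyed by the fields we care about."""
    rows = []
    for entry in feed.get("feed", {}).get("entry", []):
        authors = entry.get("author") or [{}]
        # Assumed shape: every value is wrapped as {"$t": "..."}.
        raw_time = entry["published"]["$t"].replace("Z", "+00:00")
        rows.append(
            {
                "id": entry.get("id", {}).get("$t"),
                "published": datetime.fromisoformat(raw_time),
                "author": authors[0].get("name", {}).get("$t"),
                "body": entry.get("content", {}).get("$t", ""),
            }
        )
    return rows
```

Run `parse_comments(fetch_comment_feed(...))` from cron every 15 to 30 minutes and store the feed's own `published` value, not the time your inbox noticed.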

The de-sync gets utterly stupid if you’re using third-party comment systems (like Disqus) injected via Blogger script hacks — those introduce even more caching layers, and the effective timestamp the user sees isn’t even deterministic. Found one case where a comment looked recent on-page but was 3 days old in the raw feed. Completely nuked my trend line for proposed topic clustering.

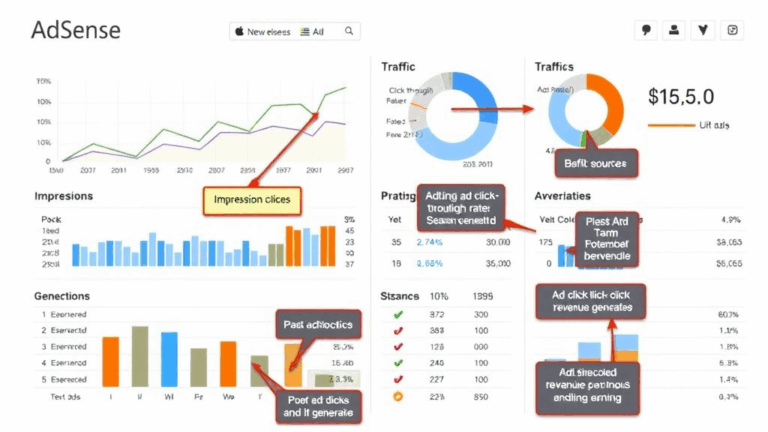

Correlating Comment Phrases With AdSense Click Patterns

I didn’t expect this to work. I ran a sentiment shell script across a few months of comments and bucketed phrases that looked like “this helped me,” “can you do one on __,” and the ever-rare “finally someone gets it.” Then I went into AdSense over the same range, pulled RPM fluctuations post-by-post, and merged it into the same sheet. Guess what?

Posts with very specific kinds of gratitude (“you solved my 2FA problem that nobody explained right”) almost always had a higher CTR on text ads. Posts with vague praise (“thanks a lot wow”) didn’t. It’s like people who really click with your content also trust your sidebar links a lot more.

So yeah, comment phrasing categories are a weirdly good proxy for monetization intent. I now parse them via a crude keyword bucket system:

- “How to fix” + device name → likely DIYer audience → lower RPM but high impressions

- Named tools with adjectives (e.g., “better than Jetpack”) → comparison shoppers → solid for affiliate overlays

- Any mention of “tutorial” → walk-through addicts → lower bounce, but rarely click ads

No ML model needed — just basic regex, good enough to beat a gut check.
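For the curious, the bucket system can be sketched in a few lines of Python. This is not my exact script: the bucket names, the device list, and the list of comparative adjectives are placeholders I made up for illustration, so swap in whatever your own comments actually contain.

```python
import re

# Hypothetical device names; replace with the ones your readers mention.
DEVICES = r"(?:iphone|android|pixel|router|kindle)"

BUCKETS = [
    # "How to fix" followed somewhere later by a device name.
    ("diy_fixer", re.compile(rf"\bhow to fix\b.*\b{DEVICES}\b", re.I | re.S)),
    # Comparative adjective + "than" + a capitalized tool name.
    (
        "comparison_shopper",
        re.compile(r"(?i:\b(?:better|worse|faster|slower|cheaper)\s+than)\s+[A-Z]\w*"),
    ),
    # Any mention of "tutorial".
    ("walkthrough_fan", re.compile(r"\btutorial\b", re.I)),
]


def bucket_comment(text: str) -> list:
    """Return every bucket whose pattern matches the comment text."""
    return [name for name, pattern in BUCKETS if pattern.search(text)]
```

A comment can land in more than one bucket, which is fine for a spreadsheet pivot. If you want exactly one label per comment, take the first match instead.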

Jumping Over Moderation Delays Without Fully Disabling Moderation

So Blogger has no middle ground when it comes to comment moderation: you either pre-approve every comment (which kills feedback immediacy) or you turn it into the Wild West and hope spam filters catch the crypto bots.

I once tried quietly whitelisting long-time users by rolling my own dashboard sample. Turns out Blogger doesn’t expose comment author metadata reliably unless the user is signed in via a Google account — and even then, the public display name can be spoofed.

The workaround that worked surprisingly well: create a set of custom JS triggers on comment form submit, and attach localStorage flags to repeat pseudonyms. Let them post live only after three prior submissions cleared approval.

Totally unsupported. Kind of hacky. But it lets me soft-trust specific usernames without disabling moderation engine-wide. I dumped the script in the template’s footer code block, and ran name checks before triggering “awaiting moderation” state.

When Mobile Comment Threads Quietly Explode

Here’s the undocumented mess: on certain Blogger mobile layouts (especially the older “Notable” or “Simple” templates), nested comment threads silently break at depth 2. The link to reply works. The form shows. But submissions vanish into the abyss because the mobile stylesheet collapses the parent chain and Blogger never throws an error — it just acts like it worked.

I found this while QA’ing a burst of replies one night — desktop showed 14 comments, but my Android test page showed 9. Same post, same profile. Only by switching to “Desktop Site” on Chrome did I see the missing replies.

Fix? You’ll need to:

- Force-load the comment container via vanilla JS on mobile orientation load.

- Or just block replies after depth 1 with a warning like “mobile replies limited — respond on desktop for threads.”

I went with the latter because who even reads comment chains past 2-deep on a 400px screen?

Mining Comment Timing for Editorial Planning

This took me way too long to recognize, but comment timing gives you editorial planning fuel that traffic graphs won’t. Not just how many comments, but how fast they trickle in.

If a post starts getting feedback in the first 45 minutes, especially the kind that includes clarifying questions or setup confusion, that means your title or intro worked — it pulled in people trying to solve something, not just skim. If you get comments three days later? That’s long tail. Not bad, but not urgent.

I now batch this into three metrics:

- initial_comment_lag: seconds from publish to first non-spam comment

- interaction_burst_window: time of peak comment cluster

- late_trickle_ratio: number of comments after day 3 / total

And I weight those when slotting repeat content. My AdSense tutorial rewrites only get scheduled for high late_trickle_ratio posts. Because they’re evergreen pain points — people don’t comment immediately, but they go deep later and let it out.
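A minimal sketch of how those three numbers could be computed in Python. It assumes spam has already been filtered out upstream, and it defines the "peak cluster" as the busiest fixed 15-minute bucket after publish. That bucket size is an arbitrary choice for the example, not something Blogger gives you.

```python
from collections import Counter
from datetime import datetime, timedelta


def timing_metrics(published_at, comment_times, burst_bucket=timedelta(minutes=15)):
    """Compute the three timing metrics for one post.

    comment_times: datetimes of non-spam comments (filtering is assumed done).
    Returns None when the post has no comments.
    """
    if not comment_times:
        return None
    times = sorted(comment_times)

    # Seconds from publish to the first comment.
    initial_comment_lag = (times[0] - published_at).total_seconds()

    # Count comments per fixed-size bucket, then pick the busiest one
    # (earliest bucket wins a tie).
    bucket_seconds = burst_bucket.total_seconds()
    counts = Counter(
        int((t - published_at).total_seconds() // bucket_seconds) for t in times
    )
    peak_bucket = min(counts, key=lambda b: (-counts[b], b))
    interaction_burst_window = published_at + peak_bucket * burst_bucket

    # Share of comments arriving more than three days after publish.
    late = sum(1 for t in times if t - published_at > timedelta(days=3))
    late_trickle_ratio = late / len(times)

    return {
        "initial_comment_lag": initial_comment_lag,
        "interaction_burst_window": interaction_burst_window,
        "late_trickle_ratio": late_trickle_ratio,
    }
```

The burst window comes back as the start time of the busiest bucket, which is easy to line up against a revenue snapshot for the same hour.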

The Duplicate Comment Glitch That Triggers False Positives

Blogger will sometimes create phantom double-comment events if a user reloads the page too early or hits submit twice due to mobile lag. It looks like a duplicate comment in your feed XML, but one is missing an author field or has a malformed timestamp. If you’re piping comments into BigQuery for pattern detection (I do this via daily scrapes + scheduled ingests), this can mess with uniqueness constraints fast.

It took me a half-day to catch that my engagement counter was inflated because one commenter hit Post twice on 2G. The first comment timed out to nowhere, but Blogger saved it anyway. Kind of. It showed up three hours later, retroactively. Same text, slight field mismatch. Zero front-end visibility of the issue.

Now my deduping job considers:

- comment body similarity > 98%

- author field equality OR both null

- timestamp within 600sec window

That cut the share of duplicates slipping through as unique comments from 12% to under 1.5%. Yeah, sorry, I don’t trust Blogger’s backend timestamps after 2021.
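The three rules translate into Python roughly like this. It's a sketch, not my production job: I'm using `difflib.SequenceMatcher` from the standard library as the similarity measure here, and the dict keys (`author`, `published`, `body`) are just the field names I picked for the example. It also assumes malformed timestamps were already repaired or dropped before this step.

```python
from datetime import datetime, timedelta
from difflib import SequenceMatcher


def is_duplicate(a, b, min_similarity=0.98, window_seconds=600):
    """Apply the three rules: similar body, matching author, close in time."""
    # Equality also covers the "both null" case, since None == None.
    same_author = a["author"] == b["author"]
    close_in_time = (
        abs((a["published"] - b["published"]).total_seconds()) <= window_seconds
    )
    similar = SequenceMatcher(None, a["body"], b["body"]).ratio() > min_similarity
    return same_author and close_in_time and similar


def dedupe(comments):
    """Keep the earliest copy of each comment, drop later near-identical ones."""
    kept = []
    for comment in sorted(comments, key=lambda c: c["published"]):
        if not any(is_duplicate(comment, k) for k in kept):
            kept.append(comment)
    return kept
```

This compares every comment against everything already kept, so it's quadratic. That's fine for a daily scrape of one blog, less fine for a full historical backfill.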

The Accidental A/B Test from Multi-Language Comments

Here’s one of those moments where being lazy turned into a makeshift discovery. I forgot to filter out multi-language comments from a stats poller I ran in late spring. Mostly English audience, but I’d occasionally get stuff in Hindi, Tagalog, and weirdly formal French.

I exported two slices: posts with >10% non-English comment volume and posts with zero. Matched those against AdSense CTR by country. Found a consistent pattern: posts with multilingual commenter activity also had vastly higher mobile ad engagement, mostly from SEA and MENA regions, even when traffic was static.

It’s like comment diversity correlates with ad diversity traction. This is not scientific. But I now push multilingual SEO metadata only on posts that already chunk up with international-style comments. Blogger doesn’t support hreflang mapping natively, but a basic link tag trick handles it well enough (just wedge a rel="alternate" link with an hreflang attribute into the template’s head section with conditional layout logic). So yeah. Accidentally learned comment language isn’t just cosmetic. It’s a proxy stat for commercial diversity.