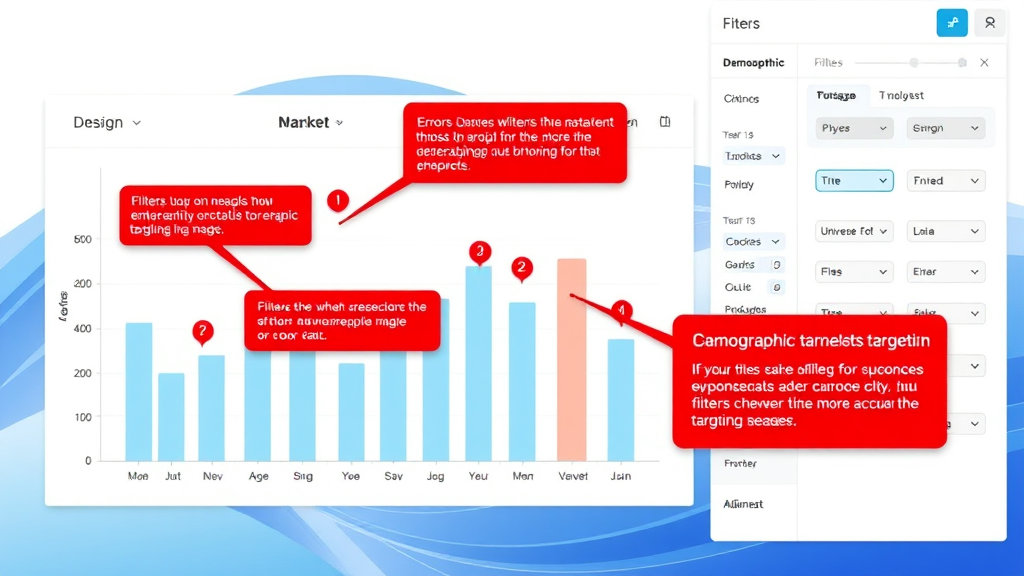

Demographic Targeting Quirks in Market Research Tools

When “Target by Age” Actually Means “Guess Their Millennial Vibes”

Ran a campaign earlier this year targeting 35–44. Should’ve been straightforward. I selected that exact age band in the platform (won’t name names, but it’s one of the bigger survey tools) and let it run, only to realize, after wasting around two hours watching inconsistent response times by ZIP code, that half my respondents were aged 22–27. How? The system prioritized existing ‘similar user profiles’ over the declared age range unless you checked a tiny “strict screening” option buried in the logic settings.

It’s not just a bug; it’s a design preference. Demographic soft-targeting gets you more responses, but it ends up scraping borderline-irrelevant data. The default behavior trades accuracy for response volume, and it’s rarely documented: you only find out by digging through the chaos of their support forum or by noticing weird metadata leaks in exported CSVs. One respondent literally typed into the feedback box: “Wait, this survey said it was for homeowners, I still live with my dad.”
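If your export includes each respondent’s self-reported age (not every platform’s does), a few lines of Python show how much of the sample landed outside the band you targeted. This is a minimal sketch: the `age` column name is hypothetical, and blank or non-numeric answers are counted as suspect rather than silently skipped.

```python
import csv
import io

def out_of_band_share(rows, column="age", low=35, high=44):
    """Return (share_outside, outside_rows) for rows whose self-reported
    age is blank, non-numeric, or outside [low, high].

    `column` is a hypothetical name; match it to your export's header.
    """
    outside = []
    for row in rows:
        raw = (row.get(column) or "").strip()
        try:
            age = int(raw)
        except ValueError:
            outside.append(row)  # blank or non-numeric answers count as suspect
            continue
        if not low <= age <= high:
            outside.append(row)
    share = len(outside) / len(rows) if rows else 0.0
    return share, outside

# In-memory sample; use open("export.csv", newline="") for a real file.
sample = io.StringIO("respondent_id,age\n1,38\n2,24\n3,41\n4,\n")
rows = list(csv.DictReader(sample))
share, flagged = out_of_band_share(rows)
print(f"{share:.0%} outside 35-44:", [r["respondent_id"] for r in flagged])
# prints: 50% outside 35-44: ['2', '4']
```

The same pattern works for any screener you can re-check against a declared answer, such as the homeowner question.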

Geo-Targeting Wobble When IPs Lie or VPNs Are Involved

Most platforms claim to support location-based filters, but what they often mean is: “we’ll make our best guess using IP + user profile.” That process breaks hilariously fast behind a VPN or a corporate network. I had a client run a product concept test limited to Brooklyn, and we flagged anomalies when over 20% of responses came through Amazon EC2 address blocks in Virginia. If you’re counting on city-level accuracy, assume someone somewhere is filling out your survey from a coworking space… in another time zone.

Cloudflare has a pretty decent explanation of IP-based edge routing over at cloudflare.com, and it helps make sense of how this kind of thing happens. But your survey tool probably won’t tell you when it’s guessing. Some providers do embed location confidence scores in metadata exports, but those don’t get surfaced in summary dashboards, so you need to script your own sanity checks if this matters to your data integrity.
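Here’s a minimal sketch of that kind of sanity check. It assumes your export has an IP column and a location-confidence column (the names `ip` and `geo_confidence`, the 0-to-1 scale, and the 0.6 threshold are all hypothetical; vendors label and scale these differently), and it flags rows that either come from a known datacenter range or fall below the confidence threshold. AWS publishes its address ranges as JSON at https://ip-ranges.amazonaws.com/ip-ranges.json; the two prefixes hardcoded below are documentation placeholders, not real cloud ranges.

```python
import ipaddress

# Placeholder "datacenter" ranges: these are RFC 5737 documentation blocks,
# NOT real cloud ranges. In practice, load them from a provider's published
# list (AWS's ip-ranges.json has a "prefixes" array with "ip_prefix" values).
DATACENTER_PREFIXES = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]

def geo_flags(row, min_confidence=0.6):
    """Return reasons this row's location looks unreliable (empty list = ok).

    'ip' and 'geo_confidence' (assumed 0-1 scale) are hypothetical column
    names; map them to whatever your vendor's export calls them.
    """
    reasons = []
    try:
        ip = ipaddress.ip_address((row.get("ip") or "").strip())
    except ValueError:
        reasons.append("missing or malformed IP")
    else:
        # An IPv6 address tested against an IPv4 network just returns False.
        if any(ip in net for net in DATACENTER_PREFIXES):
            reasons.append("datacenter IP")
    try:
        if float(row.get("geo_confidence") or "") < min_confidence:
            reasons.append("low geo confidence")
    except ValueError:
        reasons.append("no geo confidence score")
    return reasons

rows = [
    {"id": "a", "ip": "192.0.2.10", "geo_confidence": "0.92"},
    {"id": "b", "ip": "203.0.113.7", "geo_confidence": "0.95"},
    {"id": "c", "ip": "192.0.2.44", "geo_confidence": "0.31"},
]
for r in rows:
    print(r["id"], geo_flags(r) or "ok")
```

Returning reasons instead of a bare boolean makes it easier to tell a VPN problem apart from a vendor that simply didn’t export a confidence score.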

Timeouts in Conditional Paths That Nobody Warns You About

I once built this gorgeous segmented path logic (about 11 branches deep) to serve content based on respondents’ employment type, purchase intent, and education level. Turns out one of the branches was silently timing out for mobile respondents on iOS 15 Safari. The bug came down to the in-app browser not rendering the hidden field that triggered a branch, so the logic just looped and then crashed into a default path. Nothing was logged. It took me forever to figure out because it looked like responses were simply dropping.

The “aha” moment? Viewing the page source mid-survey in Safari’s Web Inspector while screen recording, and noticing the HTML never even pulled in the branch-logic scripts. They just… didn’t finish loading, and left the respondent hanging. Platform support eventually admitted they had deprecated iOS 15 support two weeks earlier but hadn’t updated their user-agent handling or the docs to reflect that.
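Breaking completion rate out by platform early tends to surface this kind of silent drop faster than staring at the aggregate. A minimal sketch, assuming your export includes the raw user-agent string and a completion flag (the column names `user_agent` and `completed` are hypothetical): it pulls the iOS major version out of the `iPhone OS 15_x` token that iOS browsers put in their user agent and groups completion rates by it.

```python
import re
from collections import defaultdict

# iOS browsers report e.g. "CPU iPhone OS 15_7 like Mac OS X"; older iPad
# user agents use "CPU OS 15_7". iPads in desktop mode send a Mac user agent
# and land in the "other" bucket.
IOS_VERSION = re.compile(r"(?:iPhone|CPU) OS (\d+)_")

def platform_bucket(user_agent):
    """Return 'iOS <major>' for iOS user agents, else 'other'."""
    match = IOS_VERSION.search(user_agent or "")
    return f"iOS {match.group(1)}" if match else "other"

def completion_by_platform(rows):
    """Map platform bucket -> (completed, started, completion_rate).

    Assumes hypothetical export columns 'user_agent' and 'completed' ("1"/"0").
    """
    counts = defaultdict(lambda: [0, 0])  # bucket -> [completed, started]
    for row in rows:
        bucket = platform_bucket(row.get("user_agent"))
        counts[bucket][1] += 1
        if row.get("completed") == "1":
            counts[bucket][0] += 1
    return {b: (done, total, done / total) for b, (done, total) in counts.items()}

rows = [
    {"user_agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 15_7 like Mac OS X)", "completed": "0"},
    {"user_agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 15_4 like Mac OS X)", "completed": "0"},
    {"user_agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_2 like Mac OS X)", "completed": "1"},
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "completed": "1"},
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "completed": "0"},
]
for bucket, (done, total, rate) in sorted(completion_by_platform(rows).items()):
    print(f"{bucket}: {done}/{total} completed ({rate:.0%})")
```

A bucket sitting at 0% while its neighbors look normal is the cue to open Web Inspector on that exact OS version.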

Bad Gender Logic and the Multiple-Select Trap

Some survey tools (especially the ones that preload panels rather than sourcing participants fresh) handle gender options lazily. I ran a project where we wanted to let folks self-identify across a range of options (not just binary, surprise surprise). The platform’s default radio-button question forced single-select, which broke compatibility with our branching logic, where respondents could opt into multiple communities.

“If we detect non-exclusive gender data, we assign ‘Other/Prefer not to say’ for segmenting purposes.”

That’s real, pulled from a support ticket. There’s no way around it unless you use custom variables and manually pipe selections into scoring logic further down the line, which adds at least three extra steps per question. Also, some platforms set internal flags on profiles marked ‘Other’ that exclude them from certain demographic pool exports. Again, undocumented.
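If you do end up piping selections yourself, the core of the workaround is refusing to collapse a multi-select answer into one label. A minimal sketch, assuming the export stores each multi-select answer as one delimited string (the `;` delimiter and the option labels below are hypothetical): each option becomes its own yes/no flag, so a respondent who picks two identities counts in both segments instead of being filed under ‘Other/Prefer not to say’.

```python
def explode_multiselect(rows, column, options, delimiter=";"):
    """Return copies of rows with one boolean column per option,
    named '<column>:<option>'.

    Assumes multi-select answers are exported as a single delimited string;
    the delimiter and option labels are hypothetical. Selections that don't
    match a known option go to '<column>:unmapped' for review instead of
    being dropped.
    """
    out = []
    for row in rows:
        raw = row.get(column) or ""
        picked = {p.strip() for p in raw.split(delimiter) if p.strip()}
        new_row = dict(row)  # leave the original row untouched
        for option in options:
            new_row[f"{column}:{option}"] = option in picked
        new_row[f"{column}:unmapped"] = sorted(picked - set(options))
        out.append(new_row)
    return out

OPTIONS = ["Woman", "Man", "Nonbinary", "Self-describe"]
rows = [
    {"id": "1", "gender": "Woman; Nonbinary"},
    {"id": "2", "gender": "Man"},
    {"id": "3", "gender": ""},
    {"id": "4", "gender": "Genderfluid"},
]
for r in explode_multiselect(rows, "gender", OPTIONS):
    print(r["id"], [o for o in OPTIONS if r[f"gender:{o}"]], r["gender:unmapped"])
```

Segment on the flag columns downstream; the platform’s own single gender field never enters the branching logic.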

Demographic Blending When Users Opt Out of Providing Data

Here’s one that messed with my stats hard. A B2B survey targeting people “working in HR” with 5+ years’ experience showed perfect top-level metrics, but once I filtered by tenure, half my respondents had null entries or defaulted to ‘Industry: General Skilled Labor.’ What?

The system had auto-blended respondent metadata from global averages for country/IP clusters whenever respondents skipped certain questions. Instead of excluding them or asking again, it inferred. Not okay when you’re trying to segment by experience or job function. And it isn’t even disclosed in the export manifest unless you view advanced response metadata, which you can’t do unless you’re on a higher tier OR know the undocumented URL trick (append ?debug=1 to the campaign ID dashboard URL… yeah, that still works as of last week).
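Because the blending isn’t flagged in the standard export, a quick pass for the telltale patterns before trusting any segment can help: blank screener answers, and values that match whatever catch-all label the platform backfills. A minimal sketch; the column names in `REQUIRED` are hypothetical, and `SUSPECT_DEFAULTS` is meant to be filled from placeholders you actually see in your own exports (only ‘General Skilled Labor’ comes from the case above).

```python
# Hypothetical catch-all values seen in backfilled exports; extend per vendor.
SUSPECT_DEFAULTS = {
    "industry": {"General Skilled Labor"},
}
# Hypothetical columns that must hold a real answer for segmenting.
REQUIRED = ["tenure_years", "industry", "job_function"]

def inferred_fields(row):
    """Return the required fields that are blank or hold a known default."""
    suspect = []
    for field in REQUIRED:
        value = (row.get(field) or "").strip()
        if not value or value in SUSPECT_DEFAULTS.get(field, set()):
            suspect.append(field)
    return suspect

rows = [
    {"id": "1", "tenure_years": "7", "industry": "Human Resources", "job_function": "HRBP"},
    {"id": "2", "tenure_years": "", "industry": "General Skilled Labor", "job_function": "HR"},
]
clean = [r for r in rows if not inferred_fields(r)]
print(len(clean), "of", len(rows), "rows usable for tenure/industry segments")
```

Excluding flagged rows shrinks the sample, but it keeps the segments made of answers rather than averages.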

Adding Tracking Pixels to Survey Platforms: Just Barely Works

If you’re trying to track survey completion externally (say, firing a pixel to your ad platform when someone finishes the final step), prepare for some janky workarounds. Most platforms let you add JS on the last page, but you often can’t access the full window.location object or manipulate cookies, because of CSP headers or because your script runs inside a cross-origin or sandboxed iframe.

What actually worked for me

Here’s a setup that functions (roughly) across two of the more locked-down vendors:

<img src="https://your-ad-platform.com/pixel?event=completed&user={{user_id}}" alt="" style="display:none;" />

Be sure to test it in a Firefox private window *with* all tracking protections enabled, because that’s where I caught a bug: the tool cached the completion page, so the pixel only fired once per browser session. I had to force Cache-Control: no-store by routing the iframe through a short-lived AWS CloudFront distribution acting as a proxy.

Complete overkill. But it worked. Kind of.
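The CloudFront setup itself isn’t reproduced here, but the job the proxy does is small enough to sketch. The following is a minimal Python stand-in for the same idea, not the deployment described above: fetch the completion page from the survey platform, drop the upstream caching headers, and return it with `Cache-Control: no-store`. The upstream URL and port are hypothetical, it only handles GET, and upstream error statuses raise `HTTPError` unhandled.

```python
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

UPSTREAM = "https://survey.example.com"  # hypothetical survey-platform origin

# Headers that let a browser (or anything in between) reuse a cached copy.
CACHING_HEADERS = {"cache-control", "expires", "etag", "last-modified", "age", "pragma"}
# Hop-by-hop headers, Content-Length (recomputed below), and headers that
# send_response() already sets.
SKIP_HEADERS = {"connection", "keep-alive", "transfer-encoding",
                "content-length", "date", "server"}

def no_store_headers(upstream_headers):
    """Drop caching and hop-by-hop headers, then force Cache-Control: no-store."""
    dropped = CACHING_HEADERS | SKIP_HEADERS
    kept = [(name, value) for name, value in upstream_headers
            if name.lower() not in dropped]
    kept.append(("Cache-Control", "no-store"))
    return kept

class NoStoreProxy(BaseHTTPRequestHandler):
    """GET-only pass-through to UPSTREAM with caching disabled."""

    def do_GET(self):
        with urllib.request.urlopen(UPSTREAM + self.path) as resp:
            status = resp.status
            headers = no_store_headers(resp.getheaders())
            body = resp.read()
        self.send_response(status)
        for name, value in headers:
            self.send_header(name, value)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port=8080):
    """Run the proxy locally until interrupted."""
    HTTPServer(("127.0.0.1", port), NoStoreProxy).serve_forever()
```

Call `serve()`, point the iframe at `http://127.0.0.1:8080/` plus the completion page’s path, and the browser’s network panel should show `Cache-Control: no-store` on every load.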

Quick-Switch Targeting Tips (When You’re Burning Budget Fast)

If you realize an hour into the campaign that something’s wrong (like the wrong audience is flooding in), you don’t always have time to rebuild from scratch. These helped more than once on both Typeform and Qualtrics setups:

- Duplicate the campaign, then pause the original; never edit midstream. It’ll screw up the targeting snapshot.

- Use ZIP code and state filters together (not just city) in US targeting. It reduces weird overlaps at metro edges.

- Manually add pre-screening questions and disqualify respondents with logic; don’t rely on automated filters alone.

- If possible, insert a fake “identify the capital of [country]” question. Most bots fail this, and some real people too, but it’s revealing.

- Download raw metadata halfway through. Look for wildcard or placeholder strings in fields like birthday, ZIP/IP, or survey duration.

- If you’re using Facebook ad delivery on the front end, delay firing the link until after 10 seconds; bots hate the wait.

I ended one survey 40 minutes early because bounce times dropped under 5 seconds per response; the traffic had clearly turned synthetic.
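Two of those checks (speeders and the capital-city question) can be run from a mid-campaign export in one pass. A minimal sketch, assuming hypothetical columns `duration_seconds` and `capital_answer`, a hypothetical expected answer of “Paris”, and the 5-second floor from the anecdote above; tune all of these to your survey’s length and your vendor’s export. It flags individual rows and reports the flagged share among the most recent responses, which is the number to watch when deciding whether to pull the plug.

```python
def response_flags(row, expected_capital="Paris", min_seconds=5.0):
    """Return reasons a response looks low-quality or synthetic.

    Column names and the expected answer are hypothetical placeholders.
    """
    flags = []
    try:
        if float(row.get("duration_seconds") or "") < min_seconds:
            flags.append("speeder")
    except ValueError:
        flags.append("no duration")
    answer = (row.get("capital_answer") or "").strip()
    if answer.casefold() != expected_capital.casefold():
        flags.append("failed attention check")
    return flags

def recent_flagged_share(rows, window=50):
    """Share of the last `window` responses (in export order) with any flag."""
    recent = rows[-window:]
    if not recent:
        return 0.0
    return sum(1 for r in recent if response_flags(r)) / len(recent)

rows = [
    {"duration_seconds": "312", "capital_answer": "paris"},
    {"duration_seconds": "3.8", "capital_answer": "Paris"},
    {"duration_seconds": "290", "capital_answer": "Lyon"},
    {"duration_seconds": "4.1", "capital_answer": ""},
]
print(f"{recent_flagged_share(rows, window=4):.0%} of recent responses flagged")
```

Watching the share over a sliding window, rather than the campaign total, is what makes a sudden shift to synthetic traffic visible while there’s still budget left.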

The “Preferred Shopper Segment” Mystery

I once got a dataset where 100% of respondents were marked as “Preferred Shopper Segment D3.” No idea what that meant. No way to decode it. Support said it referenced external partnerships. After too much digging, someone from the engineering team offhandedly mentioned that segment D3 was shorthand for “bargain hunting parents aged 30–45 who clicked on two or more coupon campaigns prior to opt-in.”

So my “luxury skincare” feedback came from coupon-stackers, despite my checking the ‘household income over $100k’ box. The platform was algorithmically overriding my filters to match users with higher past engagement scores, and that internal scoring metric measures engagement, not spending power. None of this behavior appears in public release notes.
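A crude check that would have caught the D3 situation before anyone read a single verbatim: for each column, measure how much of the sample shares its single most common value. One opaque code covering the whole sample, or a screener answer that should vary but doesn’t, is a prompt to ask support what it means. A minimal sketch; the 90% threshold, the ignored `respondent_id` column, and the sample column names are arbitrary placeholders.

```python
from collections import Counter

def dominant_values(rows, threshold=0.9, ignore=("respondent_id",)):
    """Return {column: (value, share)} for columns where a single value
    covers at least `threshold` of rows."""
    if not rows:
        return {}
    findings = {}
    columns = {c for row in rows for c in row} - set(ignore)
    for column in sorted(columns):
        counts = Counter(row.get(column) for row in rows)
        value, n = counts.most_common(1)[0]
        share = n / len(rows)
        if share >= threshold:
            findings[column] = (value, share)
    return findings

rows = [
    {"respondent_id": str(i), "segment": "D3", "household_income": income}
    for i, income in enumerate(["<50k", "50-100k", ">100k", "<50k"])
]
print(dominant_values(rows))
# prints: {'segment': ('D3', 1.0)}
```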

Lesson: if you’re not buying access to fresh, filterable users, then you’re probably buying someone else’s idea of a good guess about who should see your questions.