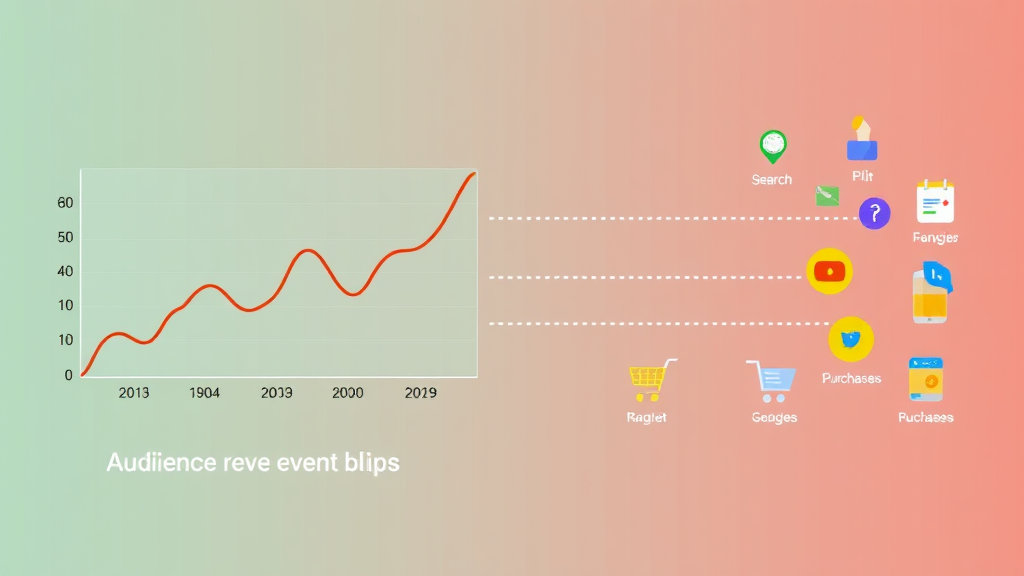

Connecting AdSense Revenue Blips to Actual Audience Behavior

The Irrational Calculus of Ads per Visit

There’s this myth (and I absolutely believed it for maybe a year) that more pageviews = more clicks = profit. Until you log in one day and see your RPM drop like a rock despite traffic holding steady. Cool, so apparently everyone stopped caring between yesterday and today? Not quite.

AdSense doesn’t scale linearly with traffic. It’s a messy soup of behavioral reactions, fill rates, auction chaos, and your reader’s mood swings. In May I saw sessions from my small but loyal Reddit thread readers shoot up, but my earnings? Flat. Turns out an audience that’s too familiar develops ad blindness fast, especially if your placements are obvious or repetitive.
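To see how steady traffic and a falling RPM can coexist, it helps to write the arithmetic down. This is a minimal sketch with made-up numbers: the `DayStats` shape and both sample days are assumptions for illustration, not real account data or an AdSense API.

```typescript
// Hypothetical daily totals; the field names are illustrative, not an AdSense API.
interface DayStats {
  pageviews: number;
  adClicks: number;
  earnings: number; // estimated earnings in your payout currency
}

// Page RPM: estimated earnings per 1,000 pageviews.
function pageRpm(day: DayStats): number {
  return day.pageviews === 0 ? 0 : (day.earnings * 1000) / day.pageviews;
}

// Page CTR: ad clicks per pageview, as a fraction.
function pageCtr(day: DayStats): number {
  return day.pageviews === 0 ? 0 : day.adClicks / day.pageviews;
}

// Made-up numbers: identical traffic, but a jaded audience clicks less.
const freshAudience: DayStats = { pageviews: 2000, adClicks: 30, earnings: 12 };
const jadedAudience: DayStats = { pageviews: 2000, adClicks: 12, earnings: 5 };

console.log(pageRpm(freshAudience), pageRpm(jadedAudience)); // 6 2.5
console.log(pageCtr(freshAudience), pageCtr(jadedAudience)); // 0.015 0.006
```

Same 2,000 pageviews on both days, yet RPM more than halves. Pageviews only set the denominator; everything interesting happens in the numerator.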

“You’re basically overfarming the same pasture. Even the cows get bored of chewing the same grass.”

What I didn’t notice for a long time: engagement drops when I publish about topics my readers care about but have already hashed out in the comments. It doesn’t matter that it’s a new post. If they’ve ranted about it already, they scroll through on autopilot and CTR tanks. Engagement is never just about timing; it’s topic fatigue combined with layout friction.

The Dangerous Comfort of Returning Users

Here’s where I got trapped: I thought high returning user counts meant I was doing something right. But high loyalty can be absolute death for in-page engagement, especially with AdSense. The regulars stop clicking unless something radical shifts. And AdSense doesn’t distinguish loyalty from lethargy.

What’s worse: responsive ads tend to blend in too well after repeat visits. Users mentally edit them out. So while the auto-optimizing placement tool keeps trying to “help” with better sizes, it actually ends up cementing ignorable patterns. I had one sidebar unit that always rendered a 300×250, never changed, and returning viewers never clicked it again after week one.

A trick that briefly worked (before it got creepy): I turned a reader’s first comment into a blog post title. That got attention and clicks, until they caught on. CTR spiked maybe 30-ish percent for a few posts, then fell right back when trust eroded. Still, kind of worth it for the data.

Chrome’s DevTools Ads Tab: Underused or Underbaked?

If you’re not using the Ads tab in Chrome DevTools, neither was I until I got desperate enough. It’s tucked away under the “More tools” menu, and it’s supposed to show visibility and performance data per ad request. Sounds great, right?

Yeah, except it feels… unfinished. Like Google got halfway through shipping something for media folks and got distracted by five internal reorgs. Sometimes it won’t load. Other times it falsely reports views as unmeasurable even though all ads are clearly visible. But when it does work, it’s useful for spotting alignment issues, like when a sticky footer div overlaps a lower MPU and silently kills its visibility score.
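The sticky-footer overlap is easy to check by hand once you have the two bounding boxes. Here is a rough sketch, assuming rectangles in viewport coordinates (the same shape `getBoundingClientRect()` returns); the `Rect` type, function names, and sample sizes are all hypothetical, and it ignores clipping by the viewport itself.

```typescript
// Plain rectangle in viewport coordinates.
interface Rect {
  left: number;
  top: number;
  width: number;
  height: number;
}

// Area of the intersection of two rectangles (0 if they do not overlap).
function overlapArea(a: Rect, b: Rect): number {
  const w = Math.min(a.left + a.width, b.left + b.width) - Math.max(a.left, b.left);
  const h = Math.min(a.top + a.height, b.top + b.height) - Math.max(a.top, b.top);
  return w > 0 && h > 0 ? w * h : 0;
}

// Fraction of the ad that is NOT hidden under the overlay.
function uncoveredFraction(ad: Rect, overlay: Rect): number {
  const area = ad.width * ad.height;
  if (area === 0) return 0;
  return 1 - overlapArea(ad, overlay) / area;
}

// Hypothetical 300x250 MPU whose bottom 150px sit under a sticky footer.
const mpu: Rect = { left: 20, top: 500, width: 300, height: 250 };
const stickyFooter: Rect = { left: 0, top: 600, width: 1024, height: 150 };

// Roughly 0.4: less than half the unit is actually showing.
console.log(uncoveredFraction(mpu, stickyFooter));
```

In a real page you would feed in the rects of the ad container and the sticky element after layout settles, and re-check on resize.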

Also: it catches lazy-loaded ads that don’t trigger properly on infinite scroll pages — especially inside React or Vue mounts. Nothing in AdSense’s dashboard tells you those aren’t firing. I only found out because DevTools flagged a bunch of undefined ad slots inside shadow DOM chains. No error — just… no ads.

AdSense Experiments That Go Rogue

Every few months I open up auto experiments again, thinking maybe the algorithm has improved. It hasn’t. Letting AdSense run layout experiments on your behalf is like letting a second cousin rearrange your furniture while you’re asleep. You’ll wake up with chairs taped to the ceiling.

The machine “learns” what your audience likes but never tells you why. Once it swapped my in-content ad for a full-width leaderboard at the top of the article. CTR dropped, but revenue didn’t — and I couldn’t reproduce it manually. That experiment ran for a week, and then auto-reverted when AdSense decided it was a failure. Still not sure how it measured that call.

Stuff They Don’t Tell You:

- Some experiments will prioritize ad load speed over placement. This can lower viewability but pass internally anyway.

- You have almost zero control over individual variations unless you build through Ad Manager instead of the standard AdSense UI.

- Even after stopping an experiment, changes sometimes persist in the cache for up to a week — especially for logged-in users.

- The “winning” variant isn’t always the highest-RPM one; sometimes it wins on session length plus bounce rate instead. I had to triple-check logs to notice this.

Getting more granular inside the experiments UI doesn’t help here. You’re better off running your own A/B tests with event listeners and lazy-loading toggles, then layering in tracked ad slots manually.
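The first piece of a do-it-yourself A/B setup is stable bucketing, so a returning reader never flips between layouts. This is a minimal sketch, not a full framework: the variant names, the `VARIANT_CONFIG` toggles, and the idea that you already have a persistent `visitorId` (cookie, localStorage, whatever) are all assumptions.

```typescript
// Jenkins one-at-a-time hash over UTF-16 code units; returns an unsigned 32-bit int.
function hashString(input: string): number {
  let hash = 0;
  for (let i = 0; i < input.length; i++) {
    hash += input.charCodeAt(i);
    hash += hash << 10;
    hash ^= hash >>> 6;
  }
  hash += hash << 3;
  hash ^= hash >>> 11;
  hash += hash << 15;
  return hash >>> 0;
}

type Variant = "control" | "lazy-ads";

// Hypothetical per-variant toggles; swap in whatever you are actually testing.
const VARIANT_CONFIG: Record<Variant, { lazyLoadAds: boolean }> = {
  "control": { lazyLoadAds: false },
  "lazy-ads": { lazyLoadAds: true },
};

// Same visitor + same experiment name always lands in the same bucket.
function assignVariant(visitorId: string, experiment: string): Variant {
  return hashString(experiment + ":" + visitorId) % 2 === 0 ? "control" : "lazy-ads";
}

const variant = assignVariant("visitor-123", "in-content-slot");
console.log(variant, VARIANT_CONFIG[variant].lazyLoadAds);
```

Including the experiment name in the hash keeps buckets independent across tests, so the same readers don’t end up as the guinea pigs every time.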

Titles That Spike CTR but Kill Trust

Here’s something no monetization blog tells you: That headline you think is clever? It might be murdering your time-on-page. I ran an A/B where Variant B had a more clickbaity title — something like “This One Mistake Is Killing Your Blog’s CPM.” CTR was higher… like noticeably. But the avg time-on-page collapsed.

You know why? Because readers felt tricked. Content was fine (actually better structured than the control), but expectation mismatch nuked the entire session’s value. They bounced fast, maybe indirectly told Google my page was crap, and next week’s ad fill rate subtly suffered.

It’s unconfirmed, but I’ve got a decent hunch that titles which boost bounce rates also poison your behavioral metrics, which AdSense does consider albeit opaquely. So ironically, gaming your title purely for ‘clicks’ can directly cost you revenue even with those extra hits. CTR isn’t sacred. Session quality matters more than we thought.
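One way to stop CTR from grading its own homework is to read it next to time-on-page for each headline variant. A small sketch, assuming you can export per-variant totals; the `HeadlineStats` fields and the sample numbers are invented for illustration.

```typescript
// Hypothetical per-variant totals from your own analytics export.
interface HeadlineStats {
  headlineViews: number;
  headlineClicks: number;
  sessions: number;
  totalSecondsOnPage: number;
}

function headlineCtr(s: HeadlineStats): number {
  return s.headlineViews === 0 ? 0 : s.headlineClicks / s.headlineViews;
}

function avgSecondsOnPage(s: HeadlineStats): number {
  return s.sessions === 0 ? 0 : s.totalSecondsOnPage / s.sessions;
}

// Flags the pattern described above: more clicks, but shorter visits.
function looksLikeBait(control: HeadlineStats, variant: HeadlineStats): boolean {
  return (
    headlineCtr(variant) > headlineCtr(control) &&
    avgSecondsOnPage(variant) < avgSecondsOnPage(control)
  );
}

// Made-up numbers for a plain title versus a clickbaity one.
const plainTitle: HeadlineStats = { headlineViews: 1000, headlineClicks: 40, sessions: 40, totalSecondsOnPage: 4800 };
const baitTitle: HeadlineStats = { headlineViews: 1000, headlineClicks: 70, sessions: 70, totalSecondsOnPage: 2800 };

console.log(avgSecondsOnPage(plainTitle), avgSecondsOnPage(baitTitle)); // 120 40
console.log(looksLikeBait(plainTitle, baitTitle)); // true
```

This only flags the direction of the change. It says nothing about significance, so treat a `true` as a reason to look closer, not a verdict.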

Reader Comments as Keyword Alerts

So this one… I didn’t expect. I looked into reader comments (on a migrated Ghost install, which was a whole other problem) and noticed most of my long-tail traffic spikes happened after someone angrily corrected me about a side issue in the comments. Same pattern: off-topic correction → 3-day delay → small organic bump from a new keyword cluster.

Apparently Google indexes those points sooner if they’re repeated or clicked into via anchor links. Some commenter argued with me about Cumulative Layout Shift vs. First Input Delay. Then, a week later, I got traffic specifically for “CLS vs FID impact on AdSense.” I didn’t optimize for that. They did, unintentionally.

If your platform doesn’t render comments server-side, you’re likely missing out. That’s one of those undocumented SEO tailwinds Google doesn’t publish. You don’t control it, but you can affect it by… being wrong. Strategically wrong.

When Segmenting by Source Just Confuses You More

I tried using AdSense’s “Ad balance” and Performance Reports to narrow engagement down by source: Direct, Organic, Referral. Sounds smart, yeah? Except the “Direct” bucket gets muddied whenever a user comes from Telegram, Discord, or the embedded browser views inside Twitter on mobile.

So you think you’re seeing loyal returning traffic — but actually, that’s new audience entering through app stubs. They behave differently. They miss newsletter signup banners. They scroll faster. They often skip the top ad (especially in Facebook’s webview). Segment-level monetization insights become useless if you include those ambient referrers as “Direct.”

Also worth noting: those in-app browsers block more than you’d guess. I had 400 sessions from WeChat — 0 ad impressions. Confirmed via my custom event logger; the iframe didn’t bind properly. No errors, just silence.
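If you log user agents yourself, you can peel some of that ambient traffic out of “Direct” before you segment. A rough sketch follows. The token list is an assumption based on substrings commonly seen in in-app browser user agents, so verify it against your own logs; Telegram and Discord in particular often don’t identify themselves, and this won’t catch them.

```typescript
// Substrings commonly seen in in-app browser user agents. Treat this list as an
// assumption to check against your own logs; apps change these without notice.
const IN_APP_TOKENS: Array<{ token: string; app: string }> = [
  { token: "FBAN", app: "facebook" },
  { token: "FBAV", app: "facebook" },
  { token: "Instagram", app: "instagram" },
  { token: "MicroMessenger", app: "wechat" },
  { token: "Twitter", app: "twitter" },
];

// Returns the matching app name, or null if no known token is present.
function detectInAppBrowser(userAgent: string): string | null {
  for (const entry of IN_APP_TOKENS) {
    if (userAgent.indexOf(entry.token) !== -1) return entry.app;
  }
  return null;
}

// Only re-labels sessions the report called "direct"; other sources pass through.
function classifySource(reportedSource: string, userAgent: string): string {
  if (reportedSource !== "direct") return reportedSource;
  const app = detectInAppBrowser(userAgent);
  return app === null ? "direct" : "in-app:" + app;
}

// Shortened, illustrative user agent strings.
const wechatUa = "Mozilla/5.0 (Linux; Android 13) AppleWebKit/537.36 Chrome/120.0 Mobile Safari/537.36 MicroMessenger/8.0";
const chromeUa = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/120.0 Safari/537.36";

console.log(classifySource("direct", wechatUa)); // in-app:wechat
console.log(classifySource("direct", chromeUa)); // direct
```

Once the in-app sessions have their own label, you can compare their ad impressions per session against true direct traffic and see how much silence you’re getting.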

The Shady Magic of Micro Polls

I threw a one-question poll into a post header once just to break up the wall of text. No serious intent. Simple radio buttons: “Do you use an ad blocker?”

That post made more in a day than others with higher visits. Why? I think the interactive focus delayed bounce behavior just long enough — maybe half a second — for first ad viewability thresholds to cross. Engagement recorded. Ads counted. Sessions marked valuable. RPM did a weird little hop.
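The timing hunch can be made concrete. The commonly cited MRC guideline for display ads is at least 50% of pixels in view for one continuous second; whether AdSense applies exactly that rule internally is an assumption here, and the `VisibilitySample` shape is hypothetical. The sketch checks a series of visibility samples (sorted by time) against a threshold like that.

```typescript
// One measurement of how much of the ad was on screen at a given moment.
interface VisibilitySample {
  tMs: number;             // milliseconds since page load
  visibleFraction: number; // 0..1
}

// True if the ad stayed at or above minFraction for a continuous run of minMs.
// Assumes samples are sorted by tMs.
function meetsViewability(
  samples: VisibilitySample[],
  minFraction: number = 0.5,
  minMs: number = 1000
): boolean {
  let runStart: number | null = null;
  for (const s of samples) {
    if (s.visibleFraction >= minFraction) {
      if (runStart === null) runStart = s.tMs;
      if (s.tMs - runStart >= minMs) return true;
    } else {
      runStart = null; // visibility broke, so the clock restarts
    }
  }
  return false;
}

// A reader who bounces at 800ms versus one the poll holds until 1200ms.
const quickBounce: VisibilitySample[] = [0, 200, 400, 600, 800].map(t => ({ tMs: t, visibleFraction: 1 }));
const pollDelayed: VisibilitySample[] = [0, 200, 400, 600, 800, 1000, 1200].map(t => ({ tMs: t, visibleFraction: 1 }));

console.log(meetsViewability(quickBounce)); // false
console.log(meetsViewability(pollDelayed)); // true
```

A few hundred milliseconds of extra dwell is the whole difference between the two sessions, which is why a throwaway poll can move the needle.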

Things I noticed:

- Even if users don’t vote, the poll script preloads interaction listeners that seem to affect layout delay.

- A small but vocal group disable extensions just to participate — giving you a clean session where there wouldn’t have been one.

- If you change poll wording to mirror trending language, even jaded readers slow down to read. That’s enough for higher ad initiation.

“The poll wasn’t for them. It was for AdSense’s sensors.”

It’s exploitation-adjacent, but then again, that’s this whole business model, isn’t it?

That Time My RPM Dropped Because I Fixed a Bug

One Friday night I stayed up too long and finally fixed a CSS issue with a margin collision that had been double-stacking `<ins>` AdSense containers. Felt great: the layout was cleaner, with less wasted white space. But then revenue dropped like a rock.

Apparently, some of my supposed “above the fold” impressions had been benefiting from the double render: an invisible extra ad request was firing (unset height, collapsed) and receiving fill from low-priority inventory. Not visible, not click-friendly — but counted.

By correcting the stack, I improved UX… and unknowingly removed ghost ad loads that made me money. This isn’t something AdSense flags. There’s no log note saying “hey we were filling a div you accidentally had wedged under your nav bar.” Just… less revenue and your analytics don’t tell you why.
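Since nothing flags this for you, the cheapest defense is to audit your own slots before and after a layout change. A minimal sketch, assuming you can snapshot each ad container’s rendered size and whether the ad script touched it; the `SlotSnapshot` shape and the sample IDs are hypothetical.

```typescript
// Snapshot of one ad container after the page has settled.
interface SlotSnapshot {
  id: string;
  width: number;
  height: number;
  requested: boolean; // did the ad script process this container?
}

// Slots that fired a request but occupy no visible area.
function findGhostSlots(slots: SlotSnapshot[]): string[] {
  return slots
    .filter(s => s.requested && s.width * s.height === 0)
    .map(s => s.id);
}

// Made-up page state resembling the double-stacked header described above.
const snapshot: SlotSnapshot[] = [
  { id: "header", width: 728, height: 90, requested: true },
  { id: "header-dup", width: 728, height: 0, requested: true },
  { id: "sidebar", width: 300, height: 250, requested: false },
];

console.log(findGhostSlots(snapshot)); // [ 'header-dup' ]
```

In a browser you could build the snapshots from `document.querySelectorAll("ins.adsbygoogle")` plus `getBoundingClientRect()`. Using an attribute such as `data-adsbygoogle-status` as the “requested” signal is an assumption worth verifying on your own pages. Run it before and after a CSS fix and diff the two lists, so at least the revenue change has a suspect.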

Fixed code, perfectly clean. Lower RPM. You wouldn’t find that from any audit tool unless you knew exactly what to grep for.