Building SEO-Friendly URLs Without Breaking Your Stack

Why slugs matter more than most people think

People obsess over keywords in titles, H1s, maybe meta descriptions if they’re feeling optimistic. But URL slugs? Often an afterthought. I’ve had clients with URLs like /page.aspx?id=873841 still wondering why their bounce rate looks like a ski jump chart. That kind of structure tells zero to Google and even less to a person.

Forget folk wisdom — Google has straight-up confirmed multiple times that words in URLs help. Short URLs with readable words (ideally 2–5) tend to get more clicks in the search results. And yeah, the URL doesn’t outrank your content or backlinks, but I’ve fixed URL structures on stale blog archives and watched traffic tick up within weeks. That’s not correlation — that’s basic crawl optimization.

Don’t include the date unless you really need to

I used to publish blog posts with /2022/11/15/title-of-post because WordPress defaulted to that, and I didn’t want to fight the permalinks config during launch. Bad call. Even if the content aged well, that URL screamed “expired” to any user who glanced at it. And Google doesn’t love stale timestamps either.

Unless you’re running something where chronology matters (newsrooms, financial sites, that one friend whose blog is mostly open letters to his exes), drop the date. You can always keep a published date in the post metadata or schema markup instead — no need to bake it into the link forever.

“URLs are supposed to be permanent. Don’t lock ephemeral data into them.”

The hidden chaos of automatic slug generation

Most CMSs will auto-generate slugs from the title. Great until you end up with Unicode garbage, weird dashes, or a dozen hyphenated filler words. I once had a Hugo build fail silently because a slug had smart quotes in it — looked fine in the editor, but the output URL choked on rendering. Took me 45 minutes and a diff session to even spot it.

Here are a few landmines:

- Redundant stopwords — e.g.,

/the-ultimate-guide-to-the-best-of-the-top - Stripped trailing numbers — versioned posts like

/feature-v2silently became/feature-v - CMS insertions like

-2when slugs conflict — had one where/postturned into/post-7

Manually editing your slug might feel like peeing on a fire hydrant just to mark it, but do it anyway.

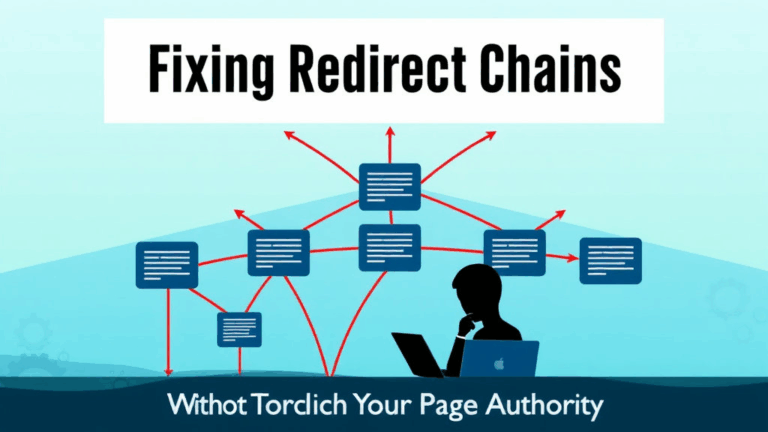

Redirect chains will quietly ruin your SEO

This one’s not immediately visible unless you’re looking at crawl logs or Lighthouse reports, but oh boy does it matter. If you’ve changed your slug structure more than once, you’re likely sitting on a stack of 301 hopscotch that’s bleeding crawl budget.

Cloudflare logs tipped me off one time — a single product URL was being counted as 4 distinct requests due to redirects: /products/old-name → /products/new-name → /store/new-name → /shop/product-name. All 301s, all technically fine, but you only get so many free redirect passes before bots treat it as a soft timeout.

Quick check:

curl -I https://yourdomain.com/page-you-care-aboutIf the headers spit out more than one 301 or 302 before hitting a 200, clean it up.

Slashes, trailing and otherwise, are not harmless

Believe it or not, /blog and /blog/ are not the same URL, depending on how strict your server config is. Apache, NGINX, and even some CDNs treat them differently. And worse, search engines sometimes do too.

One case screwed me with multilingual pages. Every locale had a different suffix structure, and CDN cache keys were based literally on the full URL. So /es/blog and /es/blog/ cached separately, even if they resolved to the same rendered page. Googlebot saw both. Traffic split. Ranking muffled. Took a month of log monitoring to catch it — Google’s crawl behavior showed both paths getting indexed because internal links weren’t consistent.

You can force a trailing slash policy, but you’ve got to pick a team and stick with it. Redirect the other version hard, don’t just let both float.

The case for keeping URLs short even when nobody sees them

Sure, most people are tapping on push notifications or syndication links these days, which means they rarely look at the URL. But that doesn’t mean you get to start acting like your slugs can include your entire internal monologue.

I had a dev once commit a post with a slug that went /how-to-improve-performance-when-your-javascript-is-slow-because-you-didnt-use-debounce. It was descriptive, sure, but looked like a StackOverflow title glued onto a page path.

Search engines still parse these things. And shortened URLs get more shares. Also, every time you embed that link in something else — email, Slack, not even social — the slug becomes a point of user trust or confusion. No clue? No click.

Canonical tags won’t save your sloppily duplicated URLs

A lot of SEO decks love parroting “just use rel=canonical” when you’ve got duplicate paths. That’s fine in theory, but in practice — surprise — Google doesn’t always honor it. Canonical is a hint, not a command. And if your internal links contradict it, the bot picks what it wants.

Had a Shopify store where faceted navigation created infinite slug permutations: /shirts?color=blue, /shirts?sort=popular&color=blue, /shirts?page=2&color=blue. All pointed to the same canonical /shirts, but Google still indexed dozens. Why? Because the sitemap and internal nav used the longer ones, and the canonical lived only in a tag near the end of the HTML body. Lazy parsing wins again.

The fix wasn’t just slapping a canonical tag — we had to noindex bad versions, rewrite nav links, and tighten up parameter handling via Search Console.

Don’t structure URLs around marketing fads

You don’t want to be the person who has to 301 every URL from /ai-tools-2023 to /automation-tools eighteen months later. I’ve had to do exactly that after a client found themselves with 300+ URLs mentioning “crypto” in the slug — suddenly unwelcome after their quiet pivot to “Web3 adjacent” whatever-that-meant.

Trend-chasing in your URL structure just leads to expensive refactors. Your slugs are supposed to be the most stable part of your stack, not high-velocity content scraps. Stick to concepts, verticals, or product names you’d be comfortable seeing six years from now.

CDNs and caching behaviors break when URLs aren’t consistent

If you’re using Cloudflare, Fastly, or any serious CDN, every unique URL path is a separate cache key. Cool. Until marketing renames a slug to be “more exciting” and traffic tanks because the CDN now sees it as a cold request.

One time I thought our React front-end was overloaded. Turns out the CDN wasn’t caching anything because our blog builder would regenerate slugs every time the H1 changed a word. The URLs were technically different, the origin was getting hammered, and Googlebot was seeing dozens of fractured pages with low coherence.

Fix was blunt but effective: lock down slug generation for published URLs, freeze by page ID, and build a redirect layer that tracks previous versions per ID — not per content string. Saved us like 80% in origin traffic once it started working.