Building SEO Dashboards That Survive GA4’s Weird Defaults

Picking Your GA4 Dimensions Before It’s Too Late

GA4 doesn’t think the same way UA did, particularly when it comes to how dimensions and metrics play together. If you’re pulling SEO performance data based on landing pages, user acquisition, or even query strings, you’ve gotta define those dimensions during the event collection phase. Late binding them for dashboarding doesn’t always work—you’ll run into the classic “this dimension and metric can’t be paired” error more than once.

One time, I tried building a client-facing dashboard showing organic traffic per blog category. Turns out GA4 doesn’t actually expose category unless you’re capturing it via custom parameters or page path parsing. Not even available in default GA4 page reports. Had to fall back on a Looker Studio regex band-aid that barely held. Don’t recommend.
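For the page path parsing route, here's a minimal Python sketch. It assumes a hypothetical URL layout of /blog/<category>/<post-slug>; the pattern and the `blog_category` name are illustrations, not anything GA4 provides, so adjust them to whatever your CMS actually emits.

```python
import re

# Hypothetical layout: /blog/<category>/<post-slug>. Adjust to the real CMS structure.
CATEGORY_PATTERN = re.compile(r"^/blog/([^/?#]+)/[^/?#]+/?")


def blog_category(page_path: str) -> str:
    """Return the category segment of a blog path, or a fallback label."""
    match = CATEGORY_PATTERN.match(page_path)
    return match.group(1) if match else "(uncategorized)"
```

Anything that doesn't fit the pattern lands in a fallback bucket, which at least makes the gaps visible on the chart instead of silently dropping rows.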

“The dimensions available in Explorations aren’t guaranteed to behave the same way in Data API pulls.” I heard that from a Google Analytics Solutions Engineer off the record during a call once. They were not wrong.

GA4 vs Looker Studio Field Names Will Break Your Brain Eventually

Looker Studio makes you feel like you’re losing your mind the first time you try to filter by medium = organic, and it just… doesn’t match anything. Then you realize that GA4 names it “Session source / medium” as one field, not two. And depending on how you’re ingesting the dataset, it gets tokenized differently. No clean mapping table I’ve found covers all cases.

If you’re using the native GA4 connector, try doing a calculated field like:

REGEXP_EXTRACT(Session source / medium, "/ (.*)$")

The space after the slash keeps the captured medium from starting with a stray space. That one little expression saved me hours down the road when trying to segment organic campaigns from referral garbage.
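If you're cleaning the data outside Looker Studio, the same split can be sketched in Python. This assumes the combined value always uses " / " as the separator (as in "google / organic"); `split_source_medium` is a made-up helper name.

```python
def split_source_medium(value: str) -> tuple[str, str]:
    """Split a combined "source / medium" value into (source, medium)."""
    # Split on the last " / " so a slash inside the source does not confuse things.
    source, sep, medium = value.rpartition(" / ")
    if not sep:
        # No separator found: treat the whole value as the source.
        return value.strip(), ""
    return source.strip(), medium.strip()
```

Filtering on the second element then behaves like the `medium = organic` filter you wanted in the first place.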

Also, Looker Studio has this delightful bug where if you rename a blended field mid-workflow, it’ll just silently unhook it from the charts and not tell you. So you think the charts are loading stale data—it’s actually just dead connections from renamed fields. Good stuff.

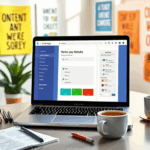

Client-Friendly Widgets That Actually Survive the Month

Not every client cares about impressions versus indexed pages versus crawl stats. But they do freak when bounce rate (now: engagement rate’s awkward cousin) dips by 3 points and no one proactively explained why. So I ended up building a bare-minimum survival dashboard that sits on top of GA4 and Search Console merged via a BigQuery cron task.

- Top landing pages by engagement time (filtered for medium = organic)

- CTR per query keyword, direct from Search Console, merged to GA4 session count

- Last 30 days vs previous 30 trendline for indexed pages (via Screaming Frog API)

- Average load time (sourced from Chrome UX Report, tucked in via BigQuery)

- Real delta in user country—flagging unexpected surges outside the main market

- Technical SEO issues pulled from Sitebulb exports attached as download buttons

Nothing fancy, but the instinct is to over-design when all they want to know is “why is this down?” and “do I need to care?”

Page Path Shenanigans in GA4 Reporting

GA4 chopped up the old Page dimension into Page path, Page title, and a few hybrid edge cases that sometimes get treated as events. If your CMS injects UTM-like params into the path for variant testing—like /blog/post-about-dashboards?version=b—GA4 flattens some of these in standard reports but preserves the full thing in Explorations or BigQuery exports.

The inconsistent behavior: in GA4 UI you’ll see them coalesced. In BigQuery exports, they’re separate rows. This matters if you’re doing page-level SEO analysis on split test content. I had to write a deduplication query with a window function over normalized paths. Felt overkill for what should’ve been “show me page views per article”.

Ah, and if Auto-Tagging is turned on in Google Ads, GA4 sometimes logs those gclid params right into the stored page path. Which… permanently pollutes the report unless you rewrite on ingest.
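Here's a rough Python sketch of the normalize-then-aggregate idea. It's a plain dictionary rollup, not the window-function query I actually wrote, and the `NOISE_PARAMS` list is an assumption you'd tune per site.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit

# Hypothetical list of parameters that should not create separate page rows.
NOISE_PARAMS = {"gclid", "version"}


def normalize_path(raw: str) -> str:
    """Drop noise parameters from a page path, keeping any other query params."""
    parts = urlsplit(raw)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NOISE_PARAMS
    ]
    path = parts.path or "/"
    return f"{path}?{urlencode(kept)}" if kept else path


def page_views_by_article(rows):
    """Sum views per normalized path. `rows` is an iterable of (path, views)."""
    totals = Counter()
    for raw_path, views in rows:
        totals[normalize_path(raw_path)] += views
    return dict(totals)
```

Running the same normalization on ingest is one way to keep gclid-polluted paths from ever reaching the report.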

Building a Stable GA4 x Search Console Merge (Yes, It Sucks)

Neither source hands you complete data: Search Console drops anonymized queries, and GA4 samples or thresholds some reports. But the taste of this soup gets worse when you try to align their time granularity. GA4 days follow the property’s reporting timezone. Search Console buckets its daily data by Pacific Time day. Shift happens. And it hits hardest when you’re trying to compare SEO traffic growth from week to week on a regional campaign.

The approach that worked best (read: least wrong):

- Export both to BigQuery on a scheduled basis.

- Create a rolling 32-hour buffer so Search Console’s Pacific Time days are matched to GA4’s property-timezone days.

- Do key joins only on canonical URLs, stripped of protocol and trailing slashes (yes that one bit me once).

- Don’t try to match Clicks from GSC to Sessions from GA4. Just trend them separately and annotate big divergences.
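The two fiddly bits of that list, the URL join key and the day-boundary overlap, can be sketched in Python roughly like this. Dropping the query string and fragment and lowercasing the host are my assumptions on top of "strip protocol and trailing slashes". The day helper uses fixed UTC offsets, so it ignores DST. Both function names are hypothetical.

```python
from datetime import date, datetime, time, timedelta, timezone
from urllib.parse import urlsplit


def canonical_key(url: str) -> str:
    """Join key: lowercased host plus path, no protocol or trailing slash.

    Assumption: query string and fragment are dropped as well.
    """
    parts = urlsplit(url.strip())
    return f"{parts.netloc.lower()}{parts.path.rstrip('/')}"


def overlapping_source_days(
    local_day: date, local_offset_hours: float, source_offset_hours: float
) -> list[date]:
    """List the source-side calendar days that overlap one local reporting day.

    Offsets are fixed hours from UTC (an assumption: no DST handling).
    """
    local_tz = timezone(timedelta(hours=local_offset_hours))
    source_tz = timezone(timedelta(hours=source_offset_hours))
    start = datetime.combine(local_day, time.min, tzinfo=local_tz)
    end = start + timedelta(days=1) - timedelta(microseconds=1)
    first = start.astimezone(source_tz).date()
    last = end.astimezone(source_tz).date()
    return [first] if first == last else [first, last]
```

When the second function returns two dates, that local day straddles a boundary on the other side, which is exactly the case the buffer exists to absorb.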

One wild thing I noticed: a client’s product pages kept showing fewer impressions in GSC than blog pages with far lower rankings. Turned out many of the product pages were behind an interstitial UX element that fired a client-side redirect after a few ms—Googlebot hated it and dropped indexing frequency.

Making Custom Metrics Work Without Explaining Data Layers

You can create derived SEO metrics like Revenue per Indexable Visit—but unless you’re already feeding ecommerce events cleanly into GA4 (lol), you’re gonna end up with half your metrics being null for like a third of traffic sources. Also, GA4’s calculated metrics suck if you’re expecting cross-chart portability.

I ended up tricking it with a dual data source setup in Looker Studio. Pull the revenue metrics from imported ecommerce events, join them on user_pseudo_id via BigQuery, and attach the result back in as a dimension. Not technically supported as a live blend, but if you cache the merged table as a View, Looker Studio doesn’t complain.

I spent an entire Thursday yelling at the screen because my Revenue / Organic Visit chart showed zero across the board—turns out GA4 event parameters and user properties don’t persist across some automatically grouped sessions. Just gone. No warning. No fallback. Had to stitch it from raw event logs manually.
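For a sense of what that stitching involves, here's a minimal Python sketch over already-flattened event rows. The field names (`user_pseudo_id`, `ga_session_id`, `event_name`, `medium`, `revenue`) describe a hypothetical flattened shape, not the raw export schema, and the rule "first non-empty medium wins for the session" is my assumption.

```python
from collections import defaultdict


def revenue_per_organic_session(events):
    """Average purchase revenue across sessions whose medium is "organic".

    `events` is an iterable of dicts in a hypothetical flattened shape.
    """
    sessions = defaultdict(lambda: {"medium": None, "revenue": 0.0})
    for event in events:
        key = (event["user_pseudo_id"], event["ga_session_id"])
        session = sessions[key]
        # Carry the first non-empty medium across every event in the session.
        if session["medium"] is None and event.get("medium"):
            session["medium"] = event["medium"]
        if event["event_name"] == "purchase":
            session["revenue"] += event.get("revenue") or 0.0
    organic = [s for s in sessions.values() if s["medium"] == "organic"]
    if not organic:
        return 0.0
    return sum(s["revenue"] for s in organic) / len(organic)
```

The point is that the purchase event itself may carry no medium at all, so the attribution has to be borrowed from another event in the same session.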

Preventing Client Panic With Broken Chart Detection

The easiest way to lose client trust is to let a dashboard stay broken for more than 24 hours. I now run a headless browser that screenshots the Looker Studio view twice a day, diffs each shot against a previously saved image, and pings me on Slack when the pixel delta suggests charts have gone missing entirely. Ridiculous? Yup. Effective? Also yup.
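The diffing step can be sketched in plain Python like this. It assumes both screenshots are already decoded into equal-length flat sequences of RGB tuples (the screenshot capture and the Slack ping are left out), and the 25% threshold is a made-up starting point, not a tested value.

```python
def changed_fraction(before, after) -> float:
    """Fraction of pixels that differ between two equal-sized screenshots."""
    if len(before) != len(after):
        raise ValueError("screenshots must have the same number of pixels")
    if not before:
        return 0.0
    changed = sum(1 for old, new in zip(before, after) if old != new)
    return changed / len(before)


def looks_broken(before, after, threshold: float = 0.25) -> bool:
    """Flag the dashboard when more than `threshold` of the pixels changed."""
    return changed_fraction(before, after) > threshold
```

A chart collapsing into a grey error box changes a big contiguous chunk of pixels, so even a crude ratio like this tends to catch it; small day-to-day data movement stays under the threshold.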

There’s one failure mode that gets missed: a service account’s BigQuery access can break silently if a project-level role changes higher up the IAM chain. No log, no notice. Just no data in Looker. If that coincides with a client review call, enjoy the awkward silence.

I now run this hacky script weekly:

gcloud iam service-accounts keys list --iam-account [svc]@[project].iam.gserviceaccount.com

If there are fewer than two active keys, or one is older than 90 days, I rotate manually and re-auth Looker Studio. It’s not elegant, but neither is explaining production failure because of security policy drift.
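That rule of thumb is easy to automate. Here's a minimal Python sketch, assuming you run the same command with `--format=json` and parse the output into a list of dicts. The `validAfterTime`, `validBeforeTime`, and `disabled` field names are what I'd expect in that output, so check them against your own before trusting this.

```python
from datetime import datetime, timedelta, timezone

MAX_KEY_AGE = timedelta(days=90)
MIN_ACTIVE_KEYS = 2


def _parse_time(value: str) -> datetime:
    # Timestamps are assumed to look like "2024-05-01T00:00:00Z".
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def needs_rotation(keys, now: datetime) -> bool:
    """True if there are too few active keys or any active key is too old."""
    active = [
        key
        for key in keys
        if not key.get("disabled", False)
        and _parse_time(key["validBeforeTime"]) > now
    ]
    if len(active) < MIN_ACTIVE_KEYS:
        return True
    return any(now - _parse_time(key["validAfterTime"]) > MAX_KEY_AGE for key in active)
```

Pass in `datetime.now(timezone.utc)` for `now` in a real run; keeping it as a parameter just makes the check easy to test.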

Getting Query Keywords Into Your Dashboard Without Begging for Search Console API

Some clients don’t want to give you Search Console API access. Or they have 5 properties split across subdomains and can’t even remember which covers what. What’s odd is that GA4 does let you capture site search queries, if you configure it as a custom parameter on page view or search events. But it won’t capture the keywords people typed into an external search engine.

But here’s what tripped me up: when I did wrangle GSC into BigQuery, I realized that query strings containing URL-encoded or non-breaking spaces were silently getting dropped. You’d see “how%20to%20optimize” in some rows, but “how\u00a0to optimize” (with a literal non-breaking space) in others. Decoding failed in standard SQL queries unless you added:

REPLACE(REPLACE(query, '\u00a0', ' '), '\u200b', '')

That’s the stuff that never makes it into the docs but will tank any keyword-rollup chart you try to ship.
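If the cleanup happens before the data reaches BigQuery, the same idea can be sketched in Python. The percent-decoding and whitespace-collapsing steps are my additions on top of the two replacements above, and `normalize_query` is a hypothetical helper name.

```python
from urllib.parse import unquote


def normalize_query(query: str) -> str:
    """Decode percent-escapes, then neutralize invisible characters."""
    decoded = unquote(query)
    # Non-breaking space becomes a normal space; zero-width space is removed.
    cleaned = decoded.replace("\u00a0", " ").replace("\u200b", "")
    # Collapse any runs of whitespace left behind.
    return " ".join(cleaned.split())
```

With this in place, the encoded and raw variants of a query roll up into the same row instead of splitting the keyword chart.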