Avoiding the Pitfalls of Google AdSense Site Navigation Errors

When Google Marks Your Navigation as Inadequate

I got slapped with the “site navigation” policy violation once because I thought a hamburger menu and a back link in the footer were enough. Google disagreed. Fast-forward two hours of combing through their maddeningly vague explanation, and I realized they’re not talking about navigation in a design sense; they’re talking about crawlable, click-accessible, predictable pathways. If your layout feels like a choose-your-own-adventure maze, that’s a problem.

What’s worse, their bot sometimes flags single-page apps or lazy-loaded sections as non-navigable. Even if users can literally navigate them just fine client-side, that JavaScript loading delay can make AdSense think the user experience is broken. Their crawler doesn’t wait for your dropdowns to populate.

“We weren’t able to find your site’s main content.” —actual quote from a policy violation email where the entire homepage was built as a React shell loading chunks after hydration.

Header and Footer Links Actually Matter

I once thought sticky headers were enough. Turns out, AdSense (and to an extent, Google Search) really wants a clear set of visible links up top and bottom. Keyword there: visible.

- Sticky navs that load after interaction? Nope.

- Expandable footer menus that collapse on mobile by default? Risky.

- Anchor tags with display: none? You’re just taunting them.

If your nav and footer don’t expose key sections of your site (Homepage, About, Contact, Privacy, etc.) in plain sight, get ready for warnings. URLs need to be reachable through clickable links, not just via sitemap.xml or internal crawling logic.
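You can check this without eyeballing every template. Pull the link targets out of your header and footer markup and diff them against the pages you expect to expose. Here's a rough sketch using Python's standard-library html.parser.

- The required paths and the sample markup are made up.
- It only notices links hidden with an inline display: none or a hidden attribute.
- Anything hidden by a stylesheet, a media query, or JavaScript slips past it.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Void elements never wrap children, so they are not pushed on the stack.
VOID_TAGS = frozenset("area base br col embed hr img input link meta source track wbr".split())


class VisibleLinkCollector(HTMLParser):
    """Collect hrefs from <a> tags not hidden by an inline style="display: none"
    or a hidden attribute (on the tag itself or any ancestor).
    External CSS, media queries, and JS-driven visibility are NOT evaluated."""

    def __init__(self):
        super().__init__()
        self.stack = []  # (tag, effectively_hidden) for each open element
        self.links = []

    @staticmethod
    def _hides(attrs):
        attr_map = dict(attrs)
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        return "hidden" in attr_map or "display:none" in style

    def handle_starttag(self, tag, attrs):
        inherited = bool(self.stack) and self.stack[-1][1]
        hidden = inherited or self._hides(attrs)
        href = dict(attrs).get("href")
        if tag == "a" and href and not hidden:
            self.links.append(href)
        if tag not in VOID_TAGS:
            self.stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerates unclosed children.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break


def missing_nav_links(html, required_paths):
    """Return the required paths with no visible <a href> in the markup.
    Only the URL path is compared; the host is ignored."""
    collector = VisibleLinkCollector()
    collector.feed(html)
    collector.close()
    found = {urlsplit(h).path.rstrip("/") or "/" for h in collector.links}
    return [p for p in required_paths if (p.rstrip("/") or "/") not in found]


# Hypothetical header/footer markup.
sample = """
<header><nav>
  <a href="/">Home</a>
  <a href="/about">About</a>
  <a href="/contact" style="display: none">Contact</a>
</nav></header>
<footer><div hidden><a href="/privacy">Privacy</a></div></footer>
"""
print(missing_nav_links(sample, ["/", "/about", "/contact", "/privacy"]))
# ['/contact', '/privacy']
```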

Internal Linking That Actually Does Something

Had a client build a gorgeous landing page funnel. Every section used buttons that triggered modals instead of linking to discrete URLs. Organic CTR tanked, AdSense mailed them a “Navigational structure” alert, and we spent 3 hours undoing the AJAX madness.

Something kind of dumb but important: the AdSense crawler follows links. Like, legit <a href="/product"> tags. If you build your entire user flow inside Vue Router with no real link tags (every transition fired from a click handler calling router.push()), the crawler considers your site too shallow. The only way to convince it otherwise? Give it breadcrumbs.

That’s right: good old-fashioned internal anchoring. If a route isn’t discoverable from another static page, it may as well not exist. In one case, adding a basic subnav that linked blog pages to each other cleared the penalty after two re-reviews.
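Finding those undiscoverable routes is a plain graph walk. Start at the homepage, follow only real links, and see which known routes you never reach. Here's a minimal sketch. It assumes you've already extracted each page's <a href> targets into a dictionary; the site structure below is hypothetical.

```python
from collections import deque


def find_orphans(link_graph, start="/", all_routes=None):
    """Breadth-first walk of the internal link graph from `start`.

    link_graph maps each page path to the paths it links to with real
    <a href> tags. Returns the routes (from all_routes, e.g. your sitemap,
    or the graph's own keys by default) never reached by following links.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    routes = all_routes if all_routes is not None else link_graph.keys()
    return sorted(set(routes) - seen)


# Hypothetical site: /blog/post-2 exists, but nothing links to it.
graph = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog"],
    "/blog/post-2": ["/blog/post-1"],
}
print(find_orphans(graph))  # ['/blog/post-2']
```

Pass your sitemap's URLs as all_routes. Anything it returns is a page that exists on paper but not in the link graph.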

404s on Click = Instant Violation

I had this frustrating case where a nested mobile menu was pulling a bad relative path when loaded inside the AMP view. On the actual site, it worked fine. But because AMP wrapped the page under a different origin and URL path, links like href="../../about" broke monumentally. Guess what that smells like to AdSense? A broken site.
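Relative hrefs resolve against the URL of the page that contains them. So the same "../../about" lands somewhere different whenever the page is served from a different path. Python's urljoin makes this easy to see. The URLs here are hypothetical, and the /amp/ prefix only stands in for whatever path the wrapped version lived under.

```python
from urllib.parse import urljoin

link = "../../about"  # the relative href from the mobile menu

# Same href, resolved against two hypothetical page URLs at different depths.
print(urljoin("https://example.com/blog/2023/post", link))
# https://example.com/about
print(urljoin("https://example.com/amp/blog/2023/post", link))
# https://example.com/amp/about  (a route that probably 404s)

# A root-relative href resolves the same way from any depth.
print(urljoin("https://example.com/amp/blog/2023/post", "/about"))
# https://example.com/about
```

Root-relative links (/about) or absolute URLs sidestep the problem entirely.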

The platform doesn’t distinguish between appearance and function. A beautiful React component that links to a dead endpoint still counts as broken nav, and they’ll flag your entire site for it. Forget graceful degradation; build like it’s 2007 HTML unless you want to gamble on a re-application.

The behavior that finally convinced me: I hardcoded one link to /contact in the footer and got a warning cleared, but later a URL with a #contact fragment failed. Same flow, different anchor technique. They ignore fragments, so to the crawler a #contact link is just another pointer to a page it already has. Simple as that.
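The fragment never reaches the server. The browser strips everything after # before sending the request, so resolving a #contact link just lands back on a page that's already been fetched. Here's a small illustration with Python's urllib.parse; example.com is a placeholder, and crawl_target is a hypothetical helper name.

```python
from urllib.parse import urldefrag, urljoin


def crawl_target(page_url, href):
    """Return the URL actually requested for this href: the link is
    resolved against the current page, then the #fragment is dropped,
    because fragments are never sent to the server."""
    return urldefrag(urljoin(page_url, href)).url


for href in ("/contact", "#contact", "/#contact"):
    print(href, "->", crawl_target("https://example.com/blog", href))
# /contact -> https://example.com/contact
# #contact -> https://example.com/blog
# /#contact -> https://example.com/
```

Only the first href names a distinct page. The other two collapse into URLs the crawler has already seen.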

Single-Page Apps: Good Luck Without SSR

No joke, one of the more nightmarish debugging weeks I had was with a Nuxt-based ecommerce site running static builds. Worked fine for everyone except AdSense. The logs were clean. The site was fast. But Google’s crawler wasn’t patient.

SSR vs CSR — It’s Not Optional

If you’re using something like Gatsby or Next.js, don’t assume that routes generated at build time will suffice. Check what the raw HTML looks like before hydration. If your <body> just spits out a spinner and the content loads in later, AdSense flags it as low-content or broken nav.

We ran a diff test: we fetched the raw HTML of a page with curl and compared it with the rendered DOM in DevTools. The actual content was injected after hydration. Guess what AdSense saw? A blank shell.
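That curl-versus-DevTools comparison is easy to script. The sketch below fetches raw HTML with Python's urllib. No JavaScript runs, so the result is roughly what curl returns. It then counts real <a href> links and words of text outside script and style tags.

The sample pages are made up. This only approximates a non-rendering fetch and says nothing definitive about how AdSense's own crawler renders the page.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class RawHtmlAudit(HTMLParser):
    """Count what a non-rendering fetch sees: <a href> links and words of
    text outside <script>/<style>. Nothing here executes JavaScript."""

    def __init__(self):
        super().__init__()
        self.links = 0
        self.words = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a" and dict(attrs).get("href"):
            self.links += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def audit_raw_html(html):
    parser = RawHtmlAudit()
    parser.feed(html)
    parser.close()
    return {"links": parser.links, "words": parser.words}


def fetch_raw_html(url, user_agent="Mozilla/5.0"):
    """Fetch pre-hydration HTML over the network, roughly like `curl <url>`."""
    req = Request(url, headers={"User-Agent": user_agent})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


# Hypothetical pages: a client-rendered shell vs. a server-rendered page.
csr_shell = ('<html><body><div id="root"><div class="spinner"></div></div>'
             '<script src="/app.js"></script></body></html>')
ssr_page = ('<html><body><nav><a href="/about">About</a> <a href="/blog">Blog</a></nav>'
            '<main><h1>Spring catalog</h1><p>Twelve new products this week.</p></main>'
            '</body></html>')
print(audit_raw_html(csr_shell))  # {'links': 0, 'words': 0}
print(audit_raw_html(ssr_page))   # {'links': 2, 'words': 9}
```

In practice you'd call audit_raw_html(fetch_raw_html("https://your-site/some-page")). A result with zero links and a handful of words is the blank shell described above.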

Eventually, I injected no-JS fallback text into key navigation points (e.g., “If this page doesn’t load in a few seconds, click here”) and wrapped URLs in true anchor tags. Janky, yes. But the warning disappeared within a week.

Misusing Ads in Navigational Spots

This one still ticks me off. I had auto-ads turned on and they somehow muscled their way into the main menu bar. Looked fine on desktop, but on mobile, the ad overtook the menu—users had to scroll past a regional Cialis campaign just to tap “Blog.” Nothing in the dashboard warned me this would happen.

Result: “Deceptive placement of ad units within navigational elements.” Which, okay, makes sense, but good grief, who thought injecting leaderboard banners above dropdowns was fine? Disabling auto-ads fixed it, but we had to manually re-lay out every page with proper ad padding above footers and below hero sections.

Redirect Chains and Phantom Routes

You ever set up a redirect chain in a CDN config and just forget about it? I did. Had Cloudflare Workers soft-redirecting /dashboard to /app to /app/home. Users got there fine. But AdSense choked. It flagged the redirect chain as a “looping navigation path” even though it wasn’t looping; it just lost track.

Turns out Googlebot and its AdSense cousin will only tolerate a limited number of redirect hops. In my experience, three seems to be the fuzzy edge. Go past it, and your target route can drop out of the crawler’s view entirely.

Got smart and flattened the whole thing, with a meta-refresh backup and canonical tags pointing to /app/home. With the chain flattened, the error went away, and users actually got there milliseconds faster. Score one for SEO, too.
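Flattening is mechanical once the redirects are written down as a source-to-target map. Follow each chain to its end, then point every source straight at that final URL. Here's a minimal sketch of the bookkeeping only; it is not Cloudflare Workers code. The three-hop default mirrors the fuzzy limit mentioned earlier and is not a documented Google number.

```python
def resolve_chain(redirects, start, max_hops=3):
    """Follow a {source: target} redirect map from `start`.

    Returns (final_url, hops). Raises ValueError on a genuine loop or
    when the chain needs more than max_hops hops.
    """
    seen = {start}
    current, hops = start, 0
    while current in redirects:
        current = redirects[current]
        hops += 1
        if current in seen:
            raise ValueError(f"redirect loop at {current}")
        if hops > max_hops:
            raise ValueError(f"{start} needs more than {max_hops} hops")
        seen.add(current)
    return current, hops


def flatten(redirects):
    """Point every source straight at its final destination (one hop each).
    A loop-free chain can't be longer than the number of entries."""
    return {src: resolve_chain(redirects, src, max_hops=len(redirects))[0]
            for src in redirects}


chain = {"/dashboard": "/app", "/app": "/app/home"}
print(resolve_chain(chain, "/dashboard"))  # ('/app/home', 2)
print(flatten(chain))  # {'/dashboard': '/app/home', '/app': '/app/home'}
```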

Browser Extension Interference Warnings

This one took a while to even conceptualize. Certain ad blockers and privacy extensions (hi, uBlock Origin) can mess up how your nav is rendered—more importantly, how it looks to AdSense simulation environments. I once had an earnings dip and found that the navbar was totally hidden in Chrome’s headless user-agents with certain local storage values.

We ran headless Puppeteer sessions simulating AdSense’s crawler bot (user agent: Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5 Build/MMB29V) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0 Mobile Safari/537.36) and routinely saw divs hidden based on media query mismatches.

The fix? Code defensively. Always have explicit fallback styling in non-JS contexts—and stop trusting client-side conditions for rendering nav menus. And test with extensions off. Way off.

When the Sitemap Lies

I once had a lovely automated crawler script that updated a sitemap.xml every hour. Cool in theory. But when link rot set in and I had stale URLs, it created a silent disaster. AdSense considered them “linked but not reachable,” which in their terms means forbidden UX.

They do not care that it was a dynamic 404. If your sitemap links to it, and it returns a blank or half-resolved shell, you just handed the bot a loaded weapon.

Now, I manually audit sitemaps before reapplying for anything. I even found some rare routes that returned 200 OK but no body. Ghost pages. They looked fine in analytics, but failed every policy check when audited.
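The manual audit can at least be pre-screened with a script. Read every loc from the sitemap, fetch it, and flag anything that isn't a 200, or is a 200 with a nearly empty body (the ghost pages). Here's a sketch with Python's standard library. A few caveats:

- The 512-byte threshold is an arbitrary assumption.
- It doesn't handle sitemap index files.
- A JS shell padded with script tags will still sail past a byte count.

The canned responses let you try it offline.

```python
import xml.etree.ElementTree as ET
from urllib.error import HTTPError
from urllib.request import urlopen

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> in a standard <urlset> sitemap (not sitemap indexes)."""
    root = ET.fromstring(xml_text)
    return [(loc.text or "").strip()
            for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def fetch_status(url):
    """Real network fetch: returns (status_code, body_bytes); follows redirects."""
    try:
        with urlopen(url, timeout=10) as resp:
            return resp.status, resp.read()
    except HTTPError as err:
        return err.code, b""


def audit(urls, fetcher=fetch_status, min_bytes=512):
    """Flag non-200 responses and 200s whose stripped body is under min_bytes."""
    problems = {}
    for url in urls:
        status, body = fetcher(url)
        size = len(body.strip())
        if status != 200:
            problems[url] = f"HTTP {status}"
        elif size < min_bytes:
            problems[url] = f"200 but only {size} bytes"
    return problems


# Offline demo: canned (status, body) pairs instead of real fetches.
sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-post</loc></url>
  <url><loc>https://example.com/ghost</loc></url>
</urlset>"""
fake = {
    "https://example.com/": (200, b"<html>" + b"x" * 2000 + b"</html>"),
    "https://example.com/old-post": (404, b""),
    "https://example.com/ghost": (200, b""),
}
print(audit(sitemap_urls(sample_sitemap), fetcher=fake.get))
```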

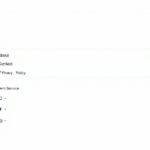

Failsafe: Minimal Dummy Page for Testing

Here’s the thing I now do every time before launching a new navigation setup: build a dead-simple page with pure HTML links to every core route. No framework wrapping. No layout components. Just this:

```html
<ul>
  <li><a href="/about">About</a></li>
  <li><a href="/contact">Contact</a></li>
  <li><a href="/shop/products">Products</a></li>
  <li><a href="/blog">Blog</a></li>
</ul>
```

Then I check that page with the URL Inspection tool in Google Search Console, with Lighthouse, and with a page fetch in the AdSense interface if one is available. Half the time, this dummy page passes when the real SPA doesn’t. It’s embarrassing how lazy these bots are.
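If your route list changes often, generating the dummy page from it keeps the test page from drifting out of date. Here's a small sketch. The ROUTES list is hypothetical and could just as well come from your sitemap.

```python
from html import escape

# Hypothetical core routes as (path, label) pairs.
ROUTES = [
    ("/about", "About"),
    ("/contact", "Contact"),
    ("/shop/products", "Products"),
    ("/blog", "Blog"),
]


def dummy_nav_page(routes):
    """Return a framework-free HTML page with one plain <a href> per route.
    Paths and labels are HTML-escaped so odd characters can't break markup."""
    items = "\n".join(
        f'    <li><a href="{escape(path)}">{escape(label)}</a></li>'
        for path, label in routes
    )
    return (
        "<!DOCTYPE html>\n"
        '<html lang="en">\n'
        '<head><meta charset="utf-8"><title>Nav test</title></head>\n'
        "<body>\n"
        "  <ul>\n"
        f"{items}\n"
        "  </ul>\n"
        "</body>\n"
        "</html>\n"
    )


print(dummy_nav_page(ROUTES))
```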