Accurately Tracking Keyword Rankings Without Losing Sanity

Understanding the Illusion of “Average Position”

First, let’s agree that the “average position” metric—whether from Google Search Console, SEMrush, or whatever fairy dust-laden dashboard you happen to be staring into—is one of the most misleading numbers in existence. It’s the kind of statistic that looks helpful until you realize it’s built on sand.

Search Console builds this average by blending impressions across wildly different locations, devices, and even SERP features. A page can show up once in position 1 and nine times buried under FAQs in position 42, and you’ll get an average position of about 38 (37.9, to be exact), which isn’t a thing that actually happened.
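To see how a blended average describes a position nobody saw, here is a minimal sketch that runs the example’s numbers (one impression at position 1, nine at position 42) through an impression-weighted mean. The function name and the dict-of-counts input are illustrative, not anything Search Console exposes.

```python
def average_position(impressions_by_position: dict[int, int]) -> float:
    """Impression-weighted mean position, the way a blended average is built.

    Keys are SERP positions; values are how many impressions occurred there.
    """
    total = sum(impressions_by_position.values())
    if total == 0:
        raise ValueError("no impressions to average")
    weighted = sum(pos * count for pos, count in impressions_by_position.items())
    return weighted / total


# One impression at position 1, nine buried at position 42.
blended = average_position({1: 1, 42: 9})
print(round(blended, 1))  # 37.9
```

Neither the #1 result nor the page-five result appears in that number; it reports a ranking that no searcher ever had.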

I once had a page that was ranking #1 for a localized query when searched from a coffee shop’s flaky Wi-Fi, but showed average position 27 in Search Console for that keyword. I FTP’d into the server thinking the cache broke or something. Nope—just how GSC smears data to death.

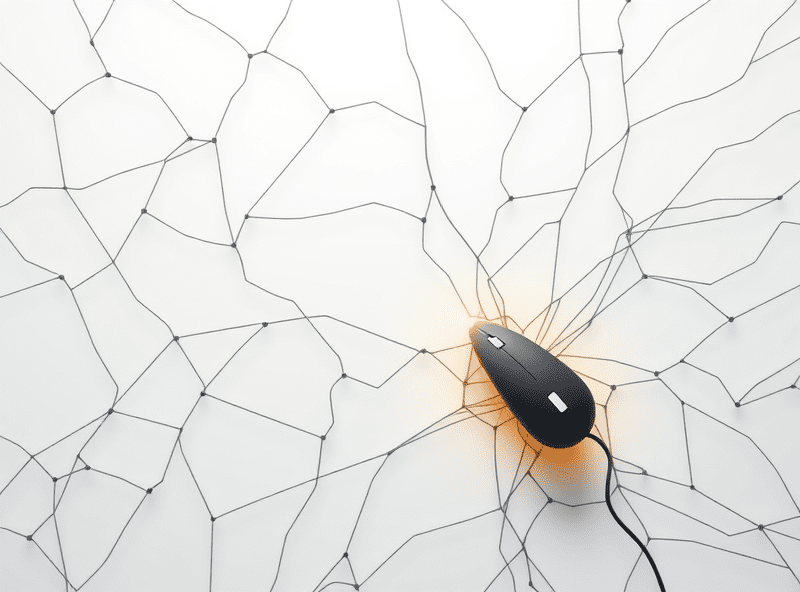

Pick Your Tools, But Don’t Trust Their Defaults

Keyword tracking tools cheat behind the scenes. This is the dirty little truth vendors don’t plaster on their demo pages. Rank Tracker, Ubersuggest, SE Ranking, take your pick: they all scrape under different conditions (some mimic mobile Chrome on Android, some pretend to be vanilla desktop), and they almost never explain how they handle geolocation overrides unless you dig through their forums or DM support.

BrightLocal is honest about location-specific snapshots, but most others just give you the ranking as seen from whatever proxy IP pulled the result. So you might be tracking the rank of “best HVAC repair” from a server housed in Ohio… even if your business is in Portland.

The workaround I landed on? Running parallel tests on both mobile and desktop, with GeoShift or a custom gl= + hl= parameter string in a headless browser (I use Puppeteer). You’d be stunned how often the tracker, the mobile run, and the desktop run return three different ranks. It’s not inconsistency; it’s just how specific Google’s personalization has gotten.
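Here is a minimal sketch of the test matrix behind those parallel runs, assuming you drive the headless browser yourself (the actual page load is left out). `gl` (country) and `hl` (interface language) are real Google search parameters; the device profiles, the placeholder user-agent strings, and the name `build_test_matrix` are hypothetical stand-ins for whatever your browser setup emulates.

```python
from urllib.parse import urlencode

# Hypothetical device profiles: replace the placeholder user agents and
# viewports with the ones your headless browser actually emulates.
DEVICE_PROFILES = {
    "desktop": {"user_agent": "PLACEHOLDER-desktop-chrome-ua", "viewport": (1366, 768)},
    "mobile": {"user_agent": "PLACEHOLDER-android-chrome-ua", "viewport": (412, 915)},
}


def build_test_matrix(query: str, country: str, language: str) -> list[tuple]:
    """Return one (device, user_agent, viewport, url) job per device profile.

    gl pins the country and hl the interface language; the URL is identical
    for every device, so only the browser's self-presentation differs.
    """
    params = urlencode({"q": query, "gl": country, "hl": language})
    url = f"https://www.google.com/search?{params}"
    return [
        (device, profile["user_agent"], profile["viewport"], url)
        for device, profile in DEVICE_PROFILES.items()
    ]


for device, _ua, _viewport, url in build_test_matrix("best HVAC repair", "us", "en"):
    print(device, url)
```

Because `gl` works at the country level, it won’t separate Portland from Ohio by itself; that part still depends on where the request originates.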

The Mobile/Desktop Split is Wider Than You Think

A very real undocumented issue: Google sometimes shows fewer organic results on mobile than desktop for the same query, even before featured snippets or ads kick in. I’ve seen plenty of SERPs where result #7 on desktop just vanishes entirely on mobile, not demoted, just gone.

This screws your tracking if your tool doesn’t separate device types—and many don’t by default. Search Console does segment this, but again, it parrots averages. There’s no real substitute for setting up your own device-emulating headless sweeps if you care about precise positioning.
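Once you have both device sweeps, spotting the “vanished, not demoted” case is a small diff. This is a minimal sketch, assuming each sweep has already been reduced to an ordered list of organic result URLs (position 1 first); the function name and return shape are my own.

```python
def compare_devices(desktop: list[str], mobile: list[str]):
    """Diff two ordered lists of organic result URLs (position 1 first).

    Returns (vanished, shifts): URLs on desktop that are missing on mobile,
    and for URLs on both, the position change (mobile minus desktop).
    """
    mobile_pos: dict[str, int] = {}
    for pos, url in enumerate(mobile, start=1):
        mobile_pos.setdefault(url, pos)  # keep the first (best) occurrence

    vanished = [url for url in desktop if url not in mobile_pos]
    shifts = {
        url: mobile_pos[url] - pos
        for pos, url in enumerate(desktop, start=1)
        if url in mobile_pos
    }
    return vanished, shifts


desktop = ["https://a.example/1", "https://b.example/2", "https://c.example/3", "https://d.example/4"]
mobile = ["https://b.example/2", "https://a.example/1", "https://d.example/4"]
vanished, shifts = compare_devices(desktop, mobile)
print(vanished)  # ['https://c.example/3']
```

A positive shift means the URL sits lower on mobile; anything in `vanished` is the case a blended device average hides completely.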

There was this one week where conversion rates dropped inexplicably on mobile and bounce rate exploded. Organic keyword ranking hadn’t changed—according to “the tools.” When I ran simulated mobile SERPs manually, turns out our core page dropped off page one entirely, thanks to the search intent getting hijacked by a carousel of short videos Google decided to promote that week.

Parsing SERP Features is a Whole Separate Hell

Oh man, if you’ve never tried to programmatically detect whether your page is actually ranking in the featured snippet or just begrudgingly included under a ‘People also ask’ accordion, I envy your peace. There’s nothing built into APIs like Ahrefs or Moz that consistently tags this. You either pay for structured SERP scraping (DataForSEO kinda works) or roll your own with XPath and pain.

Also, Google keeps refactoring the markup behind these features, so every few months I have to re-tune my parser like a jazz saxophonist with a pager. No warning. No changelogs. I usually discover it when a Python regex fails silently and suddenly I’m tracking zero snippets across 50 terms. Cool.
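The cheapest defense against the silent-zero failure is making the parser refuse to return a suspiciously empty batch. A minimal sketch: the `data-feature="snippet"` marker below is made up, since Google’s real markup shifts without notice, and `parse_batch`, `ParserDriftError`, and the `min_hits` threshold are hypothetical names for illustration.

```python
import re

# Hypothetical marker: Google's real featured-snippet markup changes without
# notice, so treat this pattern as a placeholder you re-tune, not a known selector.
SNIPPET_PATTERN = re.compile(r'data-feature="snippet"[^>]*>\s*<a href="([^"]+)"')


class ParserDriftError(RuntimeError):
    """Raised when a batch parses to too few snippets, usually a markup change."""


def find_snippet_url(html: str):
    """Return the URL inside the (hypothetical) snippet container, or None."""
    match = SNIPPET_PATTERN.search(html)
    return match.group(1) if match else None


def parse_batch(pages: dict[str, str], min_hits: int = 1) -> dict:
    """Map each term to its snippet URL (or None), failing loudly on drift."""
    results = {term: find_snippet_url(html) for term, html in pages.items()}
    hits = sum(1 for url in results.values() if url is not None)
    if pages and hits < min_hits:
        raise ParserDriftError(
            f"{hits} snippets across {len(pages)} terms; check the parser first"
        )
    return results
```

A real batch of 50 terms with zero snippets is possible, but rare enough that a human should look before those zeros land in a report.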

A quote I keep taped to my monitor: “You’re technically still ranking #1, but what if Google erased you from attention entirely?”

Keyword Cannibalization Breaks Tracking Logic

When you’ve got five landing pages trying to rank for the same set of mid-tail terms, you’re not ranking five times—you’re confusing Google. This gets even weirder when your tracker doesn’t distinguish which URL is ranking. Many just tell you the rank, not the asset.

So you might be celebrating an improved position for “how long does epoxy take to dry” thinking your epoxy guide is climbing… but GSC will show the rank bump was for the e-commerce detail page instead. Wrong page. Lower conversions. More bounce. Nobody wins.

This is where stripping down overlapping content fields across blog posts and product listings becomes crucial. Short fix: assign a specific target URL to each keyword in the tracker tool and force exact-match keyword-to-URL associations. Or tag each landing page with an internal UTM variant solely for rank tracking purposes. Yes, it’s hacky; yes, it works.
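If your data includes both the query and the page dimension (a Search Console export does), you can catch the “wrong page got the bump” case automatically. A minimal sketch, assuming rows shaped as `(query, page, impressions, position)` tuples and a hypothetical `intended` mapping you maintain by hand; picking the leader by best average position is my choice, and weighting by clicks would be equally defensible.

```python
from collections import defaultdict


def find_cannibalized(rows, intended: dict[str, str], min_impressions: int = 10) -> dict:
    """Flag queries where more than one URL collects meaningful impressions.

    rows: iterable of (query, page, impressions, position) tuples.
    intended: hypothetical mapping of query -> URL you want ranking for it.
    """
    by_query = defaultdict(list)
    for query, page, impressions, position in rows:
        if impressions >= min_impressions:
            by_query[query].append((page, impressions, position))

    flagged = {}
    for query, pages in by_query.items():
        if len(pages) < 2:
            continue  # only one URL competing: no cannibalization signal
        # "Leader" = the URL with the best (lowest) average position.
        leader = min(pages, key=lambda p: p[2])[0]
        flagged[query] = {
            "pages": sorted(p[0] for p in pages),
            "leader": leader,
            "wrong_leader": query in intended and intended[query] != leader,
        }
    return flagged


rows = [
    ("how long does epoxy take to dry", "/guides/epoxy-drying", 120, 8.4),
    ("how long does epoxy take to dry", "/shop/epoxy-kit", 300, 5.1),
    ("epoxy kit", "/shop/epoxy-kit", 500, 2.0),
]
intended = {"how long does epoxy take to dry": "/guides/epoxy-drying"}
print(find_cannibalized(rows, intended))
```

Here the guide is the intended page, but the product page holds the better position, so the query comes back with `wrong_leader` set.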

Timezone Mismatches & Ranking Data Drift

If your tracker isn’t syncing its scans to your local time (or whatever timezone your Analytics data is set to), you’ll get phantom shifts in ranking that don’t line up with traffic or impressions. This is rarely discussed but extremely relevant if you’re troubleshooting a high-stakes SERP movement “on Monday morning” that the tracker shows as happening Sunday night: different calendar date, different reporting window, absolute chaos.

This happened to me when I was cross-referencing a keyword drop with a sudden AdSense CTR spike. The spike looked like it lined up with a new SERP position at 8am, but the tracker had actually run two hours earlier because of a timezone offset in a different data center. For two full days I blamed an AdSense experiment that hadn’t even triggered yet. Total red herring. Always align your platform and tracker to the same timezone (UTC is the sane default) or go mad.
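A minimal sketch of the alignment step: store every scan as a timezone-aware timestamp and only convert to a calendar date at report time, in the report’s own zone. Search Console dates its performance data in Pacific Time, which is why `America/Los_Angeles` appears below; `reporting_date` is a hypothetical helper, and it relies on the system’s tz database being available to `zoneinfo`.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def reporting_date(ts: datetime, report_tz: str):
    """Return the calendar date a scan falls on in the reporting timezone.

    Naive timestamps are rejected: a scan time without an offset is exactly
    how a Monday-morning move ends up filed under Sunday.
    """
    if ts.tzinfo is None:
        raise ValueError("timestamp has no timezone; refusing to guess")
    return ts.astimezone(ZoneInfo(report_tz)).date()


# A tracker scan at 06:00 UTC on Monday, 4 March 2024.
scan = datetime(2024, 3, 4, 6, 0, tzinfo=timezone.utc)
print(reporting_date(scan, "UTC"))                  # 2024-03-04
print(reporting_date(scan, "America/Los_Angeles"))  # 2024-03-03
```

Same scan, two different calendar days: compare tracker rows with traffic data only after both sides have been bucketed in the same zone.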

Google’s Obsession With Personalization Kills Consistency

Every result is now partly bespoke. Logged-in users see recommendations. Logged-out users still get IP-based skew. Reusing a private browsing session doesn’t reset your personalization; only a freshly opened incognito window helps, and even then, things like location, recent traffic trends, and even mobile device type can flavor the SERP.

This used to be manageable. Now, it’s a full-time job invalidating your own tests. A keyword that ranks #3 for you might be #6 for someone one city over. Or #1 if your server location lines up with Google’s fetish for hyper-local pickup formats.

Tracking tools can’t save you here. You either:

- Buy or build a pool of rotating proxies with city-specific targeting

- Log and compare SERPs from anonymized browsers across multiple VPN endpoints

- Add network jitter scripts to simulate real folks (risky but effective)

- Track behavioral data (clicks/impressions/CTR) instead of position alone

- Use search results API snapshots and build your own consensus averages

Once I started comparing VPN-routed desktop SERPs from five cities simultaneously, I stopped sending angry emails to our link builders. They weren’t failing; the SERPs themselves just disagreed about which page was “#1” that week.
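Building your own consensus from several vantage points can be as simple as taking a median instead of a mean, so one odd city doesn’t drag the number the way a blended average does. A minimal sketch, assuming you’ve recorded one observed position per vantage point (or `None` when the page wasn’t found); the sentinel value 101 for “not ranked” is an arbitrary choice of mine.

```python
from statistics import median

NOT_RANKED = 101  # arbitrary sentinel: treat "not in the top 100" as position 101


def consensus_rank(observations: dict) -> dict:
    """Summarize one keyword's rank across vantage points (cities, VPNs, proxies).

    observations: vantage point -> observed position, or None if not found.
    Returns the median position, how many vantage points missed the page, and
    the spread (best to worst) among the vantage points where it did rank.
    """
    if not observations:
        raise ValueError("no observations")
    positions = [NOT_RANKED if p is None else p for p in observations.values()]
    ranked = [p for p in observations.values() if p is not None]
    return {
        "median": median(positions),
        "misses": len(positions) - len(ranked),
        "spread": (max(ranked) - min(ranked)) if ranked else None,
    }


print(consensus_rank({"Portland": 1, "Seattle": 3, "Denver": 4, "Chicago": None, "Columbus": 2}))
```

A wide spread with a steady median is the “SERPs disagree” situation, not a link-building failure.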

GSC’s 16-Month Data Cap Will Bite You Later

Nobody tells you this until it’s too late, but Google Search Console locks you into a 16-month rolling window, and there’s no historical export beyond it unless you pip install a client and pull the data out incrementally yourself. If you want ANY keyword-level trends older than that, you’d better be warehousing that stuff yourself.

We lost six months of critical seasonal keyword movements because I fully trusted the API to let us pull retroactively in January. Nope. You get the last 16 months, period. It’s basically the cookie expiration of regret.

The workaround I’ve landed on (besides painful cron jobs and GSC API gymnastics): dump all core query+page+CTR combos into BigQuery every 30 days, each with an exact timestamp. Cloudflare + GSC makes auth token refresh a little flaky, so keep a manual trigger in case your cloud function decides to nap for 3 hours. That happened to me during Black Friday week.
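The pull side of that dump can be sketched against the Search Analytics API (`searchanalytics.query`), which pages results through `rowLimit` (25,000 max per request) and `startRow`. This is a minimal sketch under assumptions: the `fetch` callable is injected so the paging logic stays testable, and with google-api-python-client it could wrap `service.searchanalytics().query(siteUrl=..., body=body).execute()`. The function name, record shape, and `pulled_at` field are mine, and the BigQuery write is left out.

```python
from datetime import datetime, timezone

ROW_LIMIT = 25000  # per-request maximum for the Search Analytics API


def pull_window(fetch, start_date: str, end_date: str) -> list[dict]:
    """Page through one date window and flatten rows for warehousing.

    fetch: callable taking a request body dict and returning the API's JSON
    response as a dict (rows live under "rows"; the key is absent when empty).
    Dates are YYYY-MM-DD strings.
    """
    pulled_at = datetime.now(timezone.utc).isoformat()  # the "exact timestamp"
    records, start_row = [], 0
    while True:
        body = {
            "startDate": start_date,
            "endDate": end_date,
            "dimensions": ["date", "query", "page"],
            "rowLimit": ROW_LIMIT,
            "startRow": start_row,
        }
        rows = fetch(body).get("rows", [])
        for row in rows:
            day, query, page = row["keys"]  # keys follow the dimensions order
            records.append({
                "date": day, "query": query, "page": page,
                "clicks": row["clicks"], "impressions": row["impressions"],
                "ctr": row["ctr"], "position": row["position"],
                "pulled_at": pulled_at,
            })
        if len(rows) < ROW_LIMIT:  # short page means we've reached the end
            return records
        start_row += ROW_LIMIT
```

Keeping the request in a plain function also makes the manual trigger trivial: call it with the missed window when the scheduled job naps.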

Poor URL Parameter Handling Still Wrecks Report Accuracy

A final bugbear: Google Search Console still occasionally attributes ranking data to the canonical URL Google has chosen, not the actual URL the click landed on, especially when utm_*, fbclid, or custom parameters are appended. Those clicks often don’t register correctly unless the parameters are flagged under URL Parameters in Search Console setup.

This is brutal for campaign LPs or dynamic ecommerce filters. Last year I noticed clicks disappearing from a well-performing recipe page we’d just linked to via a summer email promo. Traffic was there in GA—but zero keyword exposure in GSC. It wasn’t until I stripped the utm_medium param and ran manual test queries that I saw GSC wasn’t recognizing that variant as ranking *at all*.

What worked: explicitly registering the parameter in the URL Parameters section (an annoying, old-school interface that Google has since retired, in 2022), and hard-coding rel=canonical on the base variant without any script-based overwrites. It’s a gnostic dance, but it stabilizes the data output.
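When you generate that canonical href, or normalize URLs before joining analytics data against Search Console rows, stripping tracking parameters is the core operation. A minimal sketch using only `urllib.parse`; the parameter list is illustrative, so extend it with your own campaign params, and `canonical_base` is a hypothetical helper, not a Search Console feature.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Illustrative tracking parameters; extend with your own campaign params.
TRACKING_PARAMS = {"fbclid", "gclid"}
TRACKING_PREFIXES = ("utm_",)


def canonical_base(url: str) -> str:
    """Drop tracking parameters and the fragment so variants collapse to one URL.

    Non-tracking parameters (e.g. a real filter) are kept in their original order.
    """
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS and not key.startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_base(
    "https://example.com/recipes/summer-salad?utm_source=email&utm_medium=newsletter&serves=4#top"
))  # https://example.com/recipes/summer-salad?serves=4
```

Whether a parameter like `serves` belongs in the canonical is a per-site judgment; the function only guarantees that tracking noise never does.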