Using Blog Analytics to Predict What Actually Gets Clicks

Breaking Down Click-Through Rates on Different Post Types

At some point I realized my “7 Tips to Fix X” posts were getting crushed by the dumbest thing I ever wrote: a half-rant about Chromium memory leaks. Lower effort, practically no formatting, definitely not SEO’d, but the CTR was almost double. I thought it had to be a fluke or snippet manipulation, but nope. People just clicked more. So I dug into the actual AdSense report breakdowns and started tagging posts with dumb internal codes like [GUTIL] or [DEVLOG] just to track vibes.

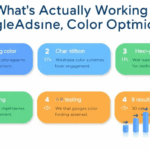

Turns out post type matters more than I expected. Not headline style — actual structural category. Here’s how my rough breakdown looked across content types with at least 50k impressions per month:

- Patch Notes or Tool Updates: High RPM but low CTR. People get there through subscriptions, not search or surf.

- Problem-Solution Posts: Medium on both, but weirdly steady. Evergreen traffic over months.

- Rants & Observations: Low RPMs, but absurd social CTRs. People email these around!

- Comparative Reviews: Best RPM by far. Not even close. But they’re slow starters.

- Checklist-style Tips: Full clickbait trap. They spike and plummet fast. High CTR, trash retention.

What weirdly shook me here? As far as I can tell, Google doesn’t account for post framing at all. It seems to throw the same ad logic at every post type and hope RPMs work out at scale. That’s a big dumb assumption when reader behavior diverges this sharply by content category.
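A minimal sketch of how a per-tag breakdown like the one above could be computed from an exported report. The `PostStats` fields, the `breakdown_by_tag` helper, and the tag names are all hypothetical, and RPM here is taken per 1,000 impressions; swap in page views if that is what your export contains.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PostStats:
    tag: str          # internal code such as "DEVLOG" (illustrative label)
    impressions: int
    clicks: int
    earnings: float   # same currency for every row


def breakdown_by_tag(rows, min_impressions=50_000):
    """Aggregate CTR and RPM per tag, skipping tags under the impression floor."""
    totals = defaultdict(lambda: {"impressions": 0, "clicks": 0, "earnings": 0.0})
    for row in rows:
        bucket = totals[row.tag]
        bucket["impressions"] += row.impressions
        bucket["clicks"] += row.clicks
        bucket["earnings"] += row.earnings

    result = {}
    for tag, t in totals.items():
        if t["impressions"] < min_impressions:
            continue  # too little data to say anything about this tag
        result[tag] = {
            "ctr": t["clicks"] / t["impressions"],
            "rpm": t["earnings"] / t["impressions"] * 1000,
        }
    return result
```

Summing raw counts per tag before dividing keeps one tiny post with a freak CTR from skewing the category average.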

AdSense Behavioral Gaps in Article-Type Recognition

So AdSense technically scans content to infer context. Cool. But it lumps way too many things together under “tech” or “web development” and often ignores article type completely. If you’ve ever noticed an ad for data brokers popping up on a post about DNS routing, you’ve seen it fail.

What’s worse: AdSense’s ML model seems to assume any post with code snippets is intent-heavy. Suddenly I had mortgage ads appearing next to a walkthrough on using cURL headers — like the reader was going to buy a house mid-debug. Ridiculous.

An accidental hint from the debug output:

“adContext: ‘financial_tech’ confidence=0.91”

This debug string showed up when I was watching pubads debug output in Chrome DevTools. That post had nothing to do with fintech — it was loosely referencing transactional emails. One word (“transaction”) pulled the whole thing off the rails. Likely due to keyword co-location mapping or historic performance on similarly phrased posts. There’s no API surfacing this logic. You only see this stuff while live-debugging network traffic or digging through GAM log streams.

The fix, in theory, is to use custom AdSense categories or wrap sensitive content differently. But nothing in the public AdSense interface lets you tune or even see which context was inferred for each article.

Ad Unit Performance Depends Heavily on Content History

Here’s the kicker nobody talks about: if you’ve historically hosted articles that underperformed — like, say, a bunch of shallow AI summaries during the GPT blog rush — those posts quietly tank your ad unit relevance index. It drags performance across everything else.

My own test: nuked 23 weak posts that were pulling in around 100 daily views combined. After that, my homepage leaderboard ads tripled in eCPM within 2 weeks. Didn’t touch layout, size, targeting, or frequency. Just got rid of the fluff AdSense had learned to devalue.

There’s a vague feedback loop in effect: low-performing content teaches the ad system not to trust certain sections of your site. I haven’t seen that documented anywhere, but the trendline was brutally consistent across three sites once I started testing deletes and watching unit-level shifts.
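A rough sketch of the before/after comparison behind a test like this, assuming you have daily earnings and impressions for a single ad unit. The `window_ecpm` and `ecpm_shift` helpers and the input shape are hypothetical. A plain before/after ratio does not control for seasonality or traffic mix, so treat the result as a hint rather than proof.

```python
from datetime import date, timedelta


def window_ecpm(daily, start, end):
    """eCPM over [start, end): total earnings per 1,000 impressions."""
    earnings = sum(e for d, (e, i) in daily.items() if start <= d < end)
    impressions = sum(i for d, (e, i) in daily.items() if start <= d < end)
    if impressions == 0:
        raise ValueError("no impressions in window")
    return earnings / impressions * 1000


def ecpm_shift(daily, change_day, window_days=14):
    """Compare eCPM in equal windows before and after change_day.

    daily maps date -> (earnings, impressions) for one ad unit.
    Returns (before, after, after / before).
    """
    span = timedelta(days=window_days)
    before = window_ecpm(daily, change_day - span, change_day)
    after = window_ecpm(daily, change_day, change_day + span)
    if before == 0:
        raise ValueError("zero eCPM before the change; ratio is undefined")
    return before, after, after / before
```

Running the same comparison on an ad unit that was not affected by the deletions gives a crude baseline to compare against.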

The Dumbest Page Quality Error I’ve Ever Triggered

Six months ago, a guide I wrote on fixing PageSpeed Insights errors broke half the ad loading on mobile. Why? Because I dynamically inserted table-of-contents anchors using an async-loaded script after the first layout paint. That somehow registered as unexpected layout shift across the whole page, which in turn pushed its LCP time up, which then got the page flagged internally as low quality in AdSense. It took two weeks for that to show up as a warning.

Even funnier, I found out completely by accident while refreshing Chrome Lighthouse results and seeing LCP go from sub-2s to over 5s. But nothing had changed in the CDN or content. Just that minor JS injection time bump.

This stuff matters because when your content is borderline in performance scores, AdSense seems to deprioritize ad loading. You might still get fill, but late, or only after scroll, which annihilates your mobile revenue.
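One way to sidestep that kind of late injection is to generate the table of contents at build time so the anchors are already in the HTML that ships. This is a minimal sketch under strong assumptions: headings are bare `<h2>...</h2>` tags with no attributes, and the `slugify` and `add_static_toc` helpers are hypothetical, not part of any particular static site generator.

```python
import re
from html import unescape

HEADING = re.compile(r"<h2>(.*?)</h2>", re.DOTALL)


def slugify(text):
    """Strip tags and entities, lowercase, join alphanumeric runs with hyphens."""
    plain = unescape(re.sub(r"<[^>]+>", "", text))
    return "-".join(re.findall(r"[a-z0-9]+", plain.lower())) or "section"


def add_static_toc(html):
    """Give every bare <h2> an id and return (toc_html, html_with_ids)."""
    entries = []
    seen = {}

    def repl(match):
        inner = match.group(1)
        slug = slugify(inner)
        seen[slug] = seen.get(slug, 0) + 1
        if seen[slug] > 1:
            slug = f"{slug}-{seen[slug]}"  # keep duplicate headings unique
        entries.append((slug, inner))
        return f'<h2 id="{slug}">{inner}</h2>'

    body = HEADING.sub(repl, html)
    items = "".join(
        f'<li><a href="#{slug}">{inner}</a></li>' for slug, inner in entries
    )
    toc = f"<nav><ul>{items}</ul></nav>" if entries else ""
    return toc, body
```

Because the TOC markup exists before first paint, nothing gets inserted into the layout afterwards. A real template pipeline would want a proper HTML parser rather than a regex.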

Using Search Console to Reverse-Engineer Article Decay

One specific trick that’s paid off: using Search Console’s query filter + date range to figure out how long a post keeps its peak. Most of mine hold stable CTR for 4–6 months before slipping. But then I noticed a few losing steam much faster — like under 30 days. Almost always these were trick posts: tools, installer quirks, copyright mentions. Once the microtrend faded, they got utterly buried.

But I also found a bunch of long-tail win posts — like simple, timeless stuff (“How to check .htaccess overrides”) that never trended, never broke 1000 views/day, but just coasted.

When I charted those against AdSense earnings, I started sorting post patterns into three buckets:

- Trend-dependent: big spikes, die fast. Worthwhile only if they link elsewhere.

- Resource-type: long tail, low maintenance. Great for filler inventory.

- Stayer-crankers: good CTR + RPM, all year. These are gold. Usually very narrow-topic and dry-sounding.

If you’re wondering how this affects content planning: I started using this breakdown to stop writing reaction posts and instead front-load slow-burn, stayer templates. It’s made me a little less relevant on Reddit, but my baseline RPM went way up.
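The decay check and the three buckets above can be sketched roughly like this, assuming you have exported a per-day CTR series for each post from Search Console. The `days_near_peak` and `bucket` helpers, the 70% cutoff, the 30-day line, and the `rpm_good` threshold are all placeholder assumptions to tune against your own data.

```python
def days_near_peak(daily_ctr, keep_fraction=0.7):
    """Days from the CTR peak until CTR first drops below keep_fraction * peak.

    daily_ctr holds one CTR value per day since publication.
    Returns None if CTR never drops below the cutoff in the data given.
    """
    if not daily_ctr:
        raise ValueError("need at least one day of data")
    peak_day = max(range(len(daily_ctr)), key=daily_ctr.__getitem__)
    floor = daily_ctr[peak_day] * keep_fraction
    for day in range(peak_day + 1, len(daily_ctr)):
        if daily_ctr[day] < floor:
            return day - peak_day
    return None


def bucket(hold_days, rpm, rpm_good=5.0):
    """Sort a post into one of the three buckets (thresholds are placeholders)."""
    if hold_days is not None and hold_days < 30:
        return "trend-dependent"
    if rpm >= rpm_good:
        return "stayer"
    return "resource-type"
```

Daily CTR is noisy, so in practice you would probably smooth it first, for example with a 7-day rolling average, before looking for the drop.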

Better Predicting Bad Fit Before Publishing

So here’s exactly how I now gauge whether something I’m writing will probably tank:

- Has the post topic already declined in Discover visibility?

- Will the core keywords show ads for SaaS or unprofitable installs?

- Is the content actionable without finishing the page?

- Am I referencing more than two external brands frequently?

- Would this post be interesting to a dev subreddit but not rank organically?

If it hits more than 2 of those? I skip it. I wasted a week on a VS Code extension roundup that hit all 5. CTR and RPM were awful, and bounce rate was north of 93%. Don’t try to save these with better phrasing. The core concept just doesn’t serve dual audiences.
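For what it is worth, the checklist is simple enough to encode. A small sketch follows, where the `PrePublishCheck` class and its field names are hypothetical labels for the five questions above, and the answers still have to come from your own judgment.

```python
from dataclasses import dataclass, fields


@dataclass
class PrePublishCheck:
    declining_in_discover: bool          # topic already fading in Discover
    low_value_ad_keywords: bool          # keywords pull SaaS or install ads
    actionable_without_finishing: bool   # reader can leave after the intro
    many_external_brands: bool           # more than two brands referenced often
    subreddit_only_appeal: bool          # fun for a dev subreddit, weak in search

    def red_flags(self):
        """Count how many of the five questions were answered yes."""
        return sum(getattr(self, f.name) for f in fields(self))

    def should_skip(self, max_flags=2):
        """Skip the post when it hits more than max_flags of the checks."""
        return self.red_flags() > max_flags
```

For example, `PrePublishCheck(True, True, True, True, True).should_skip()` returns `True`, which is where the VS Code roundup would have landed.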

Edge Case: JSON Snippets Sneaking Past Policy Filters

LOL so one of my ugliest little wins — and no, I haven’t repeated this — came when using long inline JSON-LD for a documentation schema block. Somehow, peppered into those key-value pairs, certain product names or references bypassed keyword-based ad suppression. One post referenced a semi-sensitive open-source fork that usually breaks monetization — but tucked in the JSON, it passed.

I’m not saying this is a feature. And I wouldn’t build a strategy around hiding words in structured data. But it suggests the content classification layer disregards a lot of page sections structurally — especially if it thinks those are part of spec compliance or markup metadata. Wouldn’t surprise me if it entirely skipped over <script type="application/ld+json"> in first-pass scans.

And yes, I know this is dumb. It was an accidental discovery while trying to get FAQ schema right. But still — if you ever need to reference something touchy without it tanking your ad index score, you’ll probably have better odds in structured data than body text.

The One Query That Changed My Content Model

This one was hiding in plain sight. I was going through AdSense Performance Reports → Platforms → Web and decided to compare how long Android Chrome traffic keeps earning versus desktop Firefox. Wasn’t expecting much. What I found spelled it out in neon:

“Mobile readers convert early. Desktop readers convert late, but longer.”

I piped that into a spreadsheet, did some cohort comparisons, and yeah, it wasn’t even close. Mobile Android readers click more in week 1 but completely abandon longer posts, while Firefox desktop users trickle in slowly but show 2–3x the RPM three months after publication. That changed how I structure posts: preview-heavy openings for mobile, anchor-based digging for desktop.

Also? AdSense has no built-in way to surface this connection. You have to track it yourself.
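A minimal sketch of that kind of cohort comparison, assuming you can join platform-level report rows to each post's publish date yourself. The `rpm_by_cohort` helper, the row layout, and the platform labels are hypothetical, and RPM is again taken per 1,000 impressions.

```python
from collections import defaultdict


def rpm_by_cohort(rows, bucket_days=30):
    """Group earnings by platform and post age, then compute RPM per group.

    rows: iterable of (platform, days_since_publish, earnings, impressions).
    Returns {(platform, age_bucket_index): rpm}, where bucket 0 covers the
    first bucket_days days after publication, bucket 1 the next, and so on.
    """
    sums = defaultdict(lambda: [0.0, 0])
    for platform, age_days, earnings, impressions in rows:
        key = (platform, age_days // bucket_days)
        sums[key][0] += earnings
        sums[key][1] += impressions
    return {k: e / i * 1000 for k, (e, i) in sums.items() if i > 0}
```

Comparing the same age bucket across platforms, and the same platform across age buckets, is what makes an early-versus-late pattern visible.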

Anyway, that single insight made long-form publishing actually make money, not just pageviews. I basically stopped writing anything under 800 words after seeing that trend repeat five times across very different topics.