What Google SERP Features Actually Do to Your Rankings

Google SERP Features That Actually Change Click Behavior

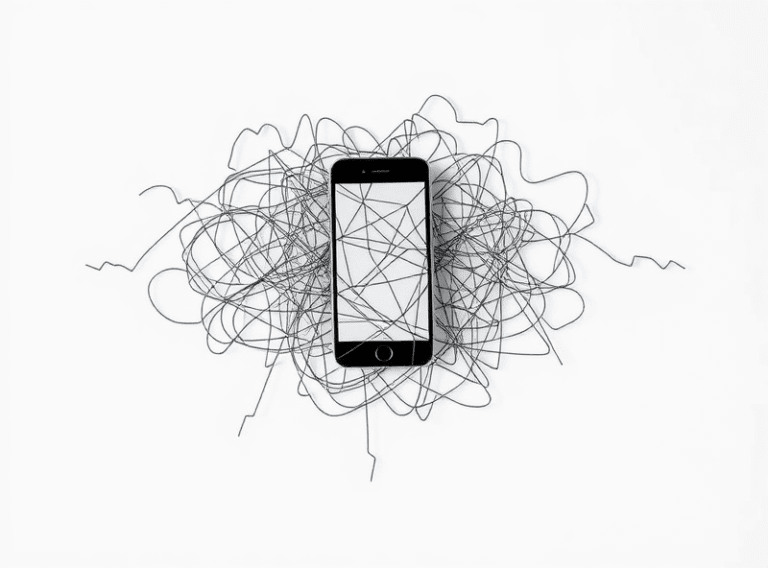

Rich snippets, featured snippets, knowledge panels, People Also Ask, image boxes: yeah, you’ve seen them, but that’s not the point. What matters is that they’re not decoration. Google didn’t sprinkle those in like glitter. They pull actual CTR weight, and sometimes derail the page-one game entirely.

Featured snippets, those little answer boxes, are the most misunderstood. A bunch of folks think they’re glory spots. Maybe — until you realize they often result in no actual click. The user reads the snippet and bounces before even seeing the site. Super fun when you finally snag one and your traffic dips. Honest-to-God happened to me in April: won a snippet for a niche query on a tools comparison site. Organic clicks dropped by like 30% the next day. Still top 3, still useful content, but nobody needed to click anymore.

On the flip side, image or video carousels can blast your impressions into low earth orbit, but good luck getting those to convert without killer markup and thumbnails that scream for attention. The actual SERP layout is part of your click funnel now, whether you want it or not. That means you have to deconstruct it like you’re debugging broken CSS. What lives above your result determines everything.

How Google Changes the Rules Based on User Intent

If you ever thought “well I rank #2, that’s great,” congrats, but also: maybe not. What that ranking means changes drastically based on whether the query triggers transactional, informational, or navigational layout logic.

For high-intent transactional keywords, Google loves to pile in ads, shopping carousels, and local packs. So your actual organic #2 result? Might be halfway down the fold, flanked by map tiles and product cards. You basically become page 1.8.

Ran into this with a small affiliate subdomain I was testing for router upgrades. Even when I hit position two for “best mesh wifi for apartments,” the visual dominance of Amazon links and YouTube reviews sucked all the juice. I eventually stopped tracking keyword rank and started tracking what actually shows up for that query — completely different game when you look at SERP visual stratification, not just positions.

When Google detects research-mode intent, it gets weirder. You’ll see People Also Ask boxes, sometimes embedded video timestamps, and the occasional Discover-style horizontal swipes, especially on mobile. These produce weirdly inconsistent CTR despite stable rankings, which leads me to…

Why Rank Tracking Alone Can’t Predict Responsiveness

This one’s gonna sting: your #3 ranking might be way less valuable than someone else’s #7. Visibility is not linear. It’s shaped by the features Google injects around you. In a few cases, I’ve outranked Forbes and TechRadar but landed below their extended organization cards and pull quotes.

There’s also the completely undocumented but very real behavior where Google regroups SERP layout on mobile if user pogo-sticking is high. That means if someone clicks your result, backs out, and clicks another, the slots may silently reshuffle in later sessions. No indexing update — just live layout variation. It drove me absolutely mad until I captured a before-and-after mobile screen recording confirming it. Completely normal behavior for Google now. Zero communication about it from their docs.

Anyway, I’ve stopped relying purely on Ahrefs and SE Ranking screenshots. Instead, I snapshot SERPs in a browser emulator (Firefox mobile mostly) with real user agents and look at who’s actually visible inside a 720px viewport. It ain’t always the folks in the top three.
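If you want to turn that eyeball check into something repeatable, here is a minimal sketch. It assumes you have already measured the rendered height of each block in a snapshot, and that the 720px figure is the viewport height. The `SerpBlock` class, the block kinds, the domains, and the pixel heights are all made up for illustration, not measured data.

```python
from dataclasses import dataclass


@dataclass
class SerpBlock:
    kind: str       # hypothetical labels: "ad", "shopping", "paa", "organic", ...
    label: str      # domain or feature name
    height_px: int  # rendered height taken from your own snapshot


def visible_organic(blocks, viewport_px=720):
    """Return organic results whose top edge falls inside the first viewport."""
    visible = []
    offset = 0  # running distance from the top of the page
    for block in blocks:
        if offset >= viewport_px:
            break  # everything from here on starts below the fold
        if block.kind == "organic":
            visible.append(block.label)
        offset += block.height_px
    return visible


# Illustrative snapshot only; heights are invented.
snapshot = [
    SerpBlock("ad", "sponsored-1", 180),
    SerpBlock("shopping", "product-carousel", 260),
    SerpBlock("organic", "example-a.com", 150),
    SerpBlock("paa", "people-also-ask", 220),
    SerpBlock("organic", "example-b.com", 150),
]

print(visible_organic(snapshot))  # ['example-a.com']
```

In this made-up layout the second organic result starts at 810px, so it never makes the first screen even though it is "position two."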

Clickless Searches and the Disappearing Middle Pages

Clickless searches are the ghost town of SEO. They look fine in impression counts but generate exactly zero engagement. Think definitions, weather, even “how many teaspoons in a tablespoon” level stuff. If your content targets those, cool — but just know you’re writing for the AI overlay, not humans.

It gets worse with carousel-type features. Imagine ranking #9. Not bad, right? But then a ‘Top stories’ carousel pushes you below the fold, plus a ‘People Also Ask’ accordion expands on load. Congrats, you may as well be on page two.

“Page one” doesn’t mean the top anymore. It means “somewhere between the second and seventh thumb scroll.”

This is particularly brutal for mid-tier product pages that don’t qualify for product schema features but are still relevant. I had a sleep tracker review slip from #4 to #6, but after a medical info panel and a product comparison module appeared, my page was a ghost. GSC impressions still there. Clicks tanked by half.

Trying to Optimize for ‘People Also Ask’ Is a Trap

Well-intentioned SEOs will tell you to write answers in a Q&A style to “rank in People Also Ask.” Ok, but here’s what usually happens:

You get one spot in the accordion. Users open it, skim it maybe. Click-through rate? Below rock bottom. Your content is treated like a free API endpoint. You educate. Google gets credit. User never visits.

The messed-up part? Sometimes those accordions source really outdated content. I once had a blog post from 2017 show up in a PAA box in 2023. I hadn’t touched that post in years. The answer wasn’t even correct anymore — but it was still formatted neatly, so the AI loved it. Zero indication that it was stale information.

One workaround I tried: published more recent posts with a richer schema setup and buried the outdated post via noindex. Two weeks later, the PAA still showed the old one. Google cached it like a collector’s item.

Snippet Hijacks from Lower-Ranking Domains

Featured snippets aren’t always taken from the highest-ranking site for the query. In fact, I’ve seen them pulled from position 6 or 7 when the formatting fits better. That’s maddening if you’re doing everything right and still get leapfrogged by some forum post that happened to use a numbered list.

There’s a logic flaw I noticed when trying to debug this: Google’s AI prefers clarity over authority in snippets. So if you have pristine, authoritative content but a murky block of paragraph text, and some other guy has a janky blog with bullet points, guess who wins the snippet?

This one clicked for me after I inspected a snippet that I swear was ripped from some hobby site’s sidebar widget — a single sentence wrapped in <li></li> tags. Something about that made Google throw it into the box while ignoring my actual article explaining the same concept across four structured headings. Wild.

Log Analysis from SERP Feature-Heavy Pages

If you want to be even vaguely scientific about SERP behavior, check your logs or analytics and segment by SERP layout. I took a narrow slice of traffic from a long-running dev tutorial on push notifications and compared it by query path. The difference between versions with a People Also Ask box versus those with a knowledge panel bumped in was absurd.

With PAA only: about 9 clicks per hundred impressions.

With both PAA and knowledge panel: 4 clicks per hundred.

Same position. Same keyword. The only difference was layout dilution.
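For anyone who wants to run the same comparison, a rough sketch of the arithmetic follows. It assumes you can export rows of (layout label, impressions, clicks). The layout labels are names I made up for the example, and the two rows just restate the per-hundred figures above rather than raw log data.

```python
from collections import defaultdict


def ctr_by_layout(rows):
    """Aggregate (layout, impressions, clicks) rows into CTR per layout."""
    totals = defaultdict(lambda: [0, 0])  # layout -> [impressions, clicks]
    for layout, impressions, clicks in rows:
        totals[layout][0] += impressions
        totals[layout][1] += clicks
    # Skip layouts with zero impressions to avoid dividing by zero.
    return {
        layout: clicks / imps
        for layout, (imps, clicks) in totals.items()
        if imps
    }


# Hypothetical layout labels; numbers mirror the per-hundred figures above.
rows = [
    ("paa_only", 100, 9),
    ("paa_plus_knowledge_panel", 100, 4),
]

ctr = ctr_by_layout(rows)
relative_drop = 1 - ctr["paa_plus_knowledge_panel"] / ctr["paa_only"]
print(ctr)
print(f"relative CTR drop: {relative_drop:.0%}")  # relative CTR drop: 56%
```

The hard part is not the math, it is labeling each impression with the layout that was actually served, which you have to capture yourself.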

Not widely published, but I cross-referenced this with a few findings from Moz and SEMrush articles that hinted at double-featured SERPs dropping CTR by half. I didn’t expect it to match that closely, but yup.

Realization here? You’re not just competing with other sites, you’re competing with Google UI elements. They don’t steal your rank, they steal your capacity to earn the click.

Failed Experiments with Schema Bloating

At some point I got it into my head that more structured data must equal more results bling. Which… sort of worked.

I went back and added every schema flavor I could justify (FAQ, HowTo, Product, Review), layered microdata on top of existing JSON-LD, and hoped for the best. I did all this on a headless CMS setup so I could spit out different schema blocks per request. Browsers didn’t care. Google? Confused.

After two crawl cycles, my Product snippets disappeared entirely. The only thing that remained was a single FAQ toggle — and even that was inconsistent. Later I learned about a kind-of-undocumented behavior where rich results may be de-prioritized if Google detects mixed schema with redundant purpose.

Basically: don’t throw multiple schema types on one page unless they harmonize cleanly. I put HowTo on a page already labeled as Review. Guess how fast it got suppressed? Keyboard-mashing levels of regret.
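A pre-publish check along these lines would have saved me the regret. This is only a sketch: it collects every `@type` from a page's JSON-LD blocks and flags combinations you have decided to treat as risky. The `SUSPECT_PAIRS` set is an assumption built from my own HowTo-on-a-Review-page mishap, not a rule Google documents, and the sample markup is invented.

```python
import json

# Assumption: pairs that burned me. Not an official list of any kind.
SUSPECT_PAIRS = {frozenset({"HowTo", "Review"})}


def collect_types(jsonld_blocks):
    """Return every @type found anywhere inside the given JSON-LD strings."""
    types = set()

    def walk(node):
        if isinstance(node, dict):
            declared = node.get("@type")
            if isinstance(declared, str):
                types.add(declared)
            elif isinstance(declared, list):
                types.update(t for t in declared if isinstance(t, str))
            for value in node.values():
                walk(value)  # descend into nested entities
        elif isinstance(node, list):
            for item in node:
                walk(item)

    for raw in jsonld_blocks:
        walk(json.loads(raw))
    return types


def suspect_combinations(types):
    """List the suspect pairs that are fully present on the page."""
    return sorted(sorted(pair) for pair in SUSPECT_PAIRS if pair <= types)


# Invented sample markup for illustration.
page_blocks = [
    '{"@context": "https://schema.org", "@type": "Review",'
    ' "itemReviewed": {"@type": "Product", "name": "Sleep tracker"}}',
    '{"@context": "https://schema.org", "@type": "HowTo",'
    ' "name": "Set up the tracker"}',
]

found = collect_types(page_blocks)
print(sorted(found))                # ['HowTo', 'Product', 'Review']
print(suspect_combinations(found))  # [['HowTo', 'Review']]
```

Add pairs to the set as you find your own losers; the point is to catch the overlap before a crawl cycle does.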

Live Testing SERPs with Puppeteer and Custom User Agents

One of the most useful experiments I ran recently (and this only works if you’re a little unhinged) was loading SERPs using Puppeteer with mobile-first user agents while chaining proxies randomly through US nodes. This let me simulate a fairly realistic flood of mobile searchers from different locales.

Here’s what jumped out:

- Featured snippets rotated by geography even for English-only queries

- People Also Ask box loads differently depending on the browser (Chrome 118 shows three; Safari on iOS loads five by default)

- Local 3-packs sometimes expand into 6-packs in lower-resolution emulations

- Video carousels vanished completely when spoofing a screen width under 320px

- One result used to show a better favicon on Firefox Android than Chrome desktop

Does this matter to everyone? Maybe not. But if you’re optimizing for a SERP that looks utterly different for half your users, yeah — it matters a lot.
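Once you have per-profile observations from runs like these, the comparison itself is simple. Here is a sketch that assumes you have already reduced each crawl to a set of feature names per emulated profile. The profile names, feature names, and the sample sets are hypothetical placeholders, not my recorded results.

```python
def varying_features(observations):
    """Map each feature that is NOT shown everywhere to the profiles that saw it.

    observations: dict of profile name -> set of SERP feature names.
    """
    if not observations:
        return {}
    feature_sets = [set(feats) for feats in observations.values()]
    seen_anywhere = set().union(*feature_sets)
    seen_everywhere = set.intersection(*feature_sets)
    return {
        feature: sorted(
            profile for profile, feats in observations.items() if feature in feats
        )
        for feature in sorted(seen_anywhere - seen_everywhere)
    }


# Placeholder data for illustration only.
observations = {
    "chrome-desktop": {"featured_snippet", "paa", "video_carousel"},
    "chrome-android-360w": {"featured_snippet", "paa", "video_carousel", "local_pack"},
    "narrow-320w": {"featured_snippet", "paa"},
}

for feature, profiles in varying_features(observations).items():
    print(feature, "->", profiles)
# local_pack -> ['chrome-android-360w']
# video_carousel -> ['chrome-android-360w', 'chrome-desktop']
```

Anything that shows up in this output is a feature some of your users see and others don't, which is exactly the stuff rank trackers flatten out.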